Get Complete Project Material File(s) Now! »

Consideration of the attacker model

The dependence on the input distribution is a fundamental issue in the definition of se-curity protocols. Ideally, the degree of protection guaranteed by a protocol should be high enough to protect against the expected attacker model without raising the com-plexity of the system higher than what can be handled effectively. This is all the more relevant in today’s cryptosystems such as RSA which rely on computational-theoretic (also known as cryptographic) security rather than information-theoretic security, i.e., the security only holds because decryption is intractable with current technologies. In real systems, a trade-off between security and complexity must therefore be met, which depends on the specific application of the protocol under concern. In this sec-tion, we will review some of the approaches which have been developed to take into account the attacker model in the security protocol.

Entropy measures Kopf¨ and Basin expressed an attacker’s remaining uncertainty about a secret as a function of the number of side-channel measurements made [KB07].

Several information-theoretic entropy measures were proposed to quantify the remain-ing uncertainty of the attacker. One of the motivations of the authors was to address a wide range of systems with different models of attackers, thus requiring different types of entropy. As discussed in [Mas94, Pli00, Cac97], the different notions are partially incomparable. Still, Kopf¨ and Basin gave interesting intuitive meanings of the different definitions in terms of guesses of the attacker. The first measure H(X), Shannon Entropy [Sha48], corresponded to a lower bound for the average number of binary questions that needed to be asked to determine the value of the random vari-able X. In case the attacker had already some knowledge, the conditional entropy H(X|Y ) was used, which expressed the remaining uncertainty of the attacker with prior knowledge Y . The second measure, the Guessing Entropy G(X) [Mas94] cor-responded to the average number of questions of the kind ”does X = x hold?” that had to be asked to guess the value of X. A generalization of this notion gave the third measure Marginal Guesswork Wα(X) [Pli00] which, for a fixed α ∈ [0, 1], quantified the number of questions of the kind ”does X = x hold?” that had to be asked until the value of X was correctly determined with a chance of success given by α. Condi-tional entropies could also be defined for guessing entropy and marginal guesswork. While lower bounds for G(X) could be given in terms of H(X), there was no general upper bound for G(X) in terms of H(X) and it was proven that no general inequality related Shannon entropy with marginal guesswork [Pli00].

Belief-based approach Recently, Clarkson et al. defined as information leakage the difference between the a priori accuracy of the guess of the attacker, and the a poste-riori one, after the attacker had made his observation [CMS08]. The accuracy of the guess was defined as the Kullback-Leibler distance between the belief (which was a weight attributed by the attacker to each input hypothesis) and the true distribution on the hypotheses. The reliability of this probabilistic belief-based approach (compared to e.g., a worst-case strategy) is however hard to evaluate, as different attackers may have different beliefs.

Absolute leakage and rate of leakage A recent effort in the specification of the attacker model was subsequently given by Boreale who studied the quantitative mod-els of information leakage in process calculi using pi-calculus, and who defined two different quantitative notions of information leakage differing essentially in the as-sumptions made on the power of the attacker [Bor09].

First, an attacker with full control over the process was assumed, who knew the program code and could conduct any number of tries over it. Moreover, the attacker could know the probability distribution of the inputs (given as random variable X) as well as other ”side information” publicly available and modelled as random vari-able Z. This corresponded therefore to a worst-case of attacker, i.e., an attacker with unlimited computational resources which allowed to define security guarantees inde-pendent from the computational power of the actual attacker. The leakage of interest was in this case the absolute leakage, i.e., the average amount of information that was leaked to the attacker by the program under these assumptions. As expected, and following the earlier results of Millen [Mil87] and Gray [III91], absolute leak-age coincided with the conditional mutual information I = H(X|Y ) − H(X|Y, Z), where Z = P (X, Y ) represented the outputs, i.e., the ”observational behaviour” of the protocol. As described previously, Clark et al. also considered conditional mutual information as measure of leakage [CHM07] but the main difference here was the consideration of concurrent programs by Boreale rather than sequential (and necessarily terminating) computations.

A more realistic situation was then considered, in which the resources of the at-tacker were limited and a notion of cost was introduced. More precisely, an attacker could only perform a predefined number n of tries over the protocol, each yielding a binary answer representing success of failure. The leakage of interest in this case was the leakage rate, i.e., the maximal number of bits of information that could be obtained per experiment over the protocol.

Boreale also studied the relation between these notions of leakage and proved that they were consistent, i.e the absolute leakage coincided with the maximum amount of information about the inputs that could be extracted by repeated experiments on the protocol, and that the cost the adversary had to pay to achieve it was at least the absolute leakage divided by the rate of leakage.

Interestingly, Boreale related his notions of leakage with the probability of er-ror and the guesswork of the adversary. The relations between absolute leakage and these notions could be easily derived from well-known inequalities involving Shan-non’s conditional entropy (from which mutual information could be deduced): Fano’s inequality [CT06] for a lower bound on the probability of error of the attacker and Massey’s [Mas94] and Jensen’s [CT06] inequalities for a lower bound on conditional guesswork.

The author also considered compositionality of leakage, and proved that for both notions a global system could not have a greater leakage (rate) than its individual subsystems, with the exception of parallel composition in the case of leakage rate.

Other previous work on the rate of leakage The notion of rate of leakage had already been introduced in previous works, albeit not that explicitly. Volpano and Smith considered the problem of trying to guess the k-bit value of a secret s using well-typed programs written in a deterministic programming language with match queries [VS00]. Under this formalism, the authors proved that no well-typed program running in time bounded by a polynomial in k could deduce s. Furthermore, if a probability distribution could be specified for s, then choosing the uniform distribution made the probability that a well-typed polynomial-time program could deduce s goes to zero as k increased.

The notion of process similarity developed by Di Pierro, Wiklicky and Hankin [DPHW02] and already mentioned previously replaced the traditional notion of (ab-solute) indistinguishability of processes [RS99] by a quantitative measure of their behavioural difference. Therefore, two behaviours though distinguishable, could still be considered as effectively non-interfering as long as their difference was below a specified threshold. This gave a notion of distance between behaviours which was connected to the number of tries necessary to distinguish them and thus came close to Boreale’s notion of rate of leakage.

Anonymity

Definition and Protocols

In my thesis, I will particularly focus on information flow in the context of anonymity protocols. Anonymity means that the identity of the user performing a certain action is maintained secret. It is an information-hiding property different from confidentiality (also known as secrecy) which consists in keeping secret the content of a message, and from privacy, which is more general and deals with the protection of personal data. One can roughly say that “confidentiality deals with data, while privacy deals with people”, and anonymity can be seen as a specific part of privacy.

Halpern and O’Neill describe in more details the distinction between anonymity and other information-hiding properties [HO03, HO05]. Several different formal def-initions and protocols for anonymity have been defined in the past. They are mainly based on process-calculus [SS96, RS01], epistemic logic [SS99, HO05] or “function views” [HS04].

In this thesis we will focus on the process calculus approach, which has already widely been used in the field of security [AG99, AL00, Low97, Ros95b, Sch96]. In the following, we will briefly review existing work on possibilistic (i.e., nondeterministic) and probabilistic approaches to anonymity, before motivating our choice to use both formalisms together and giving an overview of research related to this ”combined” approach.

Possibilistic versus probabilistic approaches

Possibilistic approach The possibilistic (i.e., purely nondeterministic) approach [SS96, RS01] is based on the so-called principle of confusion: a system is anonymous if the set of the possible outcomes is saturated with respect to the intended anonymous users. This means that for any observable trace produced by an anonymous user dur-ing the computation, there must exist for each other anonymous user an alternative computation which produces the same trace (modulo the identity of the anonymous users). In this approach, a distinction is made between total lack of anonymity and “some” anonymity, but all protocols that provide anonymity to some extent, from the least to the maximal degree, are considered equivalent. However, this is insufficient in most security systems in which it is desirable to be able to measure more precisely “how much” anonymity is preserved. In other words, we are not only interested in knowing whether events are possible or impossible (possibilistic approach), but rather in determining what is their likelihood to occur.

Probabilistic approach Probabilistic definitions of anonymity have already been investigated by several authors ([Cha88, HO03, BP05, RR98, CP05]), who distin-guished different strengths of anonymity, which will be described later in this section.

Several probabilistic anonymity protocols have also been developed in the past, such as the Dining Cryptographers [Cha88], Crowds [RR98], Onion Routing [SGR97] and Freenet [CSWH01]. We will focus here on Crowds and the Dining Cryptographer, which will be used as running example through this thesis.

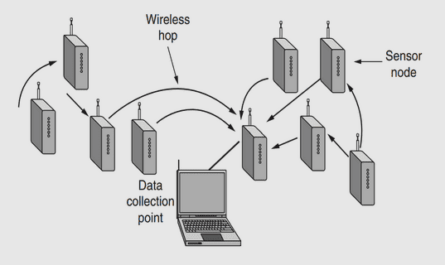

Crowds Crowds was presented by Reiter and Rubin as an anonymity protocol for web transactions [RR98]. It involves a public network represented as a set of nodes (the crowd) which may send messages to each other. The security goal consists in ensuring that any sender remains anonymous, even to the receiver of the message. In order to achieve this goal, a message sent over the network is forwarded randomly until it reaches the receiver.

More precisely, a node which wants to send a message chooses randomly (with uniform probability) a node (possibly himself) in the crowd and sends the message to him. Upon reception of the message, the following node tosses a biased coin and forwards the message if heads is obtained. Otherwise, the message is sent directly to the final receiver.

Figure 1.11: The Crowds protocol (S stands for the sender and R for the receiver of a message)

In this scenario, the receiver of a message can never determine who is the initial sender of the message, who remains therefore anonymous. Even if a node may know who was the previous node in the path, he cannot determine whether this node initiated or just forwarded the message.

This protocol is illustrated on Figure 1.11. As we will see later, the security goal defined by Reiter and Rubin for Crowds is probable innocence, which states that a node appears equally likely to have initiated the message as not to have. The protocol was extended to the case in which some of the nodes are corrupted, i.e., they can collaborate in order to reveal the identity of the initiator of the message.

Dining Cryptographers In the Dining Cryptographers (DC) protocol, proposed by Chaum, and illustrated in Figure 1.12, three cryptographers and a master are dining together and they agree that only one of the four, secretly chosen by the master, will have to pay the bill [Cha88]. At the end of the dinner, the master secretly tells to each cryptographer whether he has to be the payer or not. The cryptographers would like then to find out whether the payer is the master or if it is one of them, but without discovering which cryptographer is the payer in this latter case (i.e., they want the payer to remain anonymous if it is one of the cryptographers).

Chaum gave a solution to this problem: each cryptographer tosses a coin and the result is visible only to him and his right neighbour. Each cryptographer announces then ”agree” if the two coins he can see (his own coin and his left neighbour’s) are both head or both tail, and ”disagree” otherwise. However, if one cryptographer is the payer, he lies and says the opposite.

It can be proven that if the number of ”disagree” is even, then the master is paying. Otherwise, one of the cryptographer is the payer, but his identity is unknown. This result is easy to understand, since if the master is paying (i.e., all cryprographers tell the truth), there will be either zero ”disagree” if the three coins give the same result, or two ”disagree” if one of the coin differ from the two others. If one cryptographer is paying (and thus lies), this will add one to the previous sum, which gives indeed an odd number of ”disagree” in this case.

Combining nondeterminism and probabilities In this thesis we will follow the framework described by Bhargava and Palamidessi in [BP05, Pal06, BP09]. While previous formal definitions of anonymity were either nondeterministic or purely probabilistic, the authors of those papers consider the most general situation in which the users have a nondeterministic behaviour while the protocol follows a probability dis-tribution.

The choice of nondeterminism to characterize the users’ behaviour is motivated by the fact that usually nothing is known about the relative frequency by which each user performs the anonymous actions. Moreover, in several systems, this behaviour may change and be irregular over time. This is particularly relevant in distributed and concurrent systems where there may not be a global notion of time. In this case, the sequence of actions performed by an agent (e.g. a, b, c) may be seen as a different sequence (e.g. a, c, b, b, a, c) by other agents, depending on their relative space-time locations in the system. Nondeterminism may provide a convenient formalization in such contexts.

It has long been discussed whether nondeterminism could be assimilated to a probabilistic behaviour where the probability distribution is unknown, but the general agreement today is that nondeterminism does not follow a probability law whatsoever.

On the other hand, we are interested in anonymity protocols (such as Crowds or the Dining Cryptographers) which rely on random mechanisms to add noise and obfuscate the identity of the users. These strategies can usually be described by a probability law, hence the choice of a probabilistic model for the anonymity protocol.

This approach clearly separates the considerations of the protocol from the as-sumptions on the users, which models effectively the situation occuring in most real systems, where we want to have guarantees on the security of the anonymity protocol whoever the users may be.

Probabilistic Automata An appropriate formal description of this model re-quires therefore the capability to express both nondeterminism and probability. Many models proposed in the literature combine these two approaches, and one of the most general is the formalism of the probabilistic automata proposed by Segala and Lynch [SL95]. In [BP05] the notion of anonymity is formulated in terms of observables for processes in the probabilistic π-calculus, whose semantics is based on probabilistic automata. A function called scheduler is used to resolve nondeterminism, and the problem of the choice of the scheduler will be addressed later in this thesis.

Degrees of anonymity

Anonymity Metrics Several anonymity metrics have already been proposed in the past, which can mainly be classified as metrics based on the anonymity set, informa-tion theoretic entropy metrics and probability-based metrics.

Anonymity set metrics The simplest metrics rely on the anonymity set as de-fined by Chaum for the Dining Cryptographer [Cha88], i.e., the size of the set of users that may have sent a message through the system. The larger the set of potential initiators of the message, the stronger the anonymity of the sender. Therefore, the degree of anonymity was defined directly as the size n of the anonymity set, or as log(n). Unfortunately, these metrics only work when the adversary considers the a priori probability distribution of the possible senders to be uniform, an assumption we will try to relieve in this thesis.

Information theoretic entropy metrics An information theoretic entropy met-ric was then proposed by Danezis and Serjantov in 2002 [SD02a] in order to overcome the limitations of the previous approach and quantify anonymity in case of an arbitrary distribution of potential senders. Here, the uncertainty of an adversary is quantified in terms of Shannon entropy H(S) where S is the probability distribution on the com-munication participants regarding which one is the sender in a communication. Here, we have 0 ≤ H(S) ≤ log n, where H(S) = 0 corresponds to knowledge of the sender (i.e., pi = 1 if the sender is the user i) and H(S) = log n represents strong anonymity (i.e., S is the uniform distribution).

Diaz et al. [DSCP02a] proposed a normalization of this metric by defining the degree of anonymity as H(S)/ log n so that it varies between 0 and 1.

The main drawback of these metrics is their explicit reliance on the knowledge of an adversary, which may complicate the comparison of different real-life anonymity systems. Diaz and Sassaman [DSD04] addressed this issue by statistical analysis, i.e., by calculating for a large volume of traffic data gathered in two anonymity systems the maximum and minimum observed entropies over a long time period.

Probability-based metrics: the anonymity hierarchy The large flexibility given by probabilities compared to possibilistic approaches, already mentioned previously, was also used by several authors to define different degrees of anonymity, thus scal-ing the gap between the least and maximal anonymity that occurs with a possibilistic approach. In the next paragraph, we will see how this approach was used to define the so-called anonymity hierarchy.

The Anonymity Hierarchy Reiter and Rubin were the first in [RR98] to give a hi-erarchy of anonymity degrees, which, as they explain, adds a third aspect to the two other classical properties of an anonymity system, namely the anonymous communi-cation model and the attacker model, both defined in the eighties by Pfitzmann and Waidner [PW87]. An anonymous communication model may be characterized either by sender anonymity, where the identity of the sender is hidden while the receiver and the message may not be, or by receiver anonymity where the identity of the receiver is hidden, or by unlinkability of sender and receiver, where only the communication between sender and receiver is hidden but possibly not their identities. On the other hand, the attacker can be either modelled as an eavesdropper that may observe mes-sages exchanged in the system, or as collaborations of some senders, receivers, and other parties, or variations of these.

In order to complement these two properties, Reiter and Rubin defined a contin-uum of degrees of beliefs, ranging from absolute secrecy (no observable effect for the attacker) to provably exposed (the attacker can identify the sender and prove his identity to other parties). In between these two extrema and in decreasing degree of anonymity, the following three intermediary notions are defined:

• Beyond suspicion: From the attackers point of view, the sender appears no more likely to be the originator of the message than any other potential sender in the system.

• Probable innocence: From the attackers point of view, the sender appears no more likely to be the originator of the message than to not be the originator.

• Possible innocence: From the attackers point of view, there is a nontrivial prob-ability that the real sender is someone else.

Initially, these levels were tailored to Crowds (i.e., they depended in [RR98] on sym-metries inherent to Crowds that do not necessarily occur in a more general system) and it was shown that the degree of anonymity provided by the protocol for the sender is probable innocence.

Strong anonymity Reiter and Rubin’s hierarchy was a first step towards a real quantification of anonymity [RR98]. However, as mentioned previously, it was re-stricted to systems satisfying the assumptions in Crowds. Following work in this field aimed therefore at generalizing the anonymity hierarchy, starting from the definition of strong anonymity.

This notion had already been defined by Chaum who proved that his Dining Cryp-tographer protocol satisfies strong anonymity, under the assumption that the coins are fair [Cha88]. Intuitively, strong anonymity occurs when the observables (here the an-swers of the cryptographers) do not give additional knowledge to the observer on the secret information (here the identity of the payer). This notion of anonymity describes the ideal situation in which the protocol does not leak any information concerning the identity of the user. In [Cha88], it was formulated as the condition p(a|o) = p(a), where a is a secret action and o is an observable.

In the subsequent research, there have been basically two points of view to ex-press strong anonymity. The first one corresponds to Chaum’s definition [Cha88] and expresses “probabilistic noninterference”, i.e., the fact that the attacker does not learn anything about an anonymous action from the execution of the protocol, or in other words that the a priori probability p(a) of an anonymous action a is equal to its a posteriori probability p(a|o) after the observation of o. In [CP05] it was observed that this condition is equivalent to the equality of the likelihoods of all anonymous events: ∀a, a′, o, p(o|a) = p(o|a′) (1.14)

The second point of view, adopted by Halpern and O’Neill [HO03, HO05] con-siders the lack of confidence of the attacker and requires that after an observation o, all the a posteriori probabilities of the anonymous events p(a|o) (where a is an anony-mous action) be equal, so that the attacker cannot have confidence in one hypothesis more than in another. Formally, this means:

∀a, a′, o, p(a|o) = p(a′|o) (1.15)

The notion of strong anonymity was called conditional anonymity by Halpern and O’Neill in [HO03]. It is also equivalent to the anonymity level called beyond suspicion in Reiter and Rubin’s hierarchy [RR98].

It corresponds to the situation in which all anonymous events produce the same observables events with the same probability, which prevents the attacker from de-ducing anything concerning the anonymous events. This definition was adopted by Chatzikokolakis and Palamidessi, because it meets the security goal without relying on the distribution of the anonymous events [CP05].

Chatzikokolakis also studied compositionality of strong anonymity and proved that if S1 and S2 are two anonymity systems, then the composition S1; S2 is an ano-nymity system which satisfies strong anonymity if and only if S1 and S2 satisfy both strong anonymity [Cha07].

Probable Innocence Besides the notion of strong anonymity, a weaker notion is needed to characterize systems in which some leakage is allowed and which could correspond to the (informal) notion of ”probable innocence” in Reiter and Rubin’s hierarchy, defined as the situation in which the probability that the initiator sends the message to an attacker is at most 1/2. Halpern and O’Neill reinterpreted more formally this notion which reflects the quantification of an observer’s uncertainty [HO05], and thus comes close to the recent work of Clarkson et al. previously mentioned with their notion of attacker’s belief [CMS08]. A definition of anonymity in the process algebra CSP is also given in [HO05], as well as definitions of information hiding using function views.

In [CP05], a generalized notion of probable innocence is proposed, which com-bines both approaches, i.e., expresses a limit both on the attackers confidence and on the probability of detection. Moreover, it relaxes the two assumptions that were the main drawbacks of the former definitions: it does not depend on the probability distribution of the anonymous events as Halpern and O’Neill’s approach [HO05] and holds for systems without the symmetries present in Crowds [RR98]. On the other hand, it still reduces to the definition in [HO05] when the anonymous events have a uniform distribution and to the definition in [RR98] when the system is given the same symmetries as in Crowds, as expected from a correct modelization.

Notice that [CP05] contains a proof showing that as expected, the generalized probable innocence remains indeed weaker than strong anonymity (i.e., the latter im-plies the former).

Table of contents :

1 Introduction

1.1 Motivations

1.2 Related Work

1.2.1 Information Flow

1.2.1.1 Qualitative Information Flow

1.2.1.2 Quantitative Information Flow

1.2.1.3 Consideration of the attacker model

1.2.2 Anonymity

1.2.2.1 Definition and Protocols

1.2.2.2 Possibilistic versus probabilistic approaches

1.2.2.3 Degrees of anonymity

1.2.2.4 Anonymity Protocols as Noisy Channel

1.2.3 Belief Logics

1.3 Contribution

1.4 Publications

2 Preliminaries

2.1 Probability spaces

2.2 Probabilistic Automata

2.3 CCS with probabilistic choice

2.3.1 Syntax

2.3.2 Semantics

2.4 R´enyi Entropies, Shannon Entropy, and Mutual Information

2.5 Convexity and Corner Points

3 The process calculus approach

3.1 Introduction

3.2 CCSp with secret and observable actions

3.3 Modeling protocols for information-hiding

3.3.1 Protocols as channels

3.3.2 Process terms as channels

3.4 Inferring the secrets from the observables

3.5 Safe constructs

3.6 A case study: the Dining Cryptographers

3.7 Conclusion and future work

4 The logical approach

4.1 Introduction

4.2 A quantitative doxastic logic and its interpretation in CCSp

4.2.1 Modal operators for belief and truth

4.2.2 Syntax of DμCEC

4.2.3 CCSp revisited

4.2.4 Interpretation of DμCEC

4.2.5 Relation with standard (KD45) belief

4.3 Application: Dining Cryptographers

4.4 Application: Oblivious Transfer

4.4.1 Oblivious Transfer of one bit only

4.4.1.1 Description

4.4.1.2 Specification

4.4.2 The 1-out-of-2 Oblivious Transfer

4.4.2.1 Description

4.4.2.2 Specification

4.4.2.3 Implementation of the OT12 protocol using a publickey cryptosystem

4.4.2.4 Verification

4.5 Conclusion and Future Work

5 Other notions based on Bayesian risk

5.1 Introduction

5.2 Mutual Information and Capacity

5.3 Towards a more suitable notion of leakage

5.3.1 Probabilities of a right guess

5.3.2 Leakage and uncertainty

5.3.3 Multiplicative leakage

5.3.4 Additive leakage

5.4 Properties of the mutiplicative leakage

5.5 Properties of the additive leakage

5.6 Comparison

5.6.1 Comparison on a specific input distribution

5.6.2 Comparison of the worst cases

5.7 Conclusion

6 Conclusion

Bibliography