Get Complete Project Material File(s) Now! »

Integration of the budget constraint during inference:

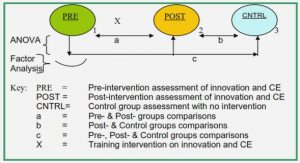

Feature Selection / Feature Acquisition This setting considers the case where, during inference, the inputs have missing features, and most of the time, no given feature beforehand. The system can ask for the features at some cost (uniform or not) to an oracle. However, during training, the features’ examples are often consid-ered to be fully available. The goal is to learn a model able to acquire some features for the smaller possible cost and predict conjointly. We provide further details in the next section. Note that this setting can also be referred to as active classification (e.g in the taxonomy presented in [Settles, 2010]).

We choose in this thesis to focus on two types of costly information: features, and la-bels. More precisely, we propose to tackle the budgeted learning problem by learn-ing acquisition strategies for this specific information. We provide in the next sec-tion a more detailed overview of the methods proposed for feature acquisition. We present the more particular case of meta active learning (i.e learning to acquire the right labels) in Section 2.2.

Cost-sensitive inference : feature selection and acquisition

This Section presents an overview of the models proposed to limit the cost of fea-tures used at test-time (i.e inference), as it is one of the two problems tackled in this thesis, notably in Chapter 3, 4 and 5. This problem can first be divided into two settings: uniform costs vs non-uniform costs of features. The first setting implies that each feature costs the same « price ». The goal resumes to reduce the number of features necessary to provide an accurate prediction. The second setting aims at integrating different costs between the features (e.g a medical test like blood sam-pling is cheaper than conducting an fMRI). Obviously, methods able to handle non-uniform costs can be straightforwardly used on tasks with uniform costs, making these methods more generic.

We also distinguish in the following two families of approaches: static methods (often referred to the problem of feature selection) vs adaptive models (feature acquisi-tion).

Recurrent Neural Networks Architectures

Several methods that are presented in this manuscript share a common idea with the (visual) attention models presented in Section 2.1.2 : the key idea is to design systems that acquire information on an input (a data-point) sequentially, in several steps. We chose to use recurrent architectures as well to build such systems in Chap-ters4, 5 and 6. We present in this section a description of various recurrent neural networks architectures designed for particular problems and inputs.

Neural networks systems for machine learning have known several evolutions since the perceptron in the late 50’s (Rosenblatt, 1958). Notably, the integration of non-linear functions, the multiplication of layers and the development of the back-propagation algorithm (Werbos, 1974; Rumelhart, Hinton, and Williams, 1985), have helped to obtain better and better results with these systems. The design of particular functions for image processing (e.g convolutional layers [Fukushima and Miyake, 1982; LeCun et al., 1998]) and more recently the general growth of deep learning (Salakhutdinov, Mnih, and Hinton, 2007) have known in the last decade a pick of popularization, notably due to the good performance achieved on various challeng-ing tasks from image classification, object recognition, to game playing and natural language processing.

Recurrent neural network (RNN) is a specific type of neural networks, commonly used to handle sequential inputs or sequential problem such as speech recognition (Sak, Senior, and Beaufays, 2014), handwriting recognition (Graves et al., 2009), or language translation (Sutskever, Vinyals, and Le, 2014). The main particularity of RNN is that they contain cyclic connections, based on recurrent weights (dupli-cated weights, see Figure 2.5a). This allows these architectures to build an internal state with memory ability, through a feedback loop. These systems are intrinsically dynamical, and their flexibility allows a variety of application setting. We present in this thesis several models that rely on these type of architectures to design se-quential, adaptive and interactive systems for our budgeted tasks. This section thus proposes a non-exhaustive technical review on recurrent neural networks. We first define the various settings RNN can be adapted to. We then provide details on the more specific architectures Long-Short Term Memory (LSTM), memory network, bi-directional RNN, and attention RNN.

Overview of the various settings

As we mentioned, a recurrent network is based on cyclic connections on their inter-nal state. The very basic architecture can be illustrated as in the Figure 2.5a, where an input x is integrated to the current hidden state s with weights U. This state is « updated » sequentially w.r.t. its previous value with an update function of weights W . Prediction is made based on the current state through function of weights V . If both x and y are non-sequential, this resume to a vanilla feed-forward network, as the « feedback loop » W is not used. Generally speaking, we can thus compute the update state st at step t as follow: st = gK (fW ((st 1); fU (xt)).

Different specific RNN architectures

These various settings can thus be implemented in a « vanilla » fashion, with single layer hidden state and non-linear function such as hyperbolic tangent, but more complex layers can be integrated, such as convolutional ones. However, as it is, RNN architectures suffer from one major drawback with the problem of vanishing gradient. Indeed, for long sequences, and more particularly if the supervision is not done at each step of the sequence, the back-propagated gradient will become smaller and smaller going back through the time steps. As a consequence, the net-works have a limited memory through time. We present in this section several types of architectures that try to overcome these limitations, first with specific « gated » cells, then with specific approaches that integrate an explicit memory in the net-work. Afterward, we present two techniques that aim at overcoming the « myopic » hard sequential aspect of RNN, as it considers inputs as ordered sequences and usually can not integrate « future » knowledge as it is considered « unseen ».

Long-Short Term Memory and Gated Recurrent Unit

Long-Short Term Memory (LSTM) 7 is an architecture for RNN that has been pro-posed in [Hochreiter and Schmidhuber, 1997]. It has known in the recent years various success on several tasks on sequential data, and various variations have been proposed (see [Greff et al., 2016] for an overview). The key idea that moti-vated the LSTM design is to have one part of the cell which can keep its state over time (memory cell), and one part that update/forget depending on the incoming information (gating unit). Figure 2.7a illustrates this specific cell. It contains three (or four) gates which control how the information « flows » in or out of the memory. The « forget » gate typically allows to « start afresh », by forgetting the previously seen inputs. The « input » gate allows integrating more or less information depending on what is observed. The Gated Recurrent Unit (Cho et al., 2014a; Cho et al., 2014b) is close to LSTM, as it also relies on gates that enable « forgetting », and on additive functions for the internal state. The use of additive function implies that the internal state is not « squashed » at each step and thus allows the gradient to flow through the steps when back-propagating. This, therefore, prevents from the vanishing gradient problem mentioned earlier.

Related work on recommender systems and cold start

The recommendation problem has been intensively studied in the recent years, more particularly since the Netflix challenge in 2009 (Bennett and Lanning, 2007). The overall goal is to suggest the most relevant items (movies, videos, advertise-ments, products) so that the user will watch, listen, click or buy the item. The set-ting can, therefore, vary a lot between one application to another. Indeed, some systems, such as imdb or amazon, can access ratings of users on some items (e.g in Figure 3.1), while others will have more implicit data like sequence of songs listened to. We propose to focus our approach on cases where explicit feedback (e.g ratings) is obtained on items. In such setting, the recommendation problem is often seen as accurately predicting the ratings given by each user on the items. Then, the recommendation is done by suggesting the item with the highest predicted score. Thus, distance prediction error (RMSE) is generally used in this case. However this topic has been widely discussed, and several other performance measures can be used, such as top-K.

Table of contents :

Acknowledgements

Abstract

1 Introduction

1.1 Classical supervised machine learning

1.2 Budgeted learning

1.3 Thesis structure

1.3.1 Chapter 3 : Static Feature Selection

1.3.2 Chapters 4 and 5 : Adaptive Feature acquisition

1.3.3 Chapter 6 : Meta Active Learning

1.3.4 Other contributions

2 Supervised learning under budget constraints: Background

2.1 Budgeted Learning

2.1.1 An overview of the different types of cost

Taxonomy of budgeted learning methods

Integration of budget constraint during training

Integration of the budget constraint during inference:

2.1.2 Cost-sensitive inference : feature selection and acquisition

Static methods : feature selection

Adaptive methods: feature acquisition

2.2 Meta Active Learning

2.2.1 Active learning

2.2.2 Meta learning

2.2.3 One-shot learning

2.2.4 Meta Active Learning

2.3 Recurrent Neural Networks Architectures

2.3.1 Overview of the various settings

2.3.2 Different specific RNN architectures

Long-Short Term Memory and Gated Recurrent Unit

Memory Networks

Bidirectional RNN

Order-invariant RNN

2.4 Closing remarks

3 Feature Selection – Cold-start application in Recommender Systems

3.1 Introduction

3.2 Related work on recommender systems and cold start

3.2.1 Collaborative Filtering

3.2.2 Cold-start recommendation

3.3 Formulation of representation learning problem for user cold start

3.3.1 Inductive Additive Model (IAM)

Continuous Learning Problem

Cold-Start IAM (CS-IAM) Learning Algorithm

3.3.2 IAM and classical warm collaborative filtering

3.3.3 IAM from cold-start to warm collaborative filtering

3.4 Experiments

3.4.1 Experimental protocol

3.4.2 Results

Collaborative Filtering

Cold-start Setting

Mixing Cold-start andWarm Recommendation

3.5 Closing remarks

4 Adaptive cost-sensitive feature acquisition – Recurrent Neural Network Approach

4.1 Introduction

4.2 Adaptive feature acquisition with a recurrent neural network architecture

4.2.1 Definition of the problem

4.2.2 Generic aspects of the model

4.2.3 Recurrent ADaptive AcquisitIon Network (RADIN)

Components of RADIN

Loss and learning

Inference

4.3 Experiments

4.3.1 Experimental protocol

4.3.2 Results

Illustration of the adaptive acquisition process

Uniform cost

Cost-sensitive setting

4.4 Closing remarks

5 Adaptive cost-sensitive feature acquisition – Stochastic Approach

5.1 Introduction

5.2 Related work

5.2.1 Reinforcement learning

5.2.2 Policy Gradient

Formalization of the problem

Computing the gradient

Using policy gradient with RNN

5.3 Definition of the stochastic model

5.3.1 Cost-sensitive Learning problem with stochastic acquisition method

5.3.2 Gradient computation

5.4 REpresentation-based Acquisition Models (REAMs)

5.4.1 Instances of REAM

5.5 Experimental results

5.5.1 Feature Selection Problem

5.5.2 Cost-sensitive setting

5.5.3 Comparison of the learning complexity

5.6 Closing remarks

6 Meta Active Learning

6.1 Introduction

6.2 Definition of setting

6.3 One-step acquisition model

6.4 Experiments

6.5 Perspective : Hybrid Model

7 Conclusion and Closing Remarks

7.1 Future directions

Bibliography