
Fully automated volumetry software

The Neuroreader™ software (http://www.brainreader.net) is a commercial clinical brain image analysis tool (Ahdidan et al., 2017). The system provides the volumes of the following structures: intracranial cavity, tissue categories (WM, GM and CSF), subcortical GM structures (putamen, caudate, pallidum, thalamus, hippocampus, amygdala and accumbens) and lobes (occipital, parietal, frontal and temporal). Processing times range from 3 to 7 minutes depending on image size, irrespective of magnetic field strength.

The volBrain software (http://volBrain.upv.es) is a freely available online academic brain image analysis tool (Manjon and Coupé, 2015). The volBrain system takes around 15 minutes to perform the full analysis and provides the same volumes as Neuroreader™, except for the lobar volumes, which only Neuroreader™ provides. However, volBrain additionally provides hemisphere, brainstem and cerebellum segmentations, which were not used in this study.

Preprocessing: extraction of whole gray matter maps

All T1-weighted MR images were segmented into gray matter (GM), white matter (WM) and CSF tissue maps using the unified segmentation routine of Statistical Parametric Mapping with the default parameters (SPM12, London, UK) (Ashburner and Friston, 2000). A population template was calculated from the GM and WM tissue maps using the DARTEL diffeomorphic registration algorithm with the default parameters (Ashburner, 2007). The obtained transformations, together with a spatial normalization to MNI space, were applied to the GM tissue maps, and all maps were modulated to ensure that the overall amount of tissue remains constant. A 12 mm smoothing was then applied, as classification performed better with this amount of smoothing than with unsmoothed or less smoothed images.
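The modulation and smoothing steps can be sketched in a few lines of NumPy/SciPy. This is a minimal illustration, not the SPM implementation: it assumes that "12 mm" refers to the full width at half maximum (FWHM) of a Gaussian kernel, that the normalized maps have 1.5 mm isotropic voxels, and that the Jacobian determinant of the deformation is available as an array. The function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def fwhm_to_sigma_voxels(fwhm_mm: float, voxel_size_mm: float) -> float:
    """Convert a Gaussian FWHM in millimetres to a standard deviation in voxels."""
    return fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0))) / voxel_size_mm


def modulate_and_smooth(warped_gm: np.ndarray,
                        jacobian_det: np.ndarray,
                        fwhm_mm: float = 12.0,
                        voxel_size_mm: float = 1.5) -> np.ndarray:
    """Modulate a normalized GM map, then smooth it with an isotropic Gaussian.

    warped_gm: GM probability map resampled into template space (hypothetical input).
    jacobian_det: voxel-wise Jacobian determinant of the deformation (assumed available).
    """
    # Modulation: multiply by the local volume change so that the total
    # amount of GM is preserved despite the spatial warping.
    modulated = warped_gm * jacobian_det
    # Isotropic Gaussian smoothing (assumption: 12 mm is the kernel FWHM).
    sigma = fwhm_to_sigma_voxels(fwhm_mm, voxel_size_mm)
    return gaussian_filter(modulated, sigma=sigma)


# Toy usage: a uniform GM map in a region that was compressed by a factor of 2.
gm = np.full((40, 40, 40), 0.5)
jac = np.full((40, 40, 40), 2.0)
smoothed = modulate_and_smooth(gm, jac)
```

Smoothing a uniform modulated map leaves it uniform, because the Gaussian weights are normalized; on real data the kernel width trades spatial specificity for robustness to registration errors.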

SVM classification

Whole gray matter (WGM) maps were then used as input to a high-dimensional classifier, a linear support vector machine (SVM). In brief, the linear SVM looks for the hyperplane that best separates two given groups of patients in a very high-dimensional space whose dimensions are the voxel values. In such an approach, the machine learning algorithm automatically learns the spatial pattern (set of voxels and their weights) that discriminates between diagnostic groups. Importantly, the classifier does not use prior information such as anatomical boundaries between structures or a specific anatomical structure (e.g. hippocampus) expected to be affected in a given condition. Please refer to Cuingnet et al. (2011) for more details.
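The idea of a voxel-wise linear SVM can be illustrated on synthetic data. In this sketch, scikit-learn's `SVC` with a linear kernel stands in for the classifier, and the toy volume, group sizes and simulated atrophy are all hypothetical. Each subject is one row of flattened voxel values, and the learned weight vector, reshaped to the volume grid, is the discriminative spatial pattern.

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(0)
shape = (6, 6, 6)                  # toy "brain" volume
n_voxels = int(np.prod(shape))
n_per_group = 30

# Each subject is one row: the flattened voxel values of its GM map.
controls = rng.normal(0.5, 0.05, size=(n_per_group, n_voxels))
patients = rng.normal(0.5, 0.05, size=(n_per_group, n_voxels))

# Simulated focal GM loss in three voxels of the patient group.
atrophied = np.ravel_multi_index(([1, 1, 2], [2, 3, 2], [2, 2, 3]), shape)
patients[:, atrophied] -= 0.1

X = np.vstack([controls, patients])
y = np.array([0] * n_per_group + [1] * n_per_group)

clf = SVC(kernel="linear", C=1.0).fit(X, y)

# One weight per voxel: reshaping recovers a spatial map of the
# discriminative pattern, learned without any anatomical prior.
weight_map = clf.coef_.reshape(shape)
```

No region of interest is given to the classifier; any localization in `weight_map` comes only from the data.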

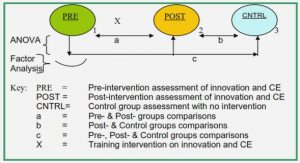

SVM classification was performed for each possible pair of diagnostic groups (e.g. EOAD vs FTD, LOAD vs FTD, etc.). The performance measure was the balanced diagnostic accuracy, defined as (sensitivity + specificity)/2. Unlike standard accuracy, balanced accuracy allows an objective comparison of the performance of different classification tasks, even in the presence of unbalanced groups (Cuingnet et al., 2011).
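A small worked example shows why balanced accuracy is preferred with unbalanced groups. The counts below are hypothetical: 10 patients and 90 controls, classified by a trivial rule that labels everyone as a control.

```python
def balanced_accuracy(tp: int, fn: int, tn: int, fp: int) -> float:
    """Balanced accuracy = (sensitivity + specificity) / 2."""
    sensitivity = tp / (tp + fn)   # true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    return (sensitivity + specificity) / 2


def accuracy(tp: int, fn: int, tn: int, fp: int) -> float:
    """Standard accuracy: fraction of correctly classified subjects."""
    return (tp + tn) / (tp + fn + tn + fp)


# Trivial classifier on a 10 vs 90 task: every subject predicted as control.
print(accuracy(tp=0, fn=10, tn=90, fp=0))           # 0.9
print(balanced_accuracy(tp=0, fn=10, tn=90, fp=0))  # 0.5
```

Standard accuracy rewards the majority-class guess with 0.9, whereas balanced accuracy stays at the chance level of 0.5 regardless of group sizes.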

To compute unbiased estimates of classification performance, we used 10-fold cross-validation: the dataset is split into ten folds and, in each of the ten iterations, one fold (10% of the data) is used for testing while the remaining 90% is used for training. This ensures that the patient currently being classified has not been used to train the classifier; using the same patients for both training and testing would bias the estimate, a problem known as “double-dipping”. In addition, the linear SVM has one hyper-parameter to optimize (the cost parameter C), which was optimized using a grid search. Again, to keep the evaluation fully unbiased, hyper-parameter tuning was performed within a second, nested 10-fold cross-validation procedure.
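The nested procedure can be sketched with scikit-learn by wrapping a grid search inside an outer cross-validation loop. This is an illustration on random toy data, not the original code: the grid of C values, the use of stratified folds and the random seeds are assumptions.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score
from sklearn.svm import SVC

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 50))      # toy data: 100 subjects x 50 features
y = np.repeat([0, 1], 50)
X[y == 1, :5] += 0.8                # weak group difference on 5 features

# Inner loop: choose C by grid search on the training folds only.
inner_cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=1)
param_grid = {"C": [10.0 ** k for k in range(-3, 3)]}   # assumed grid
tuned_svm = GridSearchCV(SVC(kernel="linear"), param_grid,
                         cv=inner_cv, scoring="balanced_accuracy")

# Outer loop: each subject is tested exactly once, by a model whose
# training (including hyper-parameter tuning) never saw that subject.
outer_cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=2)
scores = cross_val_score(tuned_svm, X, y, cv=outer_cv,
                         scoring="balanced_accuracy")
print(f"balanced accuracy: {scores.mean():.2f} +/- {scores.std():.2f}")
```

Because `GridSearchCV` is refitted inside every outer training split, the test fold never influences the choice of C.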

Finally, to allow a fair comparison between WGM maps and AVS volumes, we also performed SVM classification using the volumes from each AVS as input, with all regional volumes (for a given AVS) used simultaneously in a multivariate manner.
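Using regional volumes in a multivariate manner amounts to stacking them into one feature vector per subject. The sketch below is hypothetical throughout: the region names, simulated volumes and fixed C are illustrative, and the standardization step is an assumption added because regional volumes differ widely in magnitude, not a step stated in the text.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Hypothetical region names; a real AVS report would define its own list.
REGIONS = ["hippocampus_l", "hippocampus_r", "amygdala_l", "amygdala_r", "thalamus_l"]


def volumes_to_matrix(subjects):
    """Stack per-subject {region: volume} dicts into a subjects x regions matrix."""
    return np.array([[s[r] for r in REGIONS] for s in subjects], dtype=float)


rng = np.random.default_rng(0)
subjects = [{r: rng.normal(4000.0, 300.0) for r in REGIONS} for _ in range(60)]
y = np.repeat([0, 1], 30)
for s, label in zip(subjects, y):
    if label == 1:                  # simulated hippocampal atrophy
        s["hippocampus_l"] -= 500.0
        s["hippocampus_r"] -= 500.0

X = volumes_to_matrix(subjects)
# All regions enter the classifier together (multivariate), after scaling.
model = make_pipeline(StandardScaler(), SVC(kernel="linear", C=1.0))
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
scores = cross_val_score(model, X, y, cv=cv, scoring="balanced_accuracy")
```

Putting the scaler inside the pipeline ensures it is fitted on the training folds only, consistent with the unbiased evaluation described above.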

Radiological classification

Two neuroradiologists specialized in the evaluation of dementia (AB, with 8 years of experience, and SS, with 4 years of experience) performed a visual classification of three diagnostic pairs on the same dataset: FTD vs EOAD, depression vs LOAD and LBD vs LOAD. We chose FTD vs EOAD and depression vs LOAD for their relevance in clinical practice, and LBD vs LOAD because the SVM classifier yielded only moderate accuracies and because the diagnosis of LBD based on MRI is difficult. The neuroradiologists were blind to all patient data except MRI.

Automatic SVM classification from AVS volumes

To fully compare the AVS with our SVM-WGM classification, Figure 2.4 presents the results of SVM classification from all volumes obtained with volBrain and Neuroreader™, in addition to SVM classification based on WGM. In general, results were slightly lower than with SVM classification from WGM. Overall, volBrain and Neuroreader™ performed similarly, although one or the other tool achieved slightly higher performance in some specific cases.

Conversion of the ADNI dataset to BIDS

The ADNI to BIDS converter requires the user to have downloaded all the ADNI study data (tabular data in csv format) and the imaging data of interest. Note that the downloaded files must be kept exactly as they were downloaded. The following steps are then performed automatically by the converter, without user intervention. To convert the imaging data to BIDS, a list of subjects and their sessions is first obtained from the ADNIMERGE spreadsheet. For each modality of interest, this list is compared to the list of available scans, as provided by modality-specific csv files (e.g. MRILIST.csv). If the modality was acquired for a given subject-session pair and several scans and/or preprocessed images are available, only one is converted. For T1 scans, when several are available for a single session, the preferred scan (as identified in MAYOADIRL_MRI_IMAGEQC_12_08_15.csv) is chosen. If no preferred scan is specified, the scan of highest quality (as defined in MRIQUALITY.csv) is selected. If no quality control is found, the first scan is chosen. Gradwarp and B1-inhomogeneity corrected images are selected when available, as these corrections can be performed in a clinical setting; otherwise the original image is selected. For ADNI 1, 1.5 T images are preferred since they are available for a larger number of patients. For FDG PET scans, the images co-registered and averaged across time frames are selected, and scans failing quality control (if specified in PETQC.csv) are discarded. Note that AV45 PET scans are also converted, though they are not used in the experiments. Once the images of interest have been selected and the paths to the image files identified, the imaging data can be converted to BIDS. Images in DICOM format are first converted to NIfTI using the dcm2niix tool or, in case of error, the dcm2nii tool (Li et al., 2016). The BIDS folder structure is then generated by creating a subfolder for each subject, a session subfolder inside each subject subfolder, and a modality subfolder inside each session subfolder. Finally, each NIfTI image is copied to the appropriate folder and renamed to follow the BIDS specification.

Clinical data are also converted to BIDS. Data that do not change over time, such as the subject’s sex, education level or diagnosis at baseline, are obtained from the ADNIMERGE spreadsheet and gathered in the participants.tsv file, located at the top of the BIDS folder hierarchy. Session-dependent data, such as clinical scores, are obtained from specific csv files (e.g. MMSE.csv) and gathered in <subjectID>_session.tsv files in each participant subfolder. The clinical data to be converted are defined in a spreadsheet (clinical_specifications_adni.xlsx) that is available with the code of the converter.
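The T1 scan selection rule for a single session can be expressed as a short priority chain. This is a hypothetical helper written for illustration, not the converter's actual code; the scan identifiers are invented, and the quality ratings are assumed to be numbers where higher means better.

```python
from typing import Dict, List, Optional


def select_t1_scan(scan_ids: List[str],
                   preferred: Optional[str] = None,
                   quality: Optional[Dict[str, int]] = None) -> str:
    """Pick one T1 scan for a subject-session pair.

    scan_ids: candidate scans for the session, in their original order.
    preferred: scan flagged as preferred in the QC file, if any.
    quality: scan -> quality rating (assumed: higher is better), if any.
    """
    # 1. A scan explicitly flagged as preferred wins.
    if preferred in scan_ids:
        return preferred
    # 2. Otherwise take the best-rated scan (ties keep the original order).
    if quality:
        rated = [s for s in scan_ids if s in quality]
        if rated:
            return max(rated, key=lambda s: quality[s])
    # 3. Without any quality control, fall back to the first scan.
    return scan_ids[0]


print(select_t1_scan(["S1", "S2", "S3"], preferred="S2"))            # S2
print(select_t1_scan(["S1", "S2", "S3"], quality={"S1": 2, "S3": 3}))  # S3
print(select_t1_scan(["S1", "S2"]))                                  # S1
```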

Conversion of the AIBL dataset to BIDS

The AIBL to BIDS converter requires the user to have downloaded the AIBL non-imaging data (tabular data in csv format) and the imaging data of interest. The conversion of the imaging data to BIDS relies on modality-specific csv files that provide the list of available scans. For each AIBL participant and session, the single available T1w MR image is converted. Note that even though they are not used in this work, we also convert the Florbetapir, PiB and Flutemetamol PET images (only one image per tracer is available for each session). Once the images of interest have been selected and the paths to the image files identified, the imaging data are converted to BIDS following the same steps as described in the previous section. The conversion of the clinical data relies on the list of subjects and sessions obtained after the conversion of the imaging data and on the csv files containing the non-imaging data. Data that do not change over time are gathered in the participants.tsv file, located at the top of the BIDS folder hierarchy, while session-dependent data are gathered in <subjectID>_session.tsv files in each participant subfolder. As for the ADNI converter, the clinical data to be converted are defined in a spreadsheet (clinical_specifications.xlsx), available with the code of the converter, which the user can modify.
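The resulting layout can be sketched with the standard library. The subject label, session label and clinical columns below are hypothetical; the T1w path follows the BIDS `sub-<label>/ses-<label>/anat/sub-<label>_ses-<label>_T1w.nii.gz` pattern, and the per-subject tabular file follows the `<subjectID>_session.tsv` naming used in the text.

```python
import csv
import tempfile
from pathlib import Path


def bids_t1w_path(bids_root: Path, subject: str, session: str) -> Path:
    """Destination of a T1w image inside the BIDS hierarchy."""
    sub, ses = f"sub-{subject}", f"ses-{session}"
    return bids_root / sub / ses / "anat" / f"{sub}_{ses}_T1w.nii.gz"


def write_tsv(path: Path, rows: list, columns: list) -> None:
    """Write a list of dicts as a tab-separated file with a header line."""
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=columns, delimiter="\t")
        writer.writeheader()
        writer.writerows(rows)


root = Path(tempfile.mkdtemp())   # stands in for the BIDS output folder
t1_path = bids_t1w_path(root, "AIBL1", "M00")

# Time-invariant data: one row per participant, at the top of the hierarchy.
write_tsv(root / "participants.tsv",
          [{"participant_id": "sub-AIBL1", "sex": "F"}],
          ["participant_id", "sex"])
# Session-dependent data: one file per participant, one row per session.
write_tsv(root / "sub-AIBL1" / "sub-AIBL1_session.tsv",
          [{"session_id": "ses-M00", "MMSE": 28}],
          ["session_id", "MMSE"])
```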

Conversion of the OASIS dataset to BIDS

The OASIS to BIDS converter requires the user to have downloaded the OASIS-1 imaging data and the associated csv file. To convert the imaging data to BIDS, the list of subjects is obtained from the downloaded folders. For each subject, among the multiple T1w MR images available, we select the average of the motion-corrected, co-registered individual images resampled to 1 mm isotropic voxels, located in the SUBJ_111 subfolder. Once the paths to the image files have been identified, the images in Analyze format are converted to NIfTI using the mri_convert tool of FreeSurfer (Fischl, 2012), the BIDS folder hierarchy is created, and the images are copied to the appropriate folders and renamed. The clinical data are converted using the list of subjects obtained after the conversion of the imaging data and the csv file containing the non-imaging data, as described in the previous section.
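Locating the averaged image for each subject can be sketched with `pathlib`. This hypothetical helper assumes one folder per subject directly under the download root and Analyze images stored as `.img`/`.hdr` pairs (it returns the `.img` file); the folder names in the demo are illustrative. The conversion itself would still be done with mri_convert, which is not shown.

```python
import tempfile
from pathlib import Path
from typing import Dict


def find_oasis_t1(oasis_root: Path) -> Dict[str, Path]:
    """Map each subject folder to the .img file found in its SUBJ_111 subfolder."""
    selected = {}
    for subject_dir in sorted(p for p in oasis_root.iterdir() if p.is_dir()):
        # Search at any depth, but only inside folders named SUBJ_111.
        matches = sorted(subject_dir.rglob("SUBJ_111/*.img"))
        if matches:
            selected[subject_dir.name] = matches[0]
    return selected


# Demo on a hypothetical folder layout.
tmp = Path(tempfile.mkdtemp())
avg_img = tmp / "OAS1_0001_MR1" / "PROCESSED" / "MPRAGE" / "SUBJ_111" / "avg.img"
avg_img.parent.mkdir(parents=True)
avg_img.touch()
raw_img = tmp / "OAS1_0001_MR1" / "RAW" / "raw1.img"   # must be ignored
raw_img.parent.mkdir(parents=True)
raw_img.touch()
(tmp / "OAS1_0002_MR1").mkdir()                        # no SUBJ_111 image
found = find_oasis_t1(tmp)
```

Subjects without a SUBJ_111 image are simply skipped, so they would not appear in the converted dataset.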

Preprocessing of T1-weighted MR images

For anatomical T1w MRI, the preprocessing pipeline was based on SPM12. First, the Unified Segmentation procedure (Ashburner and Friston, 2005) is used to simultaneously perform tissue segmentation, bias correction and spatial normalization of the input image. Next, a group template is created with DARTEL, an algorithm for diffeomorphic image registration (Ashburner, 2007), from the subjects’ tissue probability maps in native space (usually GM, WM and CSF) obtained at the previous step. This step yields not only the group template but also the deformation fields from each subject’s native space into the DARTEL template space. Lastly, the DARTEL to MNI method (Ashburner, 2007) is applied to register the native space images into MNI space: for a given subject, its flow field into the DARTEL template is combined with the transformation of the DARTEL template into MNI space, and the resulting transformation is applied to the subject’s tissue maps. As a result, all images are in a common space, providing voxel-wise correspondence across subjects.
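The "combine, then apply once" idea behind the DARTEL to MNI step can be illustrated on point coordinates. This is a conceptual sketch only: the nonlinear warp and the affine matrix are hypothetical stand-ins, and SPM actually composes deformation fields on voxel grids and resamples in the inverse (pull) direction.

```python
import numpy as np


def affine(matrix):
    """Wrap a 4x4 homogeneous matrix as a mapping on (N, 3) arrays of mm coordinates."""
    def apply(points):
        homog = np.c_[points, np.ones(len(points))]
        return (homog @ matrix.T)[:, :3]
    return apply


def compose(*transforms):
    """Chain point mappings so that compose(f, g)(x) == g(f(x))."""
    def composed(points):
        for transform in transforms:
            points = transform(points)
        return points
    return composed


# Hypothetical stand-in for a subject's flow field into the DARTEL template.
def native_to_template(points):
    return points + 0.5 * np.sin(points / 10.0)


# Hypothetical affine map from the DARTEL template to MNI space.
template_to_mni = affine(np.array([[1.02, 0.0, 0.0, -1.0],
                                   [0.0, 0.98, 0.0, 2.0],
                                   [0.0, 0.0, 1.01, -0.5],
                                   [0.0, 0.0, 0.0, 1.0]]))

# A single native -> MNI mapping, so each image needs only one resampling.
native_to_mni = compose(native_to_template, template_to_mni)

pts = np.array([[0.0, 0.0, 0.0], [10.0, -20.0, 30.0]])
mni_pts = native_to_mni(pts)
```

Applying one combined transformation avoids interpolating the tissue maps twice, which would add extra blurring.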

Preprocessing of PET images

The PET preprocessing pipeline relies on SPM12 and on the PETPVC tool for partial volume correction (PVC) (Thomas et al., 2016). We assume that each PET image has a corresponding T1w image that has been preprocessed using the pipeline described above. The first step is to register the PET image to the corresponding T1w image in native space using the Co-register method of SPM (Friston et al., 1995). An optional PVC step with the region-based voxel-wise (RBV) method (Thomas et al., 2011) can then be performed, using the different tissue maps of the T1w image in native space as input regions. Then, the PET image is registered into MNI space using the same transformation as for the corresponding T1w image (the DARTEL to MNI method). The PET image in MNI space is then intensity-normalized according to a reference region (eroded pons for FDG PET), yielding a standardized uptake value ratio (SUVR) map. Finally, non-brain regions are masked using a binary mask obtained by thresholding the sum of the subject’s GM, WM and CSF tissue probability maps in MNI space. The resulting masked SUVR images are also in a common space and provide voxel-wise correspondence across subjects.
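The last two steps, intensity normalization and brain masking, reduce to a few array operations. In this sketch the reference-region mask, the tissue maps and the 0.5 threshold on the summed tissue probabilities are hypothetical inputs; the text does not state the threshold value.

```python
import numpy as np


def suvr_and_mask(pet, ref_region, gm, wm, csf, threshold=0.5):
    """Intensity-normalize a PET image in MNI space and mask out non-brain voxels.

    pet: PET image already registered to MNI space.
    ref_region: boolean mask of the reference region (e.g. eroded pons for FDG).
    gm, wm, csf: tissue probability maps of the same subject in MNI space.
    threshold: hypothetical cut-off on the summed tissue probabilities.
    """
    # SUVR: ratio of each voxel to the mean uptake in the reference region.
    suvr = pet / pet[ref_region].mean()
    # Binary brain mask from the summed tissue probabilities.
    brain = (gm + wm + csf) > threshold
    return np.where(brain, suvr, 0.0)


# Toy example: reference uptake of 4, uptake of 2 elsewhere.
pet = np.full((4, 4, 4), 2.0)
pet[0, 0, 0] = 4.0
ref = np.zeros((4, 4, 4), dtype=bool)
ref[0, 0, 0] = True
gm = np.full((4, 4, 4), 0.6)
gm[3] = 0.0                          # last slab treated as non-brain
zeros = np.zeros((4, 4, 4))
out = suvr_and_mask(pet, ref, gm, zeros, zeros)
```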

Table of contents

Abstract

Résumé

Scientific production

Contents

List of Figures

List of Tables

List of Abbreviations

Introduction

1 Machine learning from neuroimaging data to assist the diagnosis of Alzheimer’s disease

1.1 Alzheimer’s disease

1.2 Interest of ML for identification of AD

1.3 Modalities involved in AD diagnosis

1.3.1 Mono-modal approaches

1.3.1.1 Anatomical MRI

1.3.1.2 PET

1.3.1.3 Diffusion MRI

1.3.1.4 Functional MRI

1.3.1.5 Non-imaging modalities

1.3.2 Multimodal approaches

1.3.2.1 Anatomical MRI and FDG PET

1.3.2.2 Other combinations

1.3.2.3 Combination with non-imaging modalities

1.4 Features

1.4.1 Voxel-based features

1.4.2 Regional features

1.4.3 Graph features

1.5 Dimensionality reduction

1.5.1 Feature selection

1.5.1.1 Univariate feature selection

1.5.1.2 Multivariate feature selection

1.5.2 Feature transformation

1.6 Learning approaches

1.6.1 Logistic regression

1.6.2 Support vector machine

1.6.3 Ensemble learning

1.6.4 Deep neural networks

1.6.5 Patch-based grading

1.6.6 Multimodality approaches

1.7 Validation

1.7.1 Cross-validation

1.7.2 Performance metrics

1.8 Datasets

1.9 Conclusion

2 Accuracy of MRI classification algorithms in a tertiary memory center clinical routine cohort

2.1 Abstract

2.2 Introduction

2.3 Material and Methods

2.3.1 Participants

2.3.2 MRI acquisition

2.3.3 Fully automated volumetry software

2.3.4 Automatic classification using SVM

2.3.4.1 Preprocessing: extraction of whole gray matter maps

2.3.4.2 SVM classification

2.3.5 Radiological classification

2.4 Results

2.4.1 Automated segmentation software

2.4.2 Automatic SVM classification from whole-brain gray matter maps

2.4.3 Automatic SVM classification from AVS volumes

2.4.4 Radiological classification

2.5 Discussion

2.6 Conclusion

2.7 Supplementary material

3 Reproducible evaluation of classification methods in Alzheimer’s disease: framework and application to MRI and PET data

3.1 Abstract

3.2 Introduction

3.3 Materials

3.3.1 Datasets

3.3.2 Participants

3.3.2.1 ADNI

3.3.2.2 AIBL

3.3.2.3 OASIS

3.3.3 Imaging data

3.3.3.1 ADNI

3.3.3.2 AIBL

3.3.3.3 OASIS

3.4 Methods

3.4.1 Converting datasets to a standardized data structure

3.4.1.1 Conversion of the ADNI dataset to BIDS

3.4.1.2 Conversion of the AIBL dataset to BIDS

3.4.1.3 Conversion of the OASIS dataset to BIDS

3.4.2 Preprocessing pipelines

3.4.2.1 Preprocessing of T1-weighted MR images

3.4.2.2 Preprocessing of PET images

3.4.3 Feature extraction

3.4.4 Classification models

3.4.4.1 Linear SVM

3.4.4.2 Logistic regression with L2 regularization

3.4.4.3 Random forest

3.4.5 Evaluation strategy

3.4.5.1 Cross-validation

3.4.5.2 Metrics

3.4.6 Classification experiments

3.5 Results

3.5.1 Influence of the atlas

3.5.2 Influence of the smoothing

3.5.3 Influence of the type of features

3.5.4 Influence of the classification method

3.5.5 Influence of the partial volume correction of PET images

3.5.6 Influence of the magnetic field strength

3.5.7 Influence of the class imbalance

3.5.8 Influence of the dataset

3.5.9 Influence of the training dataset size

3.5.10 Influence of the diagnostic criteria

3.5.11 Computation time

3.6 Discussion

3.7 Conclusions

4 Reproducible evaluation of methods for predicting progression to Alzheimer’s disease from clinical and neuroimaging data

4.1 Introduction

4.2 Materials and methods

4.2.1 Data

4.2.2 Data conversion

4.2.3 Preprocessing and feature extraction

4.2.4 Age correction

4.2.5 Classification approaches

4.2.5.1 Classification using clinical data

4.2.5.2 Image-based classification

4.2.5.3 Integrating clinical and imaging data

4.2.5.4 Integrating amyloid status

4.2.5.5 Prediction at different time-points

4.2.6 Validation

4.3 Results

4.3.1 Classification using clinical data

4.3.2 Integration of imaging and clinical data

4.3.3 Integration of amyloid status

4.3.4 Prediction at different time-points

4.4 Conclusions

Conclusion & Perspectives

A Reproducible evaluation of diffusion MRI features for automatic classification of patients with Alzheimer’s disease

Bibliography