Table of contents

1 Introduction

1.1 More and more multimedia data

1.2 The need to organize

1.3 Examples of applications

1.4 Context, goals and contributions of this thesis

2 State of the art

2.1 Generalities about Content-Based Video Retrieval

2.2 General framework for semantic indexing

2.3 Descriptors for video content

2.3.1 Color descriptors

2.3.2 Texture descriptors

2.3.3 Audio descriptors

2.3.4 Bag of Words descriptors based on local features

2.3.5 Descriptors for action recognition

2.4 Information fusion strategies

2.5 Proposed improvements

2.6 Standard datasets for concept detection

2.6.1 The KTH human action dataset

2.6.2 The Hollywood 2 human actions and scenes dataset

2.6.3 The TRECVid challenge: Semantic Indexing task

3 Retinal preprocessing for SIFT/SURF-BoW representations

3.1 Behaviour of the human retina model

3.1.1 The parvocellular channel

3.1.2 The magnocellular channel

3.1.3 Area of interest segmentation

3.2 Proposed SIFT/SURF retina-enhanced descriptors

3.2.1 Keyframe based descriptors

3.2.2 Temporal window based descriptors with salient area masking

3.3 Experiments

3.3.1 Preliminary experiments with OpponentSURF

3.3.2 Experiments with OpponentSIFT

3.4 Conclusions

4 Trajectory-based BoW descriptors

4.1 Functioning

4.1.1 Choice of points to track

4.1.2 Tracking strategy

4.1.3 Camera motion estimation

4.1.4 Replenishing the set of tracked points

4.1.5 Trajectory selection and trimming

4.1.6 Trajectory descriptors

4.1.7 Integration into the BoW framework

4.2 Preliminary experiments on the KTH dataset

4.2.1 Experimental setup

4.2.2 Results

4.2.3 Conclusions

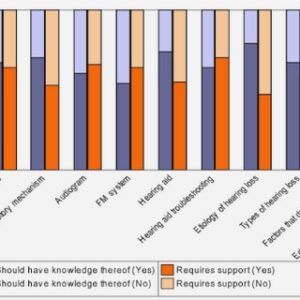

4.3 Experiments on TRECVid

4.3.1 Experimental setup

4.3.2 Differential descriptors

4.3.3 Results

4.3.4 Conclusions

4.4 Global conclusion on trajectories

5 Late fusion of classification scores

5.1 Introduction

5.2 Choice of late fusion strategy

5.3 Proposed late fusion approach

5.3.1 Agglomerative clustering of experts

5.3.2 AdaBoost score-based fusion

5.3.3 AdaBoost rank-based fusion

5.3.4 Weighted average of experts

5.3.5 Best expert per concept

5.3.6 Combining fusions

5.3.7 Improvements: higher-level fusions

5.4 Experiments

5.4.1 Fusion of retina and trajectory experts

5.4.2 Fusion of diverse IRIM experts

5.5 Conclusion

6 Conclusions and perspectives

6.1 A retrospective of contributions

6.1.1 Retina-enhanced SIFT BoW descriptors

6.1.2 Trajectory BoW descriptors

6.1.3 Late fusion of experts

6.2 Perspectives for future research

7 Résumé

7.1 Introduction

7.1.1 The multimedia explosion

7.1.2 The need to organize

7.1.3 Context of the work and contribution

7.2 State of the art

7.2.1 The TRECVid video dataset

7.2.2 The processing pipeline

7.2.3 Descriptors for video content

7.2.4 Late fusion strategies

7.2.5 Proposed improvements

7.3 Retinal preprocessing for SIFT/SURF BoW descriptors

7.3.1 The retina model

7.3.2 Proposed enhanced SIFT/SURF BoW descriptors

7.3.3 Validation on the TRECVid 2012 dataset

7.3.4 Conclusions

7.4 Trajectory Bag of Words descriptors

7.4.1 Principle

7.4.2 Trajectory descriptors

7.4.3 Validation on the KTH dataset

7.4.4 Experiments on the TRECVid SIN 2012 dataset

7.4.5 Conclusion

7.5 Late fusion of classification scores

7.5.1 Principles

7.5.2 Results on the TRECVid 2013 dataset

7.5.3 Conclusion on fusion

7.6 Conclusions and perspectives