Table of contents

I Background

1 Machine learning with neural networks and mean-field approximations (Context and motivation)

1.1 Neural networks for machine learning

1.1.1 Supervised learning

1.1.2 Unsupervised learning

1.2 A brief history of mean-field methods for neural networks

2 Statistical inference and statistical physics (Fundamental theoretical frameworks)

2.1 Statistical inference

2.1.1 Statistical models representations

2.1.2 Some inference questions in neural networks for machine learning

2.1.3 Challenges in inference

2.2 Statistical physics of disordered systems

3 Selected overview of mean-field treatments: free energies and algorithms (Techniques)

3.1 Naive mean-field

3.1.1 Variational derivation

3.1.2 When does naive mean-field hold true?

3.2 Thouless, Anderson and Palmer equations

3.2.1 Outline of the derivation

3.2.2 Illustration on the Boltzmann machine and important remarks

3.2.3 Generalizing the Georges-Yedidia expansion

3.3 Belief propagation and approximate message passing

3.3.1 Generalized linear model

3.3.2 Belief Propagation

3.3.3 (Generalized) approximate message passing

3.4 Replica method

3.4.1 Steps of a replica computation

3.4.2 Assumptions and relation to other mean-field methods

3.5 Extensions of interest for this thesis

3.5.1 Streaming AMP for online learning

3.5.2 A special case of GAMP: Cal-AMP

3.5.3 Algorithms and free energies beyond i.i.d. matrices

3.5.4 Model composition

II Contributions

4 Mean-field inference for (deep) unsupervised learning

4.1 Mean-field inference in Boltzmann machines

4.1.1 Georges-Yedidia expansion for binary Boltzmann machines

4.1.2 Georges-Yedidia expansion for generalized Boltzmann machines

4.1.3 Application to RBMs and DBMs

4.1.4 Adaptive-TAP fixed points for binary BM

4.2 Applications to Boltzmann machine learning with hidden units

4.2.1 Mean-field likelihood and deterministic training

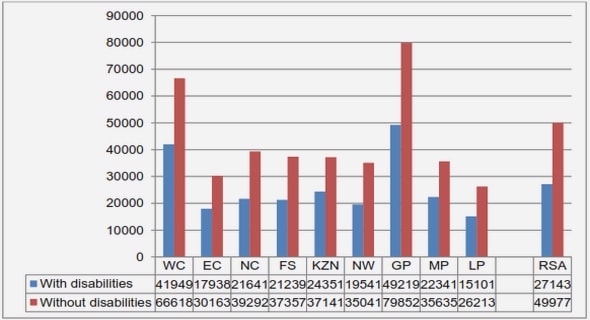

4.2.2 Numerical experiments

4.3 Application to Bayesian reconstruction

4.3.1 Combining CS and RBM inference

4.3.2 Numerical experiments

4.4 Perspectives

5 Mean-field inference for information theory in deep supervised learning

5.1 Mean-field entropy for multi-layer models

5.1.1 Extension of the replica formula to multi-layer networks

5.1.2 Comparison with non-parametric entropy estimators

5.2 Mean-field information trajectories over training of deep networks

5.2.1 Tractable deep learning models

5.2.2 Training experiments

5.3 Further investigation of the information theoretic analysis of deep learning

5.3.1 Mutual information in noisy trainings

5.3.2 Discussion

6 Towards a model for deep Bayesian (online) learning

6.1 Cal-AMP revisited

6.1.1 Derivation through AMP on vector variables

6.1.2 State Evolution for Cal-AMP

6.1.3 Online algorithm and analysis

6.2 Experimental validation on gain calibration

6.2.1 Setting and update functions

6.2.2 Offline results

6.2.3 Online results

6.3 Matrix factorization model and multi-layer networks

6.3.1 Constrained matrix factorization

6.3.2 Multi-layer vectorized AMP

6.3.3 AMP for constrained matrix factorization

6.4 Towards the analysis of learning in multi-layer neural networks

Conclusion and outlook

Appendix

A Vector Approximate Message Passing for the GLM

B Update functions for constrained matrix factorization

Bibliography