Reference frame manipulations

Spatial navigation is an active process that requires an accurate and dynamic representation of our location, which is given by the combination of external (allothetic) and self-motion (idiothetic) cues. The allothetic/idiothetic distinction characterizes the type of information conveyed. Reference frames, on the other hand, characterize the way this information is represented by the navigator. A reference frame defines the framework in which spatial information (e.g., the position of an object) is represented relative to an origin point. Depending on where the origin of the reference coordinate system is anchored, the same information can be encoded egocentrically or allocentrically. If the reference frame is centered on the subject (e.g., on a body part such as the head), the representation is said to be egocentric. If the origin of the framework is a fixed point of the environment (e.g., a corner of the room), the representation is called allocentric. As shown in Figure 5a, the same allothetic spatial information (e.g., the position of a visual cue in the environment) can be represented either egocentrically (e.g., relative to the body of the navigator) or allocentrically (e.g., relative to the room corner). Likewise, as shown in Figure 5b, idiothetic signals (e.g., vestibular information) can be used to describe self-motion either egocentrically or relative to an allocentric reference frame. Bidirectional transformations between egocentric and allocentric reference frames are necessary both to build a representation of space and to produce egocentrically coded body movements from information held in an allocentric reference frame (e.g., “I have to turn right when I get to the post office”).
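The post-office example can be made concrete with a small coordinate transformation. The sketch below is illustrative only and is not taken from the studies discussed here: it assumes a flat two-dimensional room whose allocentric origin is a room corner, a navigator pose given as (x, y, heading) with angles in radians measured counterclockwise, and hypothetical function names (`allo_to_ego`, `turn_instruction`). It converts an allocentrically stored goal position into an egocentric distance and bearing, then into a body-centred turn command.

```python
import math


def allo_to_ego(target_xy, pose):
    """Express an allocentric target position in egocentric (body-centred) terms.

    Assumptions (illustrative): target_xy is (x, y) in room coordinates with the
    origin at a room corner; pose is (x, y, heading) of the navigator, heading in
    radians counterclockwise from the room's x axis.
    Returns (distance, bearing), bearing in [-pi, pi], positive = to the left.
    """
    tx, ty = target_xy
    px, py, heading = pose
    dx, dy = tx - px, ty - py
    distance = math.hypot(dx, dy)
    # Direction of the target in the room frame, minus the navigator's own heading.
    bearing = math.atan2(dy, dx) - heading
    # Wrap the angle back into [-pi, pi].
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))
    return distance, bearing


def turn_instruction(bearing, tolerance=math.radians(10)):
    """Map an egocentric bearing onto a coarse body-centred motor command."""
    if bearing > tolerance:
        return "turn left"
    if bearing < -tolerance:
        return "turn right"
    return "go straight"


# Hypothetical scene: the navigator stands at the post office (5, 5) facing +y ("north"),
# and the goal is stored allocentrically at (10, 5), i.e. to the east.
distance, bearing = allo_to_ego((10, 5), (5, 5, math.pi / 2))
print(round(distance, 3), turn_instruction(bearing))  # 5.0 turn right
```

The same allocentric goal would yield "turn left" if the navigator faced south instead, which is why the egocentric command has to be recomputed from the current pose rather than stored directly.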

Head-to-body and head-to-world transformations

Vestibular information is first detected in the inner ear, by the otolith organs for the linear component and by the semicircular canals for the rotational component. Because the receptor cells are fixed to the cranial bone, vestibular signals are detected in a head-centered reference frame. This means, for example, that on the basis of semicircular canal signals alone, a rotation of the upright head about the vertical axis cannot be distinguished from the same rotation of the head, lying horizontally, about a horizontal axis (Figure 6). In other words, semicircular canal information alone does not discriminate between an upright and a horizontal body position. To compute the movement of the body in space, vestibular information needs to be integrated relative both to the body (taking into account the position of the head relative to the body, given by the neck curvature) and to the world, converting the signal initially in head-fixed coordinates into a signal in world-frame coordinates (taking gravity into account) (Rochefort et al., 2013). These computations are not necessarily sequential and result from the integration of different types of signals. Several recent studies have shown that these two reference frame transformations occur in different cerebellar subregions.
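The ambiguity illustrated in Figure 6, and the two transformations that resolve it, can be sketched as a chain of rotation matrices applied to the angular velocity sensed by the canals. This is a minimal illustration under stated assumptions, not a model of the cerebellar computation: the axis conventions (head x forward, y toward the left ear, z up through the top of the head; world Z along the earth vertical), the postures, and all names are hypothetical. In each matrix, the columns give where a head (or body) axis points in the parent frame.

```python
def mat_vec(R, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def mat_mul(A, B):
    """Compose two 3x3 rotations: (A @ B) applied to v applies B first, then A."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# Hypothetical orientations (columns = child axes expressed in the parent frame).
HEAD_ON_BODY_STRAIGHT = IDENTITY  # neck not bent: head frame aligned with body frame
BODY_UPRIGHT = IDENTITY           # body frame aligned with the world frame
BODY_SUPINE = [[0, 0, -1],        # lying on the back, face up:
               [0, 1, 0],         # forward axis -> earth vertical (Z),
               [1, 0, 0]]         # up-through-head axis -> earth horizontal (-X)


def canal_to_world(omega_head, head_on_body, body_in_world):
    """Head-to-body (neck) transform first, then body-to-world (gravity-referenced) transform."""
    head_in_world = mat_mul(body_in_world, head_on_body)
    return mat_vec(head_in_world, omega_head)


# Identical canal signal in both postures: 1 rad/s about the head's z axis.
canal_signal = [0.0, 0.0, 1.0]
print(canal_to_world(canal_signal, HEAD_ON_BODY_STRAIGHT, BODY_UPRIGHT))  # [0.0, 0.0, 1.0]
print(canal_to_world(canal_signal, HEAD_ON_BODY_STRAIGHT, BODY_SUPINE))   # [-1.0, 0.0, 0.0]
```

The head-frame input is the same in both calls; only the assumed head and body orientations differ. This is why an estimate of orientation relative to gravity, which the canals do not provide, is needed to tell a rotation about the vertical axis from one about a horizontal axis.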

Translation between whole-body egocentric and allocentric reference frames

Egocentric-to-allocentric reference frame manipulation consists of transforming information coded in egocentric coordinates into an observer-independent representation.
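What "observer-independent" means here can be shown with a short sketch. It assumes, for illustration only, a flat two-dimensional room with its origin at a corner, an observer pose given as (x, y, heading) and an egocentric observation given as (distance, bearing), with angles in radians measured counterclockwise. The function name `ego_to_allo` and the scene are hypothetical. Two observers at different places and facing different directions record different egocentric observations of the same landmark, yet both transform to the same allocentric coordinates.

```python
import math


def ego_to_allo(distance, bearing, pose):
    """Convert an egocentric observation into allocentric room coordinates.

    Assumptions (illustrative): pose is (x, y, heading) of the observer in room
    coordinates; bearing is measured from the observer's heading, counterclockwise
    (positive = to the left), in radians.
    """
    px, py, heading = pose
    direction = heading + bearing  # direction of the landmark in the room frame
    return (px + distance * math.cos(direction), py + distance * math.sin(direction))


# Hypothetical landmark at (10, 5), seen by two observers who both face +y.
obs_a = ego_to_allo(5.0, -math.pi / 2, (5.0, 5.0, math.pi / 2))  # 5 m to observer A's right
obs_b = ego_to_allo(5.0, 0.0, (10.0, 0.0, math.pi / 2))          # 5 m straight ahead of B

print([round(c, 6) for c in obs_a])  # [10.0, 5.0]
print([round(c, 6) for c in obs_b])  # [10.0, 5.0]
```

The egocentric inputs differ (a right-hand bearing versus straight ahead), but the allocentric output is the same point, which is the property that makes an allocentric code usable independently of the navigator's current position and heading.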

The retrosplenial cortex is thought to be involved in both allocentric-to-egocentric and egocentric-to-allocentric transformations (Burgess et al., 2001a; Byrne et al., 2007; Vann et al., 2009). Retrosplenial inactivation has been shown to impair spatial memory in rodents (for review, see Troy Harker & Whishaw, 2004), but the magnitude of these spatial deficits is smaller than that of the deficits associated with hippocampal damage. The full impact of retrosplenial cortex lesions appears when animals are forced to shift modes of spatial learning, for example from dark to light (Cooper & Mizumori, 1999) or from local to distal cues (Vann & Aggleton, 2004; Pothuizen et al., 2008).

In humans, a functional imaging study specifically identified the retrosplenial cortex as being involved in acquiring allocentric knowledge of an environment from ground-level observations (Wolbers & Büchel, 2005). Subjects repeatedly explored a virtual environment composed of intersections and shops and were then tested on their knowledge of its topographical organization. The retrosplenial cortex was the only structure whose activity paralleled behavioural measures of the subjects' map expertise. In another study, Spiers and Maguire (2006) scanned participants navigating through a realistic virtual-reality simulation of central London to determine when, during navigation, different brain areas are engaged. Activity in the retrosplenial cortex increased specifically when new topographical information was acquired or when topographical representations needed to be updated, integrated or manipulated for route planning (Spiers & Maguire, 2006). This is consistent with the proposal that the retrosplenial cortex acts as a short-term buffer for translating between egocentric and allocentric representations (Burgess et al., 2001a; Byrne et al., 2007).

Place information: element of the mental representation of space

This section describes the properties of three types of cells: head direction cells, grid cells and place cells. These cells were all discovered in rats, but two of them have since been identified in humans. In 2003, Ekstrom et al. recorded from electrodes implanted in several brain regions of epileptic patients as they navigated a virtual town. The authors found cells that could be confidently classified as place cells, the majority of which were located in the hippocampal region (Ekstrom et al., 2003). In humans, grid cells have also been found in the entorhinal cortex and in the cingulate cortex, using functional imaging (Doeller et al., 2010) and electrophysiological recordings (Jacobs et al., 2013).

Table of contents

FOREWORD

INTRODUCTION

PART I Navigation and Mental representation of space

1. Mental representation of space

1.1. Tolman’s “Cognitive maps”

1.2. The discovery of hippocampal place cells, substrate for Tolman’s cognitive map

1.3. From cognitive maps to mental representations of space

2. Building a mental representation of space – processes and neural substrates

2.1. Multi-modal information integration

2.1.1. Allothetic information

2.1.2. Idiothetic information

2.2. Reference frame manipulations

2.2.1. Head-to-body and head-to-world transformations

2.2.2. Translation between whole-body egocentric and allocentric reference frames

2.3. Place information: element of the mental representation of space

2.3.1. Head-direction cells

2.3.2. Grid cells

2.3.3. Boundary cells

2.3.4. Identifying a place: hippocampal place cells

2.3.5. Recognizing a place: pattern completion, pattern separation

3. Using the mental representation of space – processes and neural substrates

3.1. Goal information

3.2. Planning & Decision-making

3.3. Organization of action sequences

PART II Neural Dynamics across Task Learning

1. Learning stages

2. Network dynamics across learning

2.1. Imaging results

2.2. Theoretical models

3. Learning stages in rodent studies

3.1. Neural dynamics in goal-directed and habitual behaviours

3.2. Neural dynamics in spatial learning

PART III From mental representations of space to spatial memory

1. Different forms of memory

1.1. Sensory memory

1.2. Short-term memory

1.3. Long-term memory – storage and consolidation

2. Different memory systems in long-term memory

2.1. Non-declarative memory

2.2. Declarative memory

2.2.1. Episodic memory

2.2.2. Semantic memory

PART IV: Navigation Strategies: Identifying the mental representation of space

1. Navigation strategies and computational processes

1.1. Path integration

1.2. Goal or Beacon approaching

1.3. Stimulus-response strategy

1.4. Map-based strategy

1.5. Reinforcement learning to model stimulus-response and map-based strategies

2. Tasks to identify strategies and their neural basis

2.1. Stimulus-response and map-based strategies

2.2. The starmaze task and sequence-based navigation

3. Sequence-based navigation (or sequential egocentric strategy): what computational processes and what neural basis?

3.1. Sequential egocentric strategy

3.2. Neural basis of sequence learning in rodents

3.3. Hippocampus and sequential activity

3.3.1. Retrospective and prospective firing

3.3.2. Sequence representation

3.3.3. Time cells

METHODS FOCUS: FOS IMAGING

1. Fos imaging

2. Network analysis

2.1. Functional connectivity

2.2. Graph theory

Article 1: Complementary Roles of the Hippocampus and the Dorsomedial Striatum during Spatial and Sequence-Based Navigation Behaviour

1. Introduction

2. Main results

3. Discussion

4. Article

Article 2, in preparation: Functional connectome and learning algorithm for sequence-based navigation

1. Introduction

2. Methods

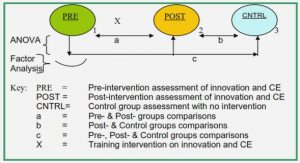

2.1. Behavioural study

2.2. Fos imaging

2.3. Computational learning study

2.4. Statistics

3. Results

4. Discussion

5. Supplementary material

GENERAL DISCUSSION

1. Hippocampus and sequence-based navigation

2. Model-free reinforcement learning with memory and sequence-based navigation

3. Network underlying sequence-based navigation

BIBLIOGRAPHY