Weakly Supervised Learning

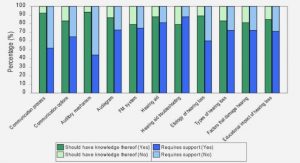

Despite excellent performance, deep ConvNets carry limited invariance properties, i.e. small shift invariance through pooling layers (Weng et al. 1992; Durand, Thome, et al. 2015; Shang et al. 2016; Blot et al. 2016). This raises issues when transferring networks pre-trained for image classification, e.g. on ImageNet where objects are centered and well identified, to other tasks, such as object detection, where objects can appear in any number and at any location and scale. To optimally perform domain adaptation in this context, it becomes necessary to align informative image regions, e.g. by detecting objects (Oquab et al. 2015; Jaderberg et al. 2015), parts (N. Zhang, Donahue, et al. 2014; N. Zhang, Paluri, et al. 2014; B. Zhou, Khosla, et al. 2016; Kulkarni et al. 2016) or context (Gkioxari et al. 2015; Durand, Thome, et al. 2016). Although some works incorporate more precise annotations during training, e.g. bounding boxes (Oquab et al. 2014; Girshick, Donahue, et al. 2014), the increased annotation cost prevents their widespread use, especially for large datasets and pixel-wise labeling, i.e. segmentation masks (Bearman et al. 2016), as illustrated in Figure 1.6.

An option to gain strong invariance is to consider Weakly Supervised Learning (WSL), where image regions are explicitly aligned to learn localized latent representations, better suited to transfer between tasks and datasets. WSL is a framework for learning problems where the available supervision contains only partial information about the expected outputs. In the general framework presented in Section 1.2.1, this means that labels are now written as $y^\star = (y^\star_o, y^\star_h)$, where only the observed label $y^\star_o$ is included in the dataset $\mathcal{D} = \{(x_i, y^\star_{o,i})\}_{i=1}^N$, while the hidden part $y^\star_h$ is still evaluated by the metric and therefore needs to be predicted. A common example is weakly supervised detection or segmentation, where the annotations are at image level only, i.e. presence or absence of object class in image, but results are to be given at instance or pixel level. WSL methods therefore provide ways to learn latent representations suited to the task from weaker supervision.

WSL problems are often dealt with through a Multiple-Instance Learning (MIL) framework (Dietterich et al. 1997) as it provides several ways of selecting regions and extracting relevant information. In MIL, an image is considered as a bag of instances (regions) with annotations at the bag level (i.e. image level) only, and the main issue concerns the aggregation function to pool instance scores into a global prediction, in order to learn from available supervision.
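As a minimal sketch of this setting, the snippet below pools hypothetical region scores into one image-level score and applies a bag-level loss. The function names, the hinge loss and the example scores are assumptions made for illustration; they are not taken from any of the cited methods.

```python
from typing import Callable, Sequence


def bag_score(instance_scores: Sequence[float],
              aggregate: Callable[[Sequence[float]], float] = max) -> float:
    """Pool instance-level (region) scores into one bag-level (image) score."""
    if not instance_scores:
        raise ValueError("a bag must contain at least one instance")
    return aggregate(instance_scores)


def hinge_loss(score: float, label: int) -> float:
    """Bag-level hinge loss for a label in {-1, +1} (an illustrative choice)."""
    return max(0.0, 1.0 - label * score)


# Hypothetical region scores for one image annotated as positive.
region_scores = [-1.2, 0.3, 2.0, -0.5]
image_score = bag_score(region_scores)  # default aggregation: max pooling
loss = hinge_loss(image_score, +1)
```

Only the image-level label enters the loss; which region is responsible for the prediction is decided by the aggregation function, which is why its choice is the central design question in MIL.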

Several approaches use a MIL framework for image classification, with differences in handling positive and negative instances (Andrews et al. 2003; Felzenszwalb et al. 2010; Durand, Thome, et al. 2015; Durand, Thome, et al. 2018b). Different strategies have been explored to combine deep models and variants of MIL, with various region selection mechanisms (Oquab et al. 2015; W. Li et al. 2015; B. Zhou, Khosla, et al. 2016; Durand, Thome, et al. 2016). MIL is also used in object detection by the seminal work on the Deformable Part-based Model (DPM) (Felzenszwalb et al. 2010). It introduces a Latent Support Vector Machine (LSVM) classifier to detect parts that are dynamically located. This part-based representation fits objects tightly and is especially useful to model non-rigid objects. This idea has also been introduced into deep models with direct generalizations of DPM to ConvNets (Girshick, Iandola, et al. 2015; Wan et al. 2015), although with limited success compared to other DL approaches.

Multi-Task Learning

As noted in Section 1.2.1, overfitting is a major issue in ML, especially when training data are scarce. Existing solutions include using additional data or supervision. When these are of a different nature, e.g. images from another modality or supervision for another task, they bring complementary information that can be exploited to regularize models.

The goal of Multi-Task Learning (MTL) (Caruana 1997) is to use a single model to perform multiple related but distinct tasks. It learns a shared representation that generalizes to all addressed tasks by leveraging different objectives and datasets. Intuitively, some tasks directly relate to each other, e.g. depth and surface normal estimation (Zamir et al. 2018), so knowledge gained in solving one should help solve the others by reusing learned insights. This is analyzed in Taskonomy (task-taxonomy, see Figure 1.7) (Zamir et al. 2018), which builds a graph of the amount of transfer between various common CV tasks, i.e. their synergies, in a principled way.

An MTL problem is defined as several learning tasks combined together, to be learned by a single model. The general framework from Section 1.2.1 can be slightly modified to suit the MTL setting. Each example $x$ is associated with a label $y^\star_t$ for each task $t$. The dataset then becomes $\mathcal{D} = \{(x_i, y^\star_{1,i}, \dots, y^\star_{T,i})\}_{i=1}^N$ for $T$ tasks. A similar change needs to be applied to the loss function, with a dedicated loss $\ell_t$ for each task; these are often summed, i.e. $\ell(y, y^\star) = \sum_{t=1}^T \ell_t(y_t, y^\star_t)$. MTL can be applied to multiple datasets, where each input is associated with a label for a given task, depending on the dataset the example comes from (Kokkinos 2017). It is also possible to use MTL on a single dataset annotated for multiple tasks, where ground truth labels are the concatenations of labels from all tasks (Zamir et al. 2018). In order to perform well, it is essential to encode hidden regularities and correlations between tasks, to benefit from synergy between them. This MTL strategy has been shown to improve results of individual tasks when learned together under certain conditions (Caruana 1997; Kokkinos 2017; Zamir et al. 2018). It also results in an efficient use of computational resources, since only one slightly bigger model is needed for all tasks simultaneously, compared to a different one used for each task separately. Notably, this structure is employed in object detection, decomposed into two complementary tasks (object classification and bounding box localization) (Girshick 2015), or even more when also learning region proposal generation (S. Ren et al. 2015).
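The summed loss can be sketched as follows, under simplifying assumptions: predictions and labels are scalars, the task names and per-task losses are hypothetical, and a missing label (an example drawn from a dataset that is not annotated for that task) simply contributes nothing to the sum.

```python
from typing import Callable, Dict, Optional

Loss = Callable[[float, float], float]


def squared_error(pred: float, target: float) -> float:
    return (pred - target) ** 2


def absolute_error(pred: float, target: float) -> float:
    return abs(pred - target)


def multitask_loss(preds: Dict[str, float],
                   targets: Dict[str, Optional[float]],
                   losses: Dict[str, Loss]) -> float:
    """Sum the per-task losses, skipping tasks with no label for this example."""
    total = 0.0
    for task, loss_fn in losses.items():
        target = targets.get(task)
        if target is None:  # this example is not annotated for the task
            continue
        total += loss_fn(preds[task], target)
    return total


# Hypothetical tasks and values, for illustration only.
losses = {"depth": squared_error, "normal": absolute_error}
preds = {"depth": 2.0, "normal": 0.5}
full = multitask_loss(preds, {"depth": 1.0, "normal": 1.0}, losses)
partial = multitask_loss(preds, {"depth": 1.0, "normal": None}, losses)
```

The first call corresponds to a single dataset annotated for every task, the second to the multi-dataset case where only some labels are available for a given example.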

Although MTL is an old topic in ML, it is currently being intensively revisited with DL. Deep MTL solutions consist in using different tasks and datasets and sharing some intermediate representations of the networks between the tasks, which are optimized jointly (Bilen et al. 2016a; Kokkinos 2017; Meyerson et al. 2018). The crux of the matter is to define where and how to share parameters between tasks, depending on the applications. The common approach to MTL with DNNs is to have a feature extraction trunk shared between all tasks, with a dedicated prediction head for each task (Meyerson et al. 2018). However, this might not be optimal, as different tasks often require features at different levels of abstraction, so the shared layers might be difficult to learn effectively. Some approaches focus on learning the optimal MTL architectures (Misra et al. 2016; Lu et al. 2017), while others explore relating every layer of the networks (Yang et al. 2017) or relating layers at various depths to account for semantic variations between tasks (Meyerson et al. 2018). In UberNet (Kokkinos 2017), the goal is to learn a universal network which can share various low- and high-level dense prediction tasks, resulting in a very generic representation of images.

Motivations

Handcrafted features should be targeted to a particular task, so that they encode all necessary information for it while dropping all distracting factors. However, with the DL revolution of the last few years, features are no longer handcrafted but learned end-to-end by a network. There is no longer total control over what features encode; this is left to be optimized by the model according to the learning objective, guided by the network and its structure. The focus has thus shifted from feature design to network architecture design. A desired property of networks is to learn representations suited to the tasks at hand. This is achieved by integrating dedicated layers into the architecture, adapted to the level of available supervision, so that all relevant information is encoded by the model. This thesis studies three questions about network architecture design in order to improve extraction of latent information from various levels of supervision:

Global pooling function. The main question in MIL and its variants is to define a global pooling function to convert all predictions at the instance level to a global one at the bag level. In WSL, the supervision is given at the image level only, i.e. presence or absence of classes in images, while the tasks might require predictions at the region level, e.g. object detection, or pixel-level, e.g. semantic segmentation. A structured pooling function considering the spatial nature of regions is then needed to learn localized representations that generalize to spatial tasks.

This question has been extensively studied in the context of deep ConvNets. The standard approach simply keeps the max-scoring region, i.e. the most informative one, for prediction (Oquab et al. 2015). It was extended to include several regions in the top instances model (W. Li et al. 2015) and with the Log-Sum-Exp pooling function (Sun et al. 2016), or all available regions with global average pooling (B. Zhou, Khosla, et al. 2016). Recent introduction of negative evidence (Parizi et al. 2015; Durand, Thome, et al. 2015; Durand, Thome, et al. 2016) has improved performances by also selecting min-scoring regions, which add evidence against some classes and help distinguish between similar classes. This is illustrated with an example in Figure 1.8.
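The pooling functions discussed above can be compared on a single list of region scores. The sketch below is illustrative only: the exact formulations differ between the cited papers, the Log-Sum-Exp variant with a mean inside the logarithm and a sharpness parameter `r` is one common form chosen here as an assumption, and the max-plus-min function is a simple stand-in for negative evidence models.

```python
import math
from typing import Sequence


def max_pool(scores: Sequence[float]) -> float:
    """Keep only the max-scoring region."""
    return max(scores)


def average_pool(scores: Sequence[float]) -> float:
    """Global average pooling over all regions."""
    return sum(scores) / len(scores)


def top_k_pool(scores: Sequence[float], k: int) -> float:
    """Average of the k highest-scoring regions (top instances)."""
    if not 1 <= k <= len(scores):
        raise ValueError("k must be between 1 and the number of regions")
    return sum(sorted(scores, reverse=True)[:k]) / k


def log_sum_exp_pool(scores: Sequence[float], r: float = 1.0) -> float:
    """Smooth maximum: (1/r) * log(mean(exp(r * s))), computed stably."""
    m = max(scores)
    mean_exp = sum(math.exp(r * (s - m)) for s in scores) / len(scores)
    return m + math.log(mean_exp) / r


def max_min_pool(scores: Sequence[float]) -> float:
    """Max-scoring region plus min-scoring region (negative evidence)."""
    return max(scores) + min(scores)


scores = [3.0, 1.0, -2.0, 0.0]  # hypothetical region scores for one class
pooled = {
    "max": max_pool(scores),
    "average": average_pool(scores),
    "top2": top_k_pool(scores, 2),
    "lse": log_sum_exp_pool(scores),
    "max_min": max_min_pool(scores),
}
```

In this form, Log-Sum-Exp interpolates between average pooling (small `r`) and max pooling (large `r`), while the min term lowers the score of a class when at least one region strongly contradicts it.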

The same question can also arise in object detection. This time, annotations are at the object level, e.g. with bounding boxes, so the learned representations are naturally localized within images. However, latent localization can be achieved within boxes, at the part level, in order to learn finer representations. Again, an aggregation function is required to score object regions (bag level) according to their parts (instance level), so that part localization can be learned.

This is the approach taken by DPM (Felzenszwalb et al. 2010), which learns to localize parts around objects so that the representations better fit them, especially for deformable objects where bounding boxes of fixed shape might be less adapted.

Each class is represented by a root filter describing whole appearances of objects and a set of latent part filters to model local parts. These are localized around the root with a new LSVM, identifying the best position, i.e. the max-scoring one, for each filter among all possible locations. This is done in a latent way as there is no annotation regarding the parts. Final detection output then simply sums scores from root and all parts at selected locations. Most deep part-based networks are direct generalizations of DPM to DL (Girshick, Iandola, et al. 2015; Wan et al. 2015), using the same mechanism to select part location, but reformulated under the ConvNet structure.
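The scoring rule described above can be sketched as follows. This is a simplified illustration, not the DPM implementation: filter responses are given as small hypothetical 2D maps, each part is placed at its max-scoring cell, and the deformation cost that the original DPM uses to penalize part displacement is deliberately left out.

```python
from typing import List, Tuple

ResponseMap = List[List[float]]


def best_location(response: ResponseMap) -> Tuple[Tuple[int, int], float]:
    """Return ((row, col), score) of the max-scoring cell of a response map."""
    best_pos, best_score = (0, 0), response[0][0]
    for r, row in enumerate(response):
        for c, value in enumerate(row):
            if value > best_score:
                best_pos, best_score = (r, c), value
    return best_pos, best_score


def detection_score(root_score: float,
                    part_responses: List[ResponseMap]
                    ) -> Tuple[float, List[Tuple[int, int]]]:
    """Sum the root score and each part's score at its selected location."""
    placements = [best_location(resp) for resp in part_responses]
    total = root_score + sum(score for _, score in placements)
    return total, [pos for pos, _ in placements]


# Hypothetical responses of two part filters around one root location.
parts = [
    [[0.0, 0.5], [0.25, -1.0]],
    [[-1.0, -0.5], [0.25, -0.75]],
]
score, locations = detection_score(1.0, parts)
```

The part locations are never annotated: they are latent variables chosen by the maximization, which is what makes this a MIL problem at the object level.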

Imposing structure between MIL instances is a way to enhance their labeling by taking their relative interactions into account. This leads to finer models able to express more relations between all instances, that describe data more accurately and yield increased performances. Several works have studied this path, by explicitly modeling relations between instances from a bag (Z.-H. Zhou et al. 2009) or from different bags (Deselaers et al. 2010). However, this has not been fully explored in the context of deep ConvNets and could yield benefits.

In this thesis, we are interested in improving global pooling functions in WSL by combining positive and negative evidence in a finer way, and by introducing structure into them.

Efficient architecture for region computation. When computing local features with deep models, the most naive approach is to rescale each region into a fixed-size vector adapted to the ConvNet architecture, as done in early works for detection, e.g. R-CNN (Girshick, Donahue, et al. 2014), or scene understanding (He, X. Zhang, et al. 2015; Gong et al. 2014; Oquab et al. 2014; Durand, Thome, et al. 2015).

Since this approach is highly inefficient, there have been extensive attempts to use convolutional layers to share feature computation. Fully connected layers are beneficial in standard deep architectures, e.g. AlexNet (Krizhevsky et al. 2012) or VGG (Simonyan et al. 2015), but they remove spatial information and so should not be applied on whole images. They are still used at the end of the networks in order to maintain performance, but lead to a loss of efficiency. The common approach is to compute convolutional image-wise feature maps, to project image regions into feature regions, and to apply the fully connected layers to each region separately. This strategy has been employed in image classification with sliding window regions (Oquab et al. 2015; Durand, Thome, et al. 2016; B. Zhou, Khosla, et al. 2016), and object detection with region proposals (Girshick 2015; S. Ren et al. 2015).
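The projection step can be illustrated with a small sketch. It assumes a single-channel feature map stored as a list of rows, a hypothetical total network stride of 16 pixels, and a rounding convention (floor for the minimum corner, ceiling for the maximum corner) chosen for this example; actual detectors differ in these details.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max exclusive


def project_box(box: Box, stride: int) -> Box:
    """Map a box in image pixels to feature-map cells for a given stride."""
    x0, y0, x1, y1 = box
    # -(-a // b) is integer ceiling division.
    return (x0 // stride, y0 // stride, -(-x1 // stride), -(-y1 // stride))


def crop_features(feature_map: List[List[float]], box: Box,
                  stride: int) -> List[List[float]]:
    """Extract the feature region corresponding to an image region."""
    fx0, fy0, fx1, fy1 = project_box(box, stride)
    return [row[fx0:fx1] for row in feature_map[fy0:fy1]]


# A hypothetical 4x4 feature map computed once for a 64x64 image.
feature_map = [[float(4 * r + c) for c in range(4)] for r in range(4)]
region = crop_features(feature_map, (16, 0, 48, 32), stride=16)
```

The convolutional features are computed once per image and every region only pays for a crop, after which the per-region layers (not shown here) are applied.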

Another issue for deep part-based networks (Girshick, Iandola, et al. 2015; Wan et al. 2015) is that the fully connected layers cannot be applied after part optimization in a straightforward manner either, so they are removed completely from the network. However, this did not yield significant improvement compared to the previous state of the art in object detection, contrary to what DPM achieved.

A second line of work in this thesis is the design of efficient convolutional architectures to allow end-to-end learning from image regions in WSL or object parts in detection.

Learning with auxiliary supervision. Adding more supervision should further boost performance, as observed in MTL (Caruana 1997), by allowing other aspects of the data to be learned, e.g. new modalities. It could be especially useful when there are few training examples, as it should regularize training by making more data available to learn from. However, care must be taken when the additional supervisions are not as important as the main task, but only auxiliary in order to help training.

Common MTL approaches fail to acknowledge this difference between tasks, and give equal credit to all of them (Kokkinos 2017). This results in optimizing average performance across tasks, whereas prioritizing one task might yield better results for this particular task but lower results overall, as the capacities of models are bounded (Kokkinos 2017). Therefore, a way to break the symmetry between tasks during training is required.

Since additional auxiliary supervision is only relevant in order to train a better model, Learning Under Privileged Information (LUPI) (Vapnik and Vashist 2009) seems to be a suitable framework. Here, some additional information is available only during training, but not at inference time. LUPI also naturally breaks the symmetry between tasks, according to the availability of their annotations. Several deep LUPI approaches have been proposed (Hoffman et al. 2016; Shi et al. 2017), but representations from standard and privileged supervisions are often only loosely coupled. There is still a need for a way to extract latent structure from all supervisions and align them to enhance the representation of the main task.

The last direction of research presented in this thesis deals with exploring ways to fully leverage additional supervision to benefit a given task, especially in the situations with few training data.

Contributions and Outline

In the remainder of this thesis, we propose and evaluate solutions to the limitations of current approaches identified in Section 1.3. Throughout this thesis, we deal with increasing amounts of supervision, ranging from image-level annotations to additional auxiliary supervision.

Chapter 2: learning localized representations from image-level supervision

MIL and its variants form an important framework for WSL. The main component is a global aggregation function allowing learning at the instance level from supervision at the bag level. Recently, negative evidence models have been shown to bring complementary information to traditional MIL-based methods. We study ways to improve the pooling function with a better integration of negative evidence, as well as the introduction of structure between instances in order to learn richer models. In this chapter, we introduce WILDCAT, a model dedicated to learning localized representations from image-level supervision. For this, it uses a new global pooling function extending negative evidence models (Durand, Thome, et al. 2016) by differentiating contributions from positive and negative regions. It also builds structure with a multi-map transfer layer learning local features related to different class modalities in a weakly supervised way. This WSL strategy is integrated within a Fully Convolutional Network (FCN) to efficiently learn representations localized at the region level. WILDCAT is learned in an image classification setup, but can then be straightforwardly applied to WSL tasks, here pointwise object detection and semantic segmentation. This chapter is a joint work at equal contribution with Thibaut Durand.

Chapter 3: learning part-based representations from object-level supervision

Most deep approaches using part-based representations are either learned in a strongly supervised setting, i.e. using part annotations, or do not fully exploit deep representations learned in ConvNets. We investigate the use of FCNs (Long et al. 2015) to perform MIL-based learning of deformable part-based representations within deep ConvNets at the object level, in order to learn fine representations from bounding box annotations. We introduce DP-FCN, a region-based object detector using latent deformable part-based representations to model objects and better fit them, especially when these are non-rigid. It exploits deformations for both detection sub-tasks: in object classification with a new MIL RoI pooling function identifying locations of parts, extending the ideas from DPM, and in bounding box regression with a deformation-aware localization module, leveraging computed deformations to better estimate shapes of objects. A further improvement of DP-FCN explicitly models interactions between all parts of the same object, with a Conditional Random Field (CRF)-based formulation for classification, and with a bilinear product for localization. DP-FCN also solves the issue of part computation efficiency by extending the R-FCN architecture (Dai, Y. Li, et al. 2016) based on an FCN.

Chapter 4: learning task-related representations from auxiliary supervisions

Additional supervision is a way to increase performance and to reduce overfitting, especially with few training data. MTL seems an interesting framework to deal with this, but most approaches treat the additional data and the original data, which define the main task, equally. We tackle the problem of learning with auxiliary supervision, i.e. when results on the additional tasks are not the goal, and formalize it as a particular kind of MTL, named primary MTL, where the goal is the main task only. To address it, we introduce ROCK, a generic fusion block learning task-specific representations from various supervisions. ROCK is designed with two features tailored to primary MTL: it uses a residual connection between main and auxiliary tasks to make forward predictions explicitly impacted by the intermediate auxiliary representations, and incorporates intensive pooling operators in auxiliary sub-networks for maximizing complementarity of intermediate representations between tasks. It is applied to object detection with both geometric (depth and surface normal estimation) and semantic (scene classification) auxiliary tasks.

Lastly, Chapter 5 presents conclusions and discusses several directions for future work.

This thesis is based on the material published in the following papers:

Thibaut Durand, Taylor Mordan, Nicolas Thome, and Matthieu Cord (2017). “WILDCAT: Weakly Supervised Learning of Deep ConvNets for Image Classification, Pointwise Localization and Segmentation”. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR);

Taylor Mordan, Nicolas Thome, Matthieu Cord, and Gilles Henaff (2017). “Deformable Part-based Fully Convolutional Network for Object Detection”. In: Proceedings of the British Machine Vision Conference (BMVC), Best Science Paper Award;

Taylor Mordan, Nicolas Thome, Gilles Henaff, and Matthieu Cord (2018a). “End-to-End Learning of Latent Deformable Part-Based Representations for Object Detection”. In: International Journal of Computer Vision (IJCV);

Taylor Mordan, Nicolas Thome, Gilles Henaff, and Matthieu Cord (2018b). “Revisiting Multi-Task Learning with ROCK: a Deep Residual Auxiliary Block for Visual Detection”. In: Advances in Neural Information Processing Systems (NIPS).

Related Work

To perform WSL, it is necessary to identify relevant information and to aggregate it to single values to learn from global labels. One option to detect informative image regions is to revisit the Bag-of-Words (BoW) model (Sivic et al. 2003), described in Section 1.2.2, by using deep features as local region activations (He, X. Zhang, et al. 2015; Gong et al. 2014; Goh et al. 2014) or by designing specific BoW layers, e.g. NetVLAD (Arandjelovic et al. 2016).

Global pooling function in deep ConvNets. Different semantic categories are often characterized by multiple localized attributes corresponding to different class modalities (see for example head and legs for the dog class in Figure 2.1). Several strategies for deep models have then been explored to pool scores from regions of images to base decisions on informative elements (see Section 1.2.4).

Max pooling (Oquab et al. 2015) only selects the max-scoring region, as it should be the most discriminative for the task. However, this is prone to errors in the selection process, since only one region is kept, and all context is discarded. An alternative is to include all regions with Global Average Pooling (GAP) (B. Zhou, Khosla, et al. 2016), to bring robustness and take context into account, but this can also include many uninformative regions. Intermediate approaches only select some relevant regions as a trade-off: Log-Sum-Exp pooling (Sun et al. 2016), Learning from Label Proportion (Felix Yu et al. 2013; Lai et al. 2014), and top instance (W. Li et al. 2015). Negative evidence models (Parizi et al. 2015; Durand, Thome, et al. 2015; Durand, Thome, et al. 2016) explicitly select regions accounting for the absence of the class. These regions bring contextual information to help differentiate between similar classes, e.g. with background or other parts of objects. However, no method is uniformly better than all others; the best choice depends on the task (Durand, Thome, et al. 2018a).

WILDCAT also exploits negative evidence, but differentiates positive and negative terms. Its pooling function generalizes most other common ones. We further base our model on a Fully Convolutional Network (FCN) backbone architecture (He, X. Zhang, et al. 2016a) to allow for efficient end-to-end training of the whole network for all image regions simultaneously.

Other region selection mechanisms. Global pooling functions are not the only way of basing decisions on image regions. Similarly to WSL, attention-based models (K. Xu et al. 2015; Jaderberg et al. 2015; J. Zhang et al. 2016; H. Xu et al. 2016) select relevant regions to support decisions. However, WSL methods usually impose some structure on the selection process, while it is implicit in attention-based approaches.

Combining different regions has also been addressed through explicit context modeling (Gkioxari et al. 2015), or by modeling region correlations in RRSVM (Wei et al. 2016). For fine-grained recognition, multi-feature detection has been tackled in the fully supervised setting (H. Zhang et al. 2016; D. Lin et al. 2015; N. Zhang, Donahue, et al. 2014) and in WSL (Krause et al. 2014). In WILDCAT, we propose to build structure into representations through a multi-map transfer layer, which relates features to class modalities, as depicted in Figure 2.1.

Weakly Supervised Learning for localization and segmentation. Several recent works have applied WSL to the tasks of object localization and semantic segmentation.

WSL localization can be addressed with label co-occurrence information and a coarse-to-fine strategy based on deep feature maps to predict object locations (Bency et al. 2016). ProNet (Sun et al. 2016) uses a cascade of two networks: the first generates bounding boxes and the second classifies them. Similarly, WSDDN (Bilen et al. 2016b) proposes a specific architecture with two branches dedicated to classification and detection.

Many WSL segmentation methods are based on the MIL framework: MIL-FCN (Pathak, Shelhamer, et al. 2015) extends it to multi-class segmentation, MIL-Base (Pinheiro et al. 2015) introduces a soft extension of it, EM-Adapt (Papandreou et al. 2015) includes an adaptive bias into the framework, and Constrained CNN (CCNN) (Pathak, Krahenbuhl, et al. 2015) uses a loss function optimized for any set of linear constraints on the output space.

WILDCAT is specific to neither localization nor segmentation. It is learned for image classification, and the localized representations obtained can be straightforwardly used for spatial tasks such as these two, with minor changes in the predictor to adapt it to the task at hand.

WILDCAT Model

The overall WILDCAT architecture is depicted in Figure 2.2. It is based on an FCN, which is suitable for spatial predictions (Long et al. 2015), allowing end-to-end training for all image regions by sharing computations (Section 2.3.1). All regions are encoded into multiple class modalities with a WSL multi-map transfer layer to build structure into the representations (Section 2.3.2). Feature maps are then combined separately to yield class-specific heatmaps that can be globally pooled to get a single probability for each class, using a new spatial aggregation module. This generalizes previous global pooling functions, including negative evidence models, with positive and negative contributions computed from different numbers $k^+$ and $k^-$ of regions, and with an additional hyper-parameter controlling their relative importance (Section 2.3.3). It is finally applied to two WSL tasks, pointwise object localization and semantic segmentation (Section 2.3.4). We now delve into each point successively.
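To make the role of these hyper-parameters concrete, the sketch below gives one plausible reading of such a pooling function on a flattened class heatmap: the average of the highest-scoring regions plus a weighted average of the lowest-scoring ones. The function name, the argument names `k_pos`, `k_neg` and `alpha`, and the example values are assumptions for illustration; the exact formulation is the one given in Section 2.3.3, which is not reproduced in this excerpt.

```python
from typing import Sequence


def wildcat_style_pool(scores: Sequence[float], k_pos: int, k_neg: int,
                       alpha: float) -> float:
    """Average of the k_pos highest scores plus alpha times the average
    of the k_neg lowest scores of one class heatmap."""
    n = len(scores)
    if not (1 <= k_pos <= n and 1 <= k_neg <= n):
        raise ValueError("k_pos and k_neg must be between 1 and len(scores)")
    ranked = sorted(scores, reverse=True)
    positive = sum(ranked[:k_pos]) / k_pos   # positive evidence
    negative = sum(ranked[-k_neg:]) / k_neg  # negative evidence
    return positive + alpha * negative


heatmap = [3.0, 1.0, -2.0, 0.0]  # hypothetical flattened class heatmap
class_score = wildcat_style_pool(heatmap, k_pos=2, k_neg=1, alpha=0.5)
as_max = wildcat_style_pool(heatmap, k_pos=1, k_neg=1, alpha=0.0)
as_average = wildcat_style_pool(heatmap, k_pos=4, k_neg=1, alpha=0.0)
as_max_min = wildcat_style_pool(heatmap, k_pos=1, k_neg=1, alpha=1.0)
```

Under this reading, max pooling, global average pooling and max-plus-min negative evidence pooling are recovered as special cases, which is the sense in which the function generalizes previous ones.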

Table of contents:

1 introduction

1.1 Context

1.1.1 Scientific Context

1.1.2 Industrial Context

1.2 Visual Understanding Framework

1.2.1 Statistical Supervised Learning Framework

1.2.2 Image Classification

1.2.3 Object Detection

1.2.4 Weakly Supervised Learning

1.2.5 Multi-Task Learning

1.3 Motivations

1.4 Contributions and Outline

1.5 Related Publications

2 learning localized representations from image-level supervision

2.1 Introduction

2.2 Related Work

2.3 WILDCAT Model

2.3.1 Fully Convolutional Architecture

2.3.2 Multi-Map Transfer Layer

2.3.3 WILDCAT Pooling

2.3.4 WILDCAT Applications

2.4 Classification Experiments

2.4.1 Comparison with State of the Art

2.4.2 Further Analysis

2.5 Weakly Supervised Experiments

2.5.1 Weakly Supervised Pointwise Object Localization

2.5.2 Weakly Supervised Semantic Segmentation

2.5.3 Visualization of Results

2.6 Conclusion

3 learning part-based representations from object-level supervision

3.1 Introduction

3.2 Related Work

3.3 Deformable Part-based Fully Convolutional Networks

3.3.1 Fully Convolutional Feature Extractor

3.3.2 Deformable Part-based RoI Pooling

3.3.3 Classification and Localization Predictions with Deformable Parts

3.4 Experiments

3.4.1 Main Results

3.4.2 Ablation Study

3.4.3 Further Analysis

3.4.4 Comparison with State of the Art

3.4.5 Examples of Detections

3.5 Conclusion

4 learning task-related representations from auxiliary supervisions

4.1 Introduction

4.2 Related Work

4.3 ROCK: Residual Auxiliary Block

4.3.1 Merging of Primary and Auxiliary Representations

4.3.2 Effective MTL from Auxiliary Supervision

4.4 Application to Object Detection with Multi-Modal Auxiliary Information

4.5 Experiments

4.5.1 Ablation Study

4.5.2 Comparison with State of the Art

4.5.3 Pre-Training on Large-Scale MLT Dataset

4.5.4 Further Analysis

4.6 Conclusion

5 conclusion

5.1 Summary of Contributions

5.2 Perspectives for Future Work