Table of contents

I gIF-type Spiking Neuron Models

1 gIF-type spiking neuron models

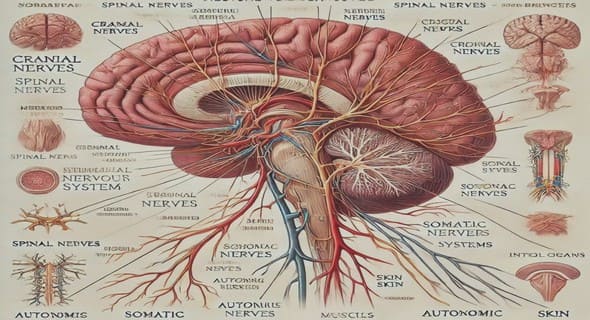

1.1 Neuron Anatomy

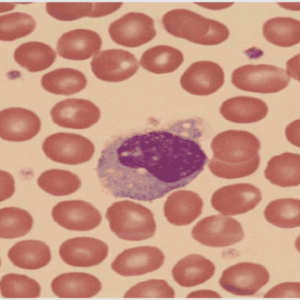

1.1.1 The spiking neuron

1.2 Discretized integrate and fire neuron models

1.2.1 From a LIF model to a discrete-time spiking neuron model

1.2.2 Time constrained continuous formulation

1.2.3 Network dynamics discrete approximation

1.2.4 Properties of the discrete-time spiking neuron model

1.2.5 Asymptotic dynamics in the discrete-time spiking neuron model

1.2.6 The analog-spiking neuron model

1.2.7 Biological plausibility of the gIF-type models

II Learning spiking neural networks parameters

2 Reverse-engineering in spiking neural networks parameters: exact deterministic estimation

2.1 Methods: Weights and delayed weights estimation

2.1.1 Retrieving weights and delayed weights from the observation of spikes and membrane potential

2.1.2 Retrieving weights and delayed weights from the observation of spikes

2.1.3 Retrieving signed and delayed weights from the observation of spikes

2.1.4 Retrieving delayed weights and external currents from the observation of spikes

2.1.5 Considering non-constant leak when retrieving parametrized delayed weights

2.1.6 Retrieving parametrized delayed weights from the observation of spikes

2.1.7 About retrieving delays from the observation of spikes

2.2 Methods: Exact spike train simulation

2.2.1 Introducing hidden units to reproduce any finite raster

2.2.2 Sparse trivial reservoir

2.2.3 The linear structure of a network raster

2.2.4 On optimal hidden neuron layer design

2.2.5 A maximal entropy heuristic

2.3 Application: Input/Output transfer identification

2.4 Numerical Results

2.4.1 Retrieving weights from the observation of spikes and membrane potential

2.4.2 Retrieving weights from the observation of spikes

2.4.3 Retrieving delayed weights from the observation of spikes

2.4.4 Retrieving delayed weights from the observation of spikes, using hidden units

2.4.5 Input/Output estimation

III Programming resetting non-linear networks

3 Programming resetting non-linear networks

3.1 Problem Position

3.2 Mollification in the spiking metrics

3.2.1 Threshold mollification

3.2.2 Mollified coincidence metric

3.2.3 Indexing the alignment distance

3.3 Learning paradigms

3.3.1 The learning scenario

3.3.2 Supervised learning scheme

3.3.3 Robust learning scheme

3.3.4 Reinforcement learning scheme

3.3.5 Other learning paradigms

3.4 Recurrent weights adjustment

3.5 Application to resetting non-linear (RNL) networks

3.5.1 Computational properties

3.6 Preliminary conclusion

IV Hardware Implementations of gIF-type Spiking Neural Networks

4 Hardware implementations of gIF-type neural networks

4.1 Hardware Description

4.1.1 FPGA (Field-Programmable Gate Array)

4.1.2 GPU (Graphics Processing Unit)

4.2 Programming Languages

4.2.1 Handel-C

4.2.2 VHDL (VHSIC hardware description language; VHSIC: very-high-speed integrated circuit)

4.2.3 CUDA (Compute Unified Device Architecture)

4.3 Fixed-point arithmetic for data representation

4.3.1 Resolution for the fixed-point data representation

4.3.2 Range for the fixed-point data representation

4.3.3 A precision analysis of the gIF-type neuron models

4.4 FPGA-based architectures of gIF-type neuron models

4.4.1 An FPGA-based architecture of gIF-type neural networks using Handel-C

4.4.2 An FPGA-based architecture of gIF-type neural networks using VHDL

4.5 GPU-based implementation of gIF-type neuron models

4.6 Numerical Results

4.6.1 Synthesis and Performance

V Conclusion

5 Conclusion

A Publications of the Author Arising from this Work

Bibliography