Table of contents

General Introduction

1 Neurosciences

1.1 Historical notes

1.2 Basic notions

1.2.1 Neurons and action potentials

1.2.2 The synapse

1.3 Spatial organization of the brain

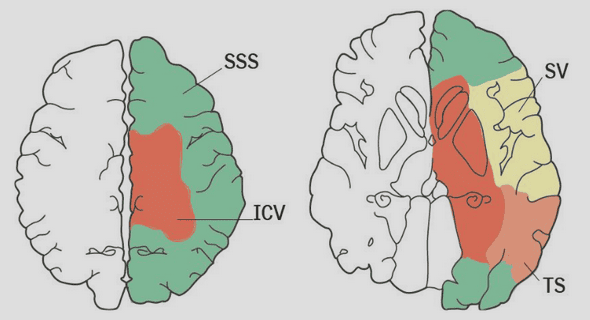

1.3.1 General overview

1.3.2 The mammalian cortex: from functional localization to microscopic organization

1.4 Mathematical models in Neuroscience

1.4.1 The neural code and the firing-rate hypothesis

1.4.2 Synaptic models in Neural Networks

1.4.3 Heterogeneities and noise

1.4.4 Neural fields

2 The mean-field approach

2.1 Mean-field formalism

2.2 Initial conditions and propagation of chaos

2.3 Heterogeneous systems: averaged and quenched results

3 Mean-field theory for neuroscience

3.1 A short history of rigorous mean-field approaches for randomly connected neural networks

3.2 Coupling methods for weak interactions

3.3 Large deviations techniques for Spin Glass dynamics

3.3.1 Setting

3.3.2 Frame of the analysis

3.3.3 Main results

II Large deviations for heterogeneous neural networks

4 Spatially extended networks

4.1 Introduction

4.1.1 Biological background

4.1.2 Microscopic Neuronal Network Model

4.2 Statement of the results

4.3 Large Deviation Principle

4.3.1 Construction of the good rate function

4.3.2 Upper-bound and Tightness

4.4 Identification of the mean-field equations

4.4.1 Convergence of the process

4.5 Non-Gaussian connectivity weights

4.6 Appendix

4.6.1 A priori estimates for single neurons

4.6.2 Proof of Lemma 4.2.2: regularity of the solutions for the limit equation

4.6.3 Non-Gaussian estimates

5 Network with state-dependent synapses

5.1 Introduction

5.2 Mathematical setting and main results

5.3 Large deviation principle

5.3.1 Construction of the good rate function

5.3.2 Upper-bound and Tightness

5.4 Existence, uniqueness, and characterization of the limit

5.4.1 Convergence of the process and Quenched results

III Phenomenology of Random Networks

6 Numerical study of a neural network

6.1 Introduction

6.2 Mathematical setting and Gaussian characterization of the limit

6.2.1 Qualitative effects of the heterogeneity parameters

6.2.2 The generalized Sompolinsky-Crisanti-Sommers Equations

6.3 Numerical results on Random Neural Networks

6.3.1 One-population networks

6.3.2 Multi-population networks

6.3.3 Heterogeneity-induced oscillations in two-population networks

6.4 Discussion

7 Study of the Random Kuramoto model

7.1 Introduction

7.2 Presentation of the model

7.3 Kuramoto model in neuroscience

7.4 Application of our results to the random Kuramoto model

7.4.1 Comparison with previous results

7.4.2 Numerical analysis of the phase transition

IV General Appendix

8 Generalities

8.1 A few classical theorems from probability theory

8.2 Gaussian Calculus

8.3 Stochastic Fubini Theorem

9 Large Deviations

9.1 Preliminaries

9.2 Preliminary results, and Legendre transform

9.3 Cramér’s Theorem

9.4 General Theory

9.4.1 Basic definitions and properties

9.5 Obtaining an LDP

9.5.1 Sanov’s theorem

9.5.2 Gärtner-Ellis Theorem

9.6 Deriving an LDP

9.6.1 Remarks on Legendre transform and convex rate functions

9.6.2 Non-convex Fenchel duality

9.7 Large Deviations Appendix

9.7.1 Sanov’s theorem for finite sequences

9.7.2 Proof of abstract Varadhan setting

General Notations

List of figures

Bibliography