
Teaching accreditation exams in France favor women in male-dominated disciplines and men in female-dominated fields

Introduction

Why are women underrepresented in most areas of science, technology, engineering, and mathematics (STEM)? One of the most common explanations is that a hiring bias against women exists in those fields (West and Curtis, 2006; Shalala et al., 2006; Hill et al., 2010; Sheltzer and Smith, 2014). This explanation is supported by a few older experiments (Swim et al., 1989; Foschi et al., 1994; Steinpreis et al., 1999), by a recent test using fictitious resumes (Moss-Racusin et al., 2012), and by a recent laboratory experiment (Reuben et al., 2014), the last two suggesting that the phenomenon still prevails.

However, some scholars have challenged this view (Ceci and Williams, 2011; Ceci et al., 2014), and another recent test using fictitious resumes finds the opposite result, namely a bias in favor of women in academic recruitment (Williams and Ceci, 2015). Studies based on actual hiring also find that women who apply to tenure-track STEM positions are more likely to be hired than men (National Research Council, 2010; Wolfinger et al., 2008; Glass and Minnotte, 2010; Irvine, 1996). However, those studies do not control for applicants’ quality, and a frequent claim is that their results simply reflect the fact that only the strongest female PhDs apply to these positions, whereas a larger fraction of male PhDs do so (Ceci et al., 2014; National Research Council, 2010). One study did control for applicants’ quality and reported a bias in favor of women in male-dominated fields (Breda and Ly, 2015), but its external validity is limited by its very specific context. The present analysis is based on a natural experiment involving over 200,000 individuals who took competitive exams for primary, secondary, and college/university teaching positions in France over the period 2006-2013, and it has two distinct advantages over all previous studies. First, it provides the first large-scale real-world evidence on gender biases in both evaluation and hiring, and on how those biases vary across fields and contexts. Second, it offers possible explanations for the discrepancies between existing studies. Those discrepancies may stem from various factors, including experimental conditions, context, the type of evaluation made (e.g. grading or hiring), and the math content of the exams.
We hypothesize that two moderators are important for understanding what shapes evaluation biases against or in favor of women: the actual degree of female underrepresentation in the field in which the evaluation takes place, and the level at which candidates are evaluated, from lower-level (primary and secondary teaching) to college/university hiring.

Carefully taking into account the extent of female underrepresentation in 11 academic fields allows us to extend the analysis beyond the STEM distinction. As pointed out recently (Ceci et al., 2014; Williams and Ceci, 2015; Breda and Ly, 2015; Leslie et al., 2015), the focus on STEM versus non-STEM fields can be misleading for understanding female underrepresentation in academia, as some STEM fields are not dominated by men (e.g. 54% of U.S. PhDs in molecular biology are women) while some non-STEM fields, including the humanities, are male-dominated (e.g. only 31% of U.S. PhDs in philosophy are women¹). The underrepresentation of women in academia is thus not limited to STEM fields. A better predictor of this underrepresentation, some have argued, is the belief that innate raw talent is the main requirement for success in the field (Leslie et al., 2015).

The level at which the evaluation takes place matters because stereotypes (or political views) can influence behavior differently depending on whether evaluators face already highly skilled applicants (as in Williams and Ceci 2015; Breda and Ly 2015) or moderately skilled ones (as in Ceci and Williams 2011; Ceci et al. 2014). Their mere presence in the applicant pool for a high-level position signals candidates’ motivation and potential talent, whereas this is less true at a lower level, such as primary school teaching. Women who have mastered the curriculum and who apply to high-skill jobs in male-dominated fields signal that they do not fit the general stereotype associating quantitative ability with men. This may induce a rational belief reversal regarding the motivation or ability of those female applicants (Fryer and Levitt, 2010), or a so-called “boomerang effect” (Heilman et al., 1988) that modifies attitudes towards them. Experimental evidence supports this theory by showing that gender biases are lower or even reversed when information clearly indicates that those being evaluated are highly competent (Heilman et al., 1988; Koch et al., 2015).

To study how both female underrepresentation and candidates’ expected aptitudes can shape skills assessment, we exploit the two-stage design (written then oral tests) of the three national exams used in France to recruit virtually all primary-school teachers (CRPE), middle- and high-school teachers (CAPES and Agrégation), as well as a large share of graduate school and university teachers (Agrégation). A college degree is required to take part in these competitive exams. Except for the lower-level exam (CRPE), each exam is subject-specific and typically includes 2 to 3 written and oral tests taken roughly at the same time. Importantly, oral tests are not general recruiting interviews: depending on the subject, they include exercises, questions, or text discussions designed to assess candidates’ fundamental skills, just as written tests do. All tests are graded by teachers or professors specialized in the subject, except at the lower level, where a non-specialist sometimes sits on a two-to-three-examiner panel alongside specialists. At the highest-level exam (Agrégation), 80% of evaluators are either full-time researchers or university professors in French academia; the corresponding figure is 30% at the medium-level exam (CAPES).

Our strategy exploits the fact that the written tests are “blinded” (candidates’ name and gender are not known to the professor who grades them), whereas the oral tests obviously are not. Provided that female handwriting cannot be easily detected (a point we discuss later), written tests provide a counterfactual measure of candidates’ cognitive ability in each subject.
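The logic of this comparison can be sketched as follows. This is a minimal illustration, not the authors' estimation code: it assumes each candidate has one aggregated written score and one aggregated oral score within a single exam, field, and year cohort, converts both to percentile ranks (tied scores share the average of their ranks), and compares the average oral-minus-written change in percentile rank between women and men, in the spirit of the difference-in-differences (DD) measure listed in section 1.4.2. The function names `percentile_ranks` and `oral_minus_written_gap` and the example scores are hypothetical. A positive value means that women gain more ground than men once their identity is visible to examiners.

```python
from statistics import mean


def percentile_ranks(scores):
    """Return each score's percentile rank in (0, 100] within the cohort.

    Tied scores share the average of the ranks they occupy (mid-rank convention).
    """
    n = len(scores)
    order = sorted(range(n), key=lambda i: scores[i])
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        # Extend `end` over a run of tied scores.
        while end + 1 < n and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        avg_rank = (start + end) / 2 + 1  # 1-based average rank of the tied run
        for k in range(start, end + 1):
            ranks[order[k]] = 100.0 * avg_rank / n
        start = end + 1
    return ranks


def oral_minus_written_gap(written, oral, is_female):
    """Female-minus-male difference in the mean (oral - written) percentile-rank change.

    Written tests are anonymous and oral tests are not, so a nonzero gap is
    consistent with (but does not by itself prove) a gender bias on the orals.
    """
    written_pr = percentile_ranks(written)
    oral_pr = percentile_ranks(oral)
    change = [o - w for o, w in zip(oral_pr, written_pr)]
    women = [c for c, f in zip(change, is_female) if f]
    men = [c for c, f in zip(change, is_female) if not f]
    return mean(women) - mean(men)


# Hypothetical cohort of four candidates (scores out of 20).
written = [12, 15, 9, 14]
oral = [14, 11, 10, 13]
is_female = [True, False, True, False]
gap = oral_minus_written_gap(written, oral, is_female)
print(gap)  # prints 50.0
```

With four candidates the number is only illustrative; the chapter's actual statistical models rely on econometric specifications and clustered standard errors (sections 1.4.5 to 1.4.10).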

The French evaluation data offer unique advantages over previously published experiments. They provide real-world test scores for a large group of individuals, thereby avoiding the usual problem of the limited external validity of experiments. At the same time, these data present a compelling “experiment of nature” in which naturally occurring variations can be leveraged to provide controls. A final advantage is that they draw on very rich administrative data, which allow numerous statistical controls to be applied.

Institutional background

Competitive exams to recruit teachers in France

Teachers in France are recruited through competitive exams, either internally from already-hired civil servants (usually already holding a teaching accreditation) or externally from a pool of applicants who are not yet civil servants. Candidates for private and public schools are recruited through the same competitive exams, but they must specify their choice at registration, and the final rankings are distinct. Because these are the exams for which we have data, we focus on the three competitive exams used to recruit teachers externally for positions in public schools or public higher education institutions (such as prep schools and colleges/universities, see below). More than 80% of all new teaching positions in France are filled with candidates who have passed one of these three exams.

Systematic non-anonymous oral and anonymous written tests

The competitive exams for teaching positions first comprise an “eligibility” stage in the form of written tests taken in April. All candidates are then ranked according to a weighted average of all their written test scores, and the highest-ranked candidates are declared eligible for the second stage (the eligibility threshold is exam-specific). This second “admission” stage takes place in June and consists of oral tests on the same subjects (see Table 1.1). Importantly, oral test examiners may differ from the written test examiners, and they do not know what grades candidates obtained on the written tests. Candidates are informed of their eligibility for the oral tests only two weeks before taking them, and they do not know their written test scores either. After the oral tests, a final score is computed as a weighted average of all written and oral test scores (usually with a much higher weight on the oral tests). This score is used to rank the eligible candidates and admit the best ones. The number of admitted candidates usually equals the number of positions to be filled by the recruiting body and is known to all in advance.
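The two-stage procedure can be sketched in a few lines. This is a simplified illustration under hypothetical assumptions: eligibility is modeled as a fixed number of top written-test ranks (`n_eligible`) rather than an exam-specific score threshold, the example uses equal test weights within each stage, and the final score gives the oral average a weight of 0.6 (`oral_share`), a value chosen only to reflect that oral tests usually weigh more. None of these names or values come from the exam regulations.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    name: str
    written: list[float]  # anonymous written test scores
    oral: list[float] = field(default_factory=list)  # only eligible candidates sit the orals


def weighted_average(scores, weights):
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


def two_stage_selection(candidates, written_w, oral_w, n_eligible, n_positions, oral_share=0.6):
    """Return (eligible names, admitted names) under a simplified two-stage rule."""
    # Stage 1 ("eligibility"): rank everyone on the written average alone.
    by_written = sorted(
        candidates, key=lambda c: weighted_average(c.written, written_w), reverse=True
    )
    eligible = by_written[:n_eligible]

    # Stage 2 ("admission"): the final score mixes the written and oral
    # averages, with the larger (hypothetical) weight on the oral tests.
    def final_score(c):
        written_part = (1 - oral_share) * weighted_average(c.written, written_w)
        oral_part = oral_share * weighted_average(c.oral, oral_w)
        return written_part + oral_part

    ranked = sorted(eligible, key=final_score, reverse=True)
    admitted = ranked[:n_positions]  # number admitted = number of positions, known in advance
    return [c.name for c in eligible], [c.name for c in admitted]


candidates = [
    Candidate("A", written=[15, 13], oral=[10, 11]),
    Candidate("B", written=[12, 14], oral=[16, 15]),
    Candidate("C", written=[9, 10]),  # not eligible, so never takes the orals
    Candidate("D", written=[14, 13], oral=[12, 12]),
]
eligible, admitted = two_stage_selection(candidates, [1, 1], [1, 1], n_eligible=3, n_positions=2)
print(eligible)  # prints ['A', 'D', 'B']
print(admitted)  # prints ['B', 'D']
```

Candidate A ranks first on the written tests but is not admitted, which shows how a heavily weighted oral stage can reorder the candidates who cleared the written stage; candidate C never reaches the orals at all.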

Competitive exams based on written and oral tests are very common in France: they are typically used to recruit future civil servants, as well as students in France’s most prestigious higher education institutions (see details in Breda and Ly 2015). Each year, hundreds of thousands of French citizens take such exams. Historically, most of these exams only included oral tests or oral interviews, but the growing number of candidates over time led the exams’ organizers to add a first stage selection of candidates that is based on written tests, which are less costly to evaluate than the second stage oral exams. These exams are thus widespread in French society, and something most candidates are familiar with.

Exams at three different levels

We exploit data on three broad types of exams: the Agrégation, the CAPES (Certificat d’Aptitude au professorat de l’enseignement du second degré) and the CRPE (Concours de Recrutement des Professeurs des Ecoles). As explained below, the Agrégation exam is partly geared toward evaluating potential candidates for professorial hiring.

Higher level exam: Agrégation

The most prestigious and difficult of these exams is the Agrégation, which has strong historical roots: it dates back to 1679 in Law and 1764 in Arts, and started to spread to other fields in 1808. It is a field-specific exam: candidates take it in a given subject in order to obtain the accreditation to teach that subject only. Although there are roughly forty fields of specialization, a dozen of them account for 80% of both positions and candidates, and we focus exclusively on these dozen fields in the present study. Once candidates have chosen a subject, they are tested only in that subject, with the exception of a short interview aimed at assessing their ability to « behave as an ethical and responsible civil servant » (see below).

The Agrégation is highly selective, and only well-prepared candidates with a strong background in their field of study have a chance to pass it. Even among these well-prepared candidates, the admission rate is around 12.8% (see Table 1.1). Since the 2011 reform, Agrégation candidates must hold at least a master’s degree (before that, the Maîtrise diploma, obtained after four completed years of college, was sufficient). Passing the Agrégation is necessary to teach in higher education institutions such as the selective preparatory schools that prepare the best high-school graduates, over two years, for the competitive entrance exams to the French Grandes Ecoles (such as Ecole Polytechnique, Ecole Normale Supérieure, Ecole Centrale, HEC, etc.). It also gives access to teaching-only university positions (PRAG). These positions are, for example, held by PhDs who have not (yet) managed to obtain an assistant professor position. In total, about a fourth of the individuals who have passed the Agrégation teach in postsecondary education.

Agrégation and CAPES holders both teach in middle and high-school. However, Agrégation holders are rarely appointed to middle schools and have on average much higher wages, fewer teaching hours, and steeper career paths in secondary education.

Although there is no official link between the Agrégation exam and academia, it is well known that the two are related in practice. First, a large majority of Agrégation examiners are full-time researchers or university professors (see statistics in section 1.2.4). Second, on the candidates’ side, holding the Agrégation can help an academic career in some fields, and a significant fraction of researchers actually hold this diploma. Conversely, according to the French association of Agrégation holders, about 15% of Agrégation holders who teach in high school have a PhD. Some of the most prestigious higher education institutions, the three Ecoles Normales Supérieures, select the best undergraduate students and prepare them for both a teaching and an academic career. Two of these three institutions require all their students to take the Agrégation exam, even if they are only interested in an academic career. The historical role played by the Agrégation and its rankings among the French intellectual elite is perhaps best summarized by an anecdote. In 1929, Jean-Paul Sartre and Simone de Beauvoir both took and passed the philosophy Agrégation exam. Sartre was ranked first and de Beauvoir second. Both became very famous philosophers and life partners. However, many specialists considered de Beauvoir the stronger scholar, who should have been ranked first instead of Sartre. As a matter of fact, Sartre had already taken and failed the exam in 1928, whereas de Beauvoir passed it on her first attempt. This illustrates the toughness of the exam, its informal links with academia (it is taken and graded by many (future) academics), and the fact that the patterns observed in our data today may not always have prevailed.

Medium level exam: CAPES

The CAPES is very similar to the Agrégation, but its success rate is higher (23% against 12.8% for the Agrégation, see Table 1.2) because its knowledge requirements are lower. The two exams are not mutually exclusive: each year, about 600 individuals take both. Only 4.4% of them pass the Agrégation, whereas a much larger share (18.19%) pass the CAPES (see Table 1.2). CAPES candidates also need to hold a master’s degree or a Maîtrise. CAPES holders do not have access to most positions in higher education and teach exclusively in secondary education. Finally, and not surprisingly, the CAPES is seen as less prestigious than the Agrégation.
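Because the methods section lists odds ratios and relative risks among its measures (section 1.4.3), the two pass rates for dual candidates make a convenient worked example. This is illustrative arithmetic only: it treats 18.19% and 4.4% as exact proportions for the same group of roughly 600 candidates (about 109 versus 26 successes) and does not reproduce any estimate reported in the chapter. The helper names are hypothetical.

```python
def relative_risk(p1, p0):
    """Ratio of two success probabilities."""
    return p1 / p0


def odds_ratio(p1, p0):
    """Ratio of the odds p / (1 - p) of two success probabilities."""
    return (p1 / (1 - p1)) / (p0 / (1 - p0))


p_capes = 0.1819  # share of dual candidates passing the CAPES
p_agreg = 0.044   # share of the same candidates passing the Agrégation

rr = relative_risk(p_capes, p_agreg)
or_ = odds_ratio(p_capes, p_agreg)
print(f"relative risk = {rr:.2f}, odds ratio = {or_:.2f}")
# prints relative risk = 4.13, odds ratio = 4.83
```

The odds ratio is larger than the relative risk here because the higher pass rate is not close to zero; the two measures converge only when both probabilities are small.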

Lower level exam: CRPE

The CRPE is exclusively aimed at recruiting non-specialized primary-school teachers. It is a non-specialized exam with a series of relatively low-level tests in a wide range of fields (math, French, history, geography, science, technology, art, literature, music, and sport). In that sense, it is very different from the CAPES and the Agrégation.

Two to three examiners at each test

All three exams include a series of written and oral tests. By law, each individual test must be graded by at least two evaluators. Written tests are usually graded twice, while the examination panel for each oral test typically includes three members, usually of mixed gender (even if this rule is sometimes hard to respect for practical reasons). At the higher-level (Agrégation) and medium-level (CAPES) exams, examiners are always specialists in the exam field and usually have passed the exam themselves (at least 50% of them). We collected data on the composition of the examiner panels for every field and exam level over the period 2006-2013. Evaluators are typically teachers in secondary or post-secondary schools (15% at the higher-level and 54% at the medium-level exam), full-time researchers, professors, or assistant professors at the university (76% at the higher-level and 30% at the medium-level exam), or teaching inspectors (9% at the higher-level and 16% at the medium-level exam). They know the curriculum on which candidates are tested thoroughly, and they grade the tests accordingly.

The lower-level exam is not field-specific, but since 2011 it has included both a written and an oral test in math and in literature. Each two-to-three-examiner panel includes non-specialists and generally at least one specialist in the subject matter.

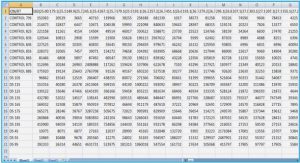

Data

The data used in these analyses belong to the French Ministry of Education and are made available under a contractual agreement (which defines the conditions of access and use and ensures confidentiality). They provide information on every candidate taking the CRPE, CAPES, and Agrégation exams over the period 2006-2013. For every exam, the data provide candidates’ aggregated scores on the written and oral examinations. These scores are weighted averages of the scores obtained on all written tests and on all oral tests, respectively (the weights are predefined and known to all examiners and candidates in advance). The aggregated written score establishes a first-stage ranking of the candidates, which is used to decide who is eligible to take the oral tests. After the oral tests, a final score is computed for eligible candidates as the sum of the aggregated oral and written scores. This final score is used to establish a second-stage ranking and to decide which candidates are admitted. The data also include information on candidates’ socio-demographic characteristics, including sex, age, nationality, highest diploma, and family and occupational status.

Detailed scores for the first six tests of each competitive examination are also collected (except for the CRPE over the period 2007-2010, for which no detailed information is available). Only six detailed test scores are available, in addition to the total oral and written scores, because the Ministry of Education formatted the data collected each year for each exam in a way that arbitrarily prevents storing more. This truncation implies that we miss some detailed scores in exams that include more than six tests in total. In practice, between one (e.g. Mathematics) and five (e.g. Modern Literature) oral test scores are missing for the high-level examination (see Table 1.17).
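The effect of the six-slot truncation can be made explicit with a small helper. This sketch assumes, as the paragraph implies, that written test scores occupy the first stored slots, so any overflow falls on the oral tests; `missing_oral_scores` and the test counts in the example are hypothetical and do not describe particular exams.

```python
MAX_STORED_TESTS = 6  # detailed scores are kept for the first six tests only


def missing_oral_scores(n_written, n_oral, max_stored=MAX_STORED_TESTS):
    """Number of oral test scores lost when written tests fill the first stored slots."""
    slots_left_for_oral = max(0, max_stored - n_written)
    stored_oral = min(n_oral, slots_left_for_oral)
    return n_oral - stored_oral


# Hypothetical exam layouts: (number of written tests, number of oral tests).
for layout in [(2, 2), (3, 4), (4, 5), (6, 5)]:
    print(layout, "->", missing_oral_scores(*layout), "oral score(s) missing")
```

Only the detailed scores are affected: the aggregated written and oral scores remain available for every candidate.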

The data are exhaustive. In particular, they contain about thirty CAPES and Agrégation exams in small subfields that we have not analyzed, either because the sample sizes are too small (e.g. 10 observations per year at the grammar Agrégation) or because they appear too atypical compared with traditional academic fields (e.g. jewelry, banking, audiovisual). Of the 20 different foreign or regional language CAPES and Agrégation exams, we have kept only the four main ones, for which we have substantial sample sizes (English, Spanish, German, and Italian), and grouped them into a single field labeled « Foreign languages ». Finally, in each field that we consider, we have retained only candidates who were eligible for the oral tests and who actually took all written and oral tests². Even after this data cleaning, the sample sizes remain very large: about 18,000 candidates at the Agrégation, 70,000 at the CAPES, and more than 100,000 at the CRPE. Descriptive statistics are provided in Tables 1.1 to 1.3. Most major academic fields are represented in our final sample (see Table 1.3).
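The sample restrictions just described amount to a simple filter. The sketch below is a hypothetical illustration: the `Record` fields, the `clean_sample` function, and the example set of retained fields are assumptions made for the example, not the Ministry's variable names or the authors' code.

```python
from dataclasses import dataclass, replace

# The four main languages pooled into a single "Foreign languages" field.
MAIN_LANGUAGES = {"English", "Spanish", "German", "Italian"}


@dataclass(frozen=True)
class Record:
    subject: str
    eligible: bool        # declared eligible for the oral tests
    took_all_tests: bool  # actually sat every written and oral test


def clean_sample(records, kept_fields):
    """Pool the main languages, drop unretained fields, keep complete eligible candidates."""
    kept = []
    for r in records:
        subject = "Foreign languages" if r.subject in MAIN_LANGUAGES else r.subject
        if subject not in kept_fields:
            continue  # small or atypical subfields, and other languages, are dropped
        if not (r.eligible and r.took_all_tests):
            continue  # only eligible candidates with complete scores are retained
        kept.append(replace(r, subject=subject))
    return kept


records = [
    Record("German", True, True),
    Record("Portuguese", True, True),     # language outside the main four
    Record("Mathematics", False, False),  # not eligible for the orals
    Record("Mathematics", True, False),   # eligible but missed a test
    Record("Jewelry", True, True),        # atypical field, not retained
    Record("Philosophy", True, True),
]
kept_fields = {"Mathematics", "Philosophy", "Foreign languages"}  # illustrative subset
result = clean_sample(records, kept_fields)
print([r.subject for r in result])  # prints ['Foreign languages', 'Philosophy']
```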

For each competitive examination, candidates take between two and six written tests and between two and five oral tests, depending on the field. Although the tests differ across fields, the way they are framed shares similarities. In Mathematics, Physics, and Chemistry, the written tests consist of problems, supplemented by a few questions, that assess the candidate’s scientific knowledge. In Philosophy, History, Geography, Biology, Literature, and Foreign languages, the written tests systematically include an « essay ». This exercise is very widespread in secondary education and in the recruitment of French civil servants. It consists of a coherent, structured piece of writing in which candidates develop an argument based on their knowledge, sometimes using several documents. It is typically based on a general question or quotation (Literature and Philosophy), a concept (History and Geography), a phenomenon (Economics and Social sciences), or a statement (Biology and Geology) that needs to be discussed.

Oral tests always include a « lesson », for all exams and in all fields. The « lesson » is a structured teaching sequence on a given subject. The presentation ends with an interview in which the examiners challenge the candidate’s knowledge and, to some extent, her pedagogical skills. The « lessons » in mathematics and literature were only added to the CRPE after the 2011 reform.

Finally, a test entitled “Behave as an Ethical and Responsible Civil Servant” (BERCS) was introduced in 2011 at all three levels of recruitment (CRPE, CAPES, Agrégation). It consists of a short oral interview. In the medium- and high-level exams, this interview is part of an oral test that otherwise attempts to evaluate competence in the core subject, and it is consequently graded by teachers or professors specialized in that subject. In the lower-level exam, it is graded as part of the literature test. Detailed BERCS scores are only available for the lower- and medium-level exams. A description of all tests, exams, and fields is provided in Tables 1.16 and 1.17.

Table of contents :

Introduction

1 Teaching accreditation exams in France favor women in male-dominated disciplines and men in female-dominated fields

1.1 Introduction

1.2 Institutional background

1.2.1 Competitive exams to recruit teachers in France

1.2.2 Systematic non-anonymous oral and anonymous written tests

1.2.3 Exams at three different levels

1.2.4 Two to three examiners at each test

1.3 Data

1.4 Method

1.4.1 Percentile ranks

1.4.2 Variations in percentile ranks between oral and written tests (DD)

1.4.3 Odds ratios and relative risks

1.4.4 Using total scores on written and oral tests or keeping only one written and one oral test

1.4.5 A simple linear model to derive econometric specifications

1.4.6 Statistical models used to assess the gender bias on oral tests in each field and at each level

1.4.7 Using initial scores instead of percentile ranks

1.4.8 Statistical model to assess how the gender bias on oral tests varies from one subject to another

1.4.9 Statistical model to assess how the relationship between subjects’ extent of male domination and gender bias on oral tests varies between the medium- and the high-level exams

1.4.10 Clustering standard errors

1.5 Results

1.5.1 Gender differences between oral and written test scores at exams to recruit secondary school and postsecondary professorial teachers

1.5.2 A clear pattern of rebalancing gender asymmetries in academic fields, strongest at the highest-level exam, and invisible at the lower-level exam

1.5.3 Implications for the gender composition of recruited teachers and professors in different fields

1.5.4 Gender of evaluators

1.5.5 Comparison of an oral test that is common across all exams

1.6 Discussion

1.6.1 Handwriting detection

1.6.2 Gender differences in the types of abilities that are required on oral and written tests

1.6.3 Results from statistical models DD, S, and S+IV at the medium- and higher-level exams

1.6.4 Results from statistical models DD, S, and S+IV at the lower-level exams

1.6.5 Analysis of the effect of the gender composition of the examiner panels

1.7 Conclusion

2 Understanding the effect of salary, degree requirements and demand on teacher supply and quality: a theoretical approach

2.1 Introduction

2.2 Related literature

2.3 The effect of wage

2.3.1 A simple model

2.3.2 Implication for teacher supply

2.3.3 Implication for teacher skills

2.4 The effect of labor demand

2.4.1 Model

2.4.2 Implication for teacher supply

2.4.3 Implication for teacher skills

2.5 The effect of degree requirement

2.5.1 Educational and professional choices when teachers are required to have a bachelor’s degree

2.5.2 Educational and professional choices when teachers are required to have a master’s degree

2.6 Conclusion

Appendix

3 How does the increase in teachers’ qualification levels affect their supply and characteristics?

3.1 Introduction

3.2 How to measure teacher quality?

3.3 Institutional background

3.3.1 The recruitment of public primary school teachers

3.3.2 Reform of teachers’ diploma level

3.4 Data and descriptive statistics

3.4.1 Teacher qualifications

3.4.2 Teacher supply and teacher demand

3.4.3 Teacher skills

3.4.4 Teacher diversity

3.4.5 Teacher salaries

3.5 Exploratory analysis when teachers are recruited at the bachelor level (before 2011)

3.5.1 Relationship between teacher supply, teacher demand and salary

3.5.2 Relationship between teacher characteristics, teacher demand and salary

3.5.3 Relationship between diploma level and teacher characteristics

3.6 Model

3.7 Results

3.7.1 Effect of a higher degree requirement on teacher supply

3.7.2 Effect of a higher diploma requirement on teacher characteristics

3.8 A placebo test

3.9 Discussion

3.9.1 Is there a link between the qualification reform and teacher shortages?

3.9.2 Short-term or long-term effects?

3.10 Conclusion

Tables

Figures

Appendix

A Primary school teacher recruitment examinations are not standardized in France

B Scope of the analysis

C Descriptive statistics

D A first method for estimating scoring biases between regions

E A second method for estimating scoring biases between regions and years

E.1 Descriptive evidence and preliminary analysis

E.2 Model

E.3 Results

F Robustness checks

Tables

Figures

4 How school context and management influence sick leave and teacher departure

4.1 Introduction

4.2 Literature review

4.3 Context

4.3.1 Absences in the French education system

4.3.2 Recruitment and wage in (secondary) education

4.3.3 Mobility

4.3.4 Teachers in deprived areas

4.3.5 School principals and vice-principals

4.4 Data and descriptive statistics

4.4.1 Sources

4.4.2 Descriptive statistics

4.5 Method

4.5.1 Specification

4.5.2 Estimation

4.6 Results

4.6.1 Contribution of teachers, schools and principals to the annual duration of teacher absences

4.6.2 What is the overall impact of schools and principals on teachers absences? A counterfactual analysis

4.6.3 Relationship between the contribution of principals and schools to teacher absences and turnover

4.6.4 Who leaves schools that contribute most to absences?

4.6.5 Relationship between school effects and school characteristics

4.6.6 What are the working conditions and psychosocial risk factors associated with schools’ and principals’ effects on teacher absences?

4.6.7 What is the relationship between teacher health and school and principal effects on absenteeism?

4.6.8 Has the increase in prevention from 2014 been more intense in schools (and among school principals) that increase teacher absences?

4.6.9 Are newly recruited teachers assigned to more favourable work environments?

4.7 Robustness checks

4.7.1 Exogenous mobility assumption

4.7.2 Is the most appropriate model additive or multiplicative?

4.7.3 What is the impact of log-linearisation on estimates?

4.7.4 Are absences partly explained by peer effects?

4.8 Conclusion

Tables

Figures

Conclusion: Policy implications, pending issues and future research