Get Complete Project Material File(s) Now! »

Preliminaries and Notations

In this chapter some graph theory terminologies (Section 2.1), some network ow problems (Section 2.2), and some denitions in polyhedral theory (Section 2.3) are given. The de-nitions, terminologies, lemmas and algorithms for graph theory and network programming are taken from Bazaraa et al. (1990), Murty (1992), Graham et al. (1995), and Nemhauser and Wolsey (1999). The denitions in polyhedral theory and polyhedral combinatorics are taken from Papadimitriou and Steiglitz (1982), Grˆtschel and Padberg (1985), and Schrijver (1995).

Graph Theory Preliminaries

Let V be a nite nonempty set of elements called nodes or vertices. Let E be a subset of V with its elements called edges, denoted as e = (v; u) 2 E, where u and v are called the endpoints of e. An edge e 2 E is called incident to some node v 2 V , if node v is an endpoint of e. If for an edge in E, the edge is directed from one endpoint to the other, it is called a directed edge or an arc, otherwise it is called an undirected edge. Throughout this study we denote both directed and undirected edges as (v; u) and specify whether this representation indicates a directed edge or not. The set of arcs is usually denoted as A.

A graph (directed graph) is dened using a set of nodes and a set of edges (arcs), and it is denoted by G = (V; E)(G = (V; A)). A graph is called an undirected (directed) graph if all of its edges are undirected (directed). A directed graph is also known as digraph. A graph with both directed and undirected edges is called a mixed graph. If a graph contains all possible edges between all the nodes, it is called a complete graph. A node in a digraph with no entering arcs (exiting arcs) is called a source node (sink node).

Given a graph G = (V; E), the set of edges that are incident to a node v 2 V are denoted by (v). If G is a digraph then +(v) is the set of edges incident to v that are directed out of v, and (v) is the set of edges incident to v that are directed towards v. The degree of a node is the number of edges incident to that node.

Network Flow Problems

graph are called adjacent to each other if there exists an edge with its endpoints being these two nodes. A node sequence (v0; :::; vk) in G is called a path if no repetitions in the sequence exists, and if (vi 1; vi) 2 E, for all i = 1; :::; k. A node sequence (v0; :::; vk), for some 3 k jV j, is called a cycle if v0 = vk, and if the sequence (v0; :::; vk 1) is a path.

A cycle that contains all the node in V is called a Hamiltonian cycle.

Let E(U) = f(i; j) 2 Eji; j 2 Ug, for some U V in graph G. A graph G0 = (V 0; E(V 0)) is called a subgraph of G if V 0 V . A subgraph G0 = (V 0; E(V 0)) of G is called a component of G, if and only if there is a path between any two nodes in V 0 but not between any of the nodes from V 0 and V n V 0. If a graph has only one component, it is called connected. An edge of a connected graph that does not lie in any cycle of the graph is called a bridge. A connected graph with no cycles is called a tree. Given a digraph, a strongly connected component of the graph is a subset of V such that for any given set of vertices u and v in the component, there is a path from u to v.

Denition 2.1.1. A graph G = (V; E) is called a bipartite graph if V can be partitioned into two nonempty subsets V1 and V2 such that every edge in E has an endpoint in V1 and another endpoint in V2. A bipartite graph is generally denoted as G = (V1; V2; E).

Denition 2.1.2. Given a digraph G = (V; A), let some function u : A(G) ! R+, called the capacities of the edges in G, be dened. A network is denoted by N = (G; u; s; t), where s and t in V are the sink and the source nodes respectively.

In the next section some network ow problems and some well known algorithms for these problems are given.

The Ford-Fulkerson Algorithm

Ford and Fulkerson (1956a) have suggested in their seminal work an algorithm to nd the maximum ow in a network (the Ford-Fulkerson algorithm). It starts with any feasible ow x, e.g. x = 0. Given the residual network, the algorithm nds a path from the source node to the sink node. Such a path is called an augmenting path. The algorithm then sends some ow along the augmenting paths and updates ow capacities along the arcs of the residual network (Algorithm 1), and it terminates when no more augmenting path can be found. According to Theorem 4.4 in Nemhauser and Wolsey (1999), a feasible ow f is maximum if and only if no augmenting path with respect to ow f exists.

The Preow-Push Algorithm

An algorithm for nding the maximum ow in a single commodity network, known as the preow-push algorithm is suggested by Goldberg and Tarjan (Goldberg and Tarjan, 1988). According to Ahuja et al. (1993), the best preow-push algorithms can outperform the best augmenting path algorithms. Let preow be a function

Finding Arcs with Frozen Flow in a Network

To nd arcs with frozen ow in a network, some cycles in the network called the traversable cycles need to be used. These cycles can be dened using the following defnitions.

1 Introduction

1.1 A Brief History of the Traveling Salesman Problem

1.2 Combinatorial Optimisation Problems

1.3 Complexity of Computational Problem

1.4 The Traveling Salesman Problem (TSP)

1.5 Heuristics for the TSP

1.7 The Layout of This Thesis

2 Preliminaries and Notations

2.1 Graph Theory Preliminaries

2.2 Network Flow Problems

2.3 Polyhedral Theory and Polyhedral Combinatorics

3 Various Formulations for the TSP

3.1 Flood

3.2 Dantzig, Fulkerson and Johnson

3.3 Miller, Tucker and Zemlin

3.4 Fox, Gavish and Graves

3.5 Wong

3.6 Picard and Queyranne

3.7 Claus

3.8 Carr

3.9 Comparison of the TSP Formulations

4 The Multistage Insertion Formulation and the Pedigree Polytope

4.1 The Multistage Insertion Formulation (MI)

4.2 The Pedigree Polytope

4.3 Conclusion

5 The Pedigree Polytope and the Membership Problem

5.1 The Membership Problem in the Pedigree Polytope

5.2 Finding a Feasible Solution to the MI-relaxation Problem

5.3 Checking the Sucient Condition for X=k 2 conv(Pk)

5.4 Checking the Necessary Condition for X=k + 1 2 conv(Pk+1)

5.5 A Counterexample

5.6 Conclusion

6 The LP Relaxation of Various TSP Models

6.1 The LP Relaxations of the DFJ Formulation

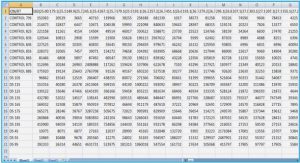

6.2 Computational Results on Solving TSPLIB Instances by Various TSP Formulations

6.3 The TSP Instances by Papadimitriou and Steiglitz

6.4 Conclusion

7 The Branch and Bound Method for the TSP

7.1 A Brief History of the Branch and Bound Method for the TSP

7.2 Relaxations for the TSP

7.3 Branching Methods

7.4 Branch and Bound Method for the MI Formulation

7.5 Computational Results on Branch and Bound for Various TSP Formulations

7.6 Conclusion

8 Some Heuristics Based on the MI Formulation

8.1 The Pedigree Tree

8.2 The Nearest Pedigree Heuristic

8.3 The Pedigree Extraction Heuristic

8.4 The 1-Discordant Local Search

8.5 The 2-Discordant Local Search

8.6 The Pedigree Branch Local Search .

8.7 Computational Results

8.8 Conclusion

9 Conclusions

Bibliography

GET THE COMPLETE PROJECT