
Source-filter model vs. acoustic model

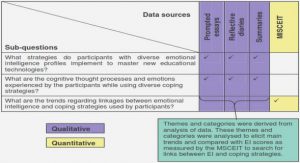

Figure 1.1 depicts a profile cut of the head to show the various structures of the physiology of voice production, whereas the left part of figure 1.2 emphasizes the links between these elements. The articulators are in blue, the passive structures are in grey, and the glottis, which is acoustically active, is in red, like the vocal folds. During the realization of a voiced phoneme, the vocal folds, pushed by the air coming from the lungs, vibrate like the lips of a brass player. This modulation of the flow by the glottis creates an acoustic source, which is modified by the resonances and anti-resonances of the vocal-tract that result from its shape. Finally, the acoustic wave radiates outside of the head through the mouth and the nostrils.

In the center of figure 1.2, an acoustic model is depicted [Mae82a, MG76]. In this model, the impedance of the vocal apparatus is represented by area sections and their physical properties all along the structures. The impedance of the larynx is mainly defined by the glottal area. Using such a model, the forward problem of voice synthesis can be well approximated by numerical integration of the associated differential equations [Mae82a]. Note that, in such a model, the glottal flow (the air flow going through the glottal area) is an implicit variable of the system. Other models, which are not illustrated here, take into account the influence of the glottal flow on the vocal folds motion [PHA94, ST95]. In this case, the glottal area is an implicit variable which is influenced by the imposed mechanical properties of the vocal folds.

To the right of figure 1.2, the source-filter model is depicted [Mil59]. This model simplifies the acoustic model using two strong assumptions:

I The modulation of the flow by the glottis is independent of the variations of the vocal-tract impedance (i.e. the glottal source is equal to the glottal flow). The possible coupling between the acoustic source at the glottis level and the vocal-tract impedance is thus constant.

II In the time domain, voice production can be represented by means of convolutions of its elements. Therefore, in the spectral domain, one can write:

$$S(\omega) = G(\omega) \cdot C(\omega) \cdot L(\omega) \qquad (1.1)$$

where $G(\omega)$ is the spectrum of the acoustic excitation at the glottis level, the resonances and anti-resonances of the vocal-tract are merged into a single filter $C(\omega)$ termed the Vocal-Tract Filter (VTF), and the radiation at the mouth and nostrils level is merged into a single filter $L(\omega)$ termed radiation. Such a system is thus linear and its elements can be commuted.
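The equivalence between time-domain convolution and the spectral product of equation (1.1) can be checked numerically. The following is only a minimal sketch: the three impulse responses are hypothetical toy sequences (the first-difference radiation in particular is an assumption made for illustration, not a definition taken from this text), and the DFT is a naive implementation kept for readability.

```python
import cmath

def dft(x):
    """Naive discrete Fourier transform of a sequence."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * m * k / n) for k in range(n))
            for m in range(n)]

def circular_convolve(a, b):
    """Circular convolution of two sequences of equal length."""
    n = len(a)
    return [sum(a[k] * b[(i - k) % n] for k in range(n)) for i in range(n)]

# Hypothetical toy sequences, zero-padded to 8 samples so that the
# circular convolution equals the linear one (total support is 7 samples).
g_src = [0.0, 0.5, 1.0, 0.2, 0.0, 0.0, 0.0, 0.0]   # glottal excitation g
c_vtf = [1.0, 0.6, 0.3, 0.0, 0.0, 0.0, 0.0, 0.0]   # vocal-tract filter c
l_rad = [1.0, -1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]  # radiation l (assumed first difference)

# Time domain: s = g * c * l (convolutions).
s_time = circular_convolve(circular_convolve(g_src, c_vtf), l_rad)

# Spectral domain: S = G . C . L (bin-by-bin product).
s_product = [G * C * L for G, C, L in zip(dft(g_src), dft(c_vtf), dft(l_rad))]
max_error = max(abs(u - v) for u, v in zip(dft(s_time), s_product))

# Commuting the elements gives the same output signal.
s_swapped = circular_convolve(circular_convolve(l_rad, c_vtf), g_src)
max_swap_error = max(abs(u - v) for u, v in zip(s_time, s_swapped))
```

Both `max_error` and `max_swap_error` stay at the level of floating-point rounding, which illustrates why the elements of a linear source-filter system can be reordered freely.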

In addition to these two hypotheses, although the influence of the glottal area on the VTF can be modeled using a time-varying filter, the VTF is usually assumed to be independent of the glottal area variations and stationary during a period of vocal folds vibration.

Given the assumptions used in the source-filter model, it is important to compare these two models and their purposes. On the one hand, as proposed by the acoustic model, it is interesting to reproduce voice production analytically or numerically as closely as possible to physical measurements in order to understand and describe it (e.g. understand the mechanical behavior of the vocal folds, study the different levels of coupling between the acoustic source and the vocal-tract impedance). On the other hand, one can be interested in the manipulation of the perceived elements of the voice. For this latter purpose, modeling all the physical behaviors of voice production can be unnecessary [Lju86] (e.g. if we assume that the couplings can be neglected for this purpose). This perception-based point of view partly explains why the source-filter model is widely used for voice transformation and speech synthesis. Since this study shares the same objectives, the source-filter model has been used.

Additionally, to transform a given speech recording, it is necessary to estimate the parameters of the voice production model which is used. Regarding this inverse problem, the source-filter model has a strong advantage over the acoustic model. The source-filter model is more flexible than the interpretation depicted for the voice in figure 1.2. Indeed, the spectrum of any signal can be decomposed into a smooth amplitude envelope and the residual of this envelope. For example, the linear prediction method was not developed especially for speech [Gol62, Yul27], although this analysis tool has been widely used and its results interpreted in the context of speech [MG76]. Similarly, the minimum/maximum-phase decomposition by means of the complex cepstrum, which can be interpreted as a source-filter separation, was developed not only for voice analysis but also for radar signal analysis and image processing [OSS68]. Regarding the acoustic model, although it has been shown that the vocal-tract area function can be approximated using linear prediction [Den05, MG76], it has also been shown that very different vocal-tract area functions can imply very close vocal-tract impedances [Son79]. The inversion of an acoustic model is thus ill-conditioned and currently remains an open problem, although it is of great interest to obtain a model which is closer to voice production than the source-filter model. In this study, due to the inversion issues of the acoustic models and the flexibility of the source-filter model, we propose a separation scheme for the latter.
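To make the envelope/residual decomposition concrete, here is a minimal sketch of linear prediction using the autocorrelation method with the Levinson-Durbin recursion. This is a standard textbook formulation chosen for illustration, not necessarily the variant used in the cited works; the function names, the test filter coefficients and the signal length are all hypothetical.

```python
def autocorrelation(x, max_lag):
    """Autocorrelation r[0..max_lag] of a finite sequence."""
    n = len(x)
    return [sum(x[i] * x[i + lag] for i in range(n - lag))
            for lag in range(max_lag + 1)]

def levinson_durbin(r, order):
    """Solve the normal equations; returns a = [1, a1, ..., ap] and the error energy."""
    a = [1.0] + [0.0] * order
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] + sum(a[j] * r[i - j] for j in range(1, i))
        k = -acc / err                      # reflection coefficient
        new_a = a[:]
        for j in range(1, i):
            new_a[j] = a[j] + k * a[i - j]
        new_a[i] = k
        a = new_a
        err *= 1.0 - k * k
    return a, err

def all_pole_filter(e, a):
    """Synthesis: x[n] = e[n] - sum_{j>=1} a[j] x[n-j] (the 'envelope' filter 1/A)."""
    x = []
    for n in range(len(e)):
        x.append(e[n] - sum(a[j] * x[n - j] for j in range(1, len(a)) if n - j >= 0))
    return x

def inverse_filter(x, a):
    """Analysis: residual e[n] = sum_j a[j] x[n-j] (filtering by A)."""
    return [sum(a[j] * x[n - j] for j in range(len(a)) if n - j >= 0)
            for n in range(len(x))]

# Hypothetical test signal: impulse response of a stable two-pole filter.
true_a = [1.0, -1.3, 0.8]
excitation = [1.0] + [0.0] * 399
signal = all_pole_filter(excitation, true_a)

est_a, pred_err = levinson_durbin(autocorrelation(signal, 2), 2)
residual = inverse_filter(signal, est_a)
```

On this synthetic signal the estimated coefficients match `true_a` and the residual reduces to the original impulse. Nothing in the procedure is specific to voice: it fits a smooth all-pole envelope to whatever signal it is given, which is the flexibility discussed above.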

The chosen approach: the glottal model

For voice transformation and speech synthesis, most of the existing methods tend to model the voiced signal with a minimum of a priori knowledge about voice production. For example, although the phase vocoder [FG66] is designed for the voice, it also achieves efficient pitch transposition and time stretching of musical signals [LD99a]. In other methods, the vocal folds vibration is often assumed to be periodic. Therefore, the fundamental frequency is used in current methods (e.g. WBVPM [Bon08], STRAIGHT [KMKd99], HNM [Sty96], PSOLA [MC90, HMC89]). In this study, using more a priori knowledge about voice production is assumed to improve the quality of voice transformation and speech synthesis. Among all the possibilities, this study focuses on an analytical description of the deterministic component of the glottal source: a glottal model.

Regarding the control of voice manipulations, a glottal model should be of great interest. Indeed, regardless of the method, once the voiced signal is modeled, the parameters of the model used have to be modified in order to obtain the target effect (e.g. create a breathy voice from a neutral voice). However, such a link between low-level parameters and high-level voice qualities is not obvious. Therefore, the control of a voice model which uses a glottal model should be easier than that of one using parameters which are close to the signal properties (e.g. frequencies, amplitudes and phases of a sinusoidal model). Indeed, the parameters of a glottal model link its phase and amplitude spectra in a way which respects physical assumptions and constraints. However, the use of a glottal model implies a model of voice production which is less flexible than the simple residual-filter model. Whereas the latter applies to any signal, a glottal model is limited to the signals it can be fitted to. Accordingly, in this study, we assume that a glottal model can fit the excitation source of the source-filter model. Starting from this assumption, this study mainly intends to answer the following three questions:

1) How to estimate the parameters of a glottal model?

2) How to estimate the Vocal-Tract Filter according to this glottal model?

3) How to transform and synthesize a voiced signal using this glottal model?

Part II of this document tries to answer the first question and to partly answer the second one using a reduced representation of the VTF. An analysis/synthesis procedure which fully represents the VTF is proposed in part III and tries to handle the third question.

For voice modeling, glottal models have already been used in ARX/ARMAX-based methods [Lju86, Hed84]. However, although this approach has been studied for two decades, no implementation of these methods has achieved results as lasting as those of other techniques in voice transformation and synthesis, mainly because of robustness issues [AR09]. Indeed, the following methods have shown capabilities to model voice production in various applications: WBVPM for pitch transposition in Vocaloid [Bon08]; STRAIGHT for HMM-based synthesis [YTMK01]; PSOLA and phase vocoder for voice transformation [Roe10, FRR09, LD99b]. Consequently, as assumed in other recent studies [AR08, VRC07, Lu02], the use of a glottal model remains a challenging problem for voice transformation and speech synthesis. Regarding the robustness issues encountered by ARX/ARMAX-based methods, in order to reduce the degrees of freedom of the voice production model used in this study, the shape of the glottal model is controlled by only one parameter. In return, this drastically reduces the space of signals the proposed methods can be applied to. Increasing the flexibility of the proposed solutions should be a next step.

To the best of the author’s knowledge, many studies have already been dedicated to the time and spectral properties of glottal models [van03, Hen01], whereas the estimation of the parameters of these models has received less attention. Accordingly, we consider that developing the knowledge and the understanding of estimation methods of glottal parameters is of great interest. In this study, based on the minimum and maximum phase properties of the speech signal, various methods are proposed. In addition, the conditions a glottal model has to satisfy in order to obtain reliable estimates of its parameters are discussed for the proposed methods.

Evaluation and validation of the proposed methods

Even though the estimation of glottal parameters is currently an active research field [DDM+08, VRC05b, Fer04, Lu02], the validation of the corresponding methods is a tricky problem. Indeed, the ground truth of voice production is far from accessible. A measurement of the glottal flow, which is usually associated with the source of the source-filter model, could be compared to glottal model estimates. However, measuring this flow is an equally difficult problem. Moreover, the acoustic coupling between the glottal flow and the vocal-tract makes this comparison difficult to establish.

Before computer resources reached a sufficiently high level to make robust evaluations possible using large databases [PB09, Str98, DdD97], most evaluations were made with a few demonstrative pictures. However, it is difficult to know to what extent those star examples are representative of the general behavior of the presented methods. Consequently, in this study, a database of synthetic signals is used to evaluate the efficiency of the proposed methods. Although this approach allows the theoretical limits of the methods to be evaluated, it evaluates neither the robustness of the methods nor the capability of the voice model used to fit an observed signal. Consequently, ElectroGlottoGraphic (EGG) signals are used in this study to create references which are compared to estimated glottal parameters. In the current literature, the evaluation of estimation methods is often avoided by instead evaluating, with preference tests, the transformation and synthesis capabilities of procedures using these estimation methods [Lu02], [Yos02, KMKd99], [RSP+08, Alk92]. In this study, this approach is also considered using the analysis/synthesis method presented in chapter 9.

Structure of the document

Part I: Voice production and its models

The vocal apparatus is described in this part with the details necessary to review the usual simplifications and hypotheses made by the source-filter model. However, given the interdisciplinary nature of this part, one could dedicate a whole study to each of its points of view: the physiology of the structures, the acoustic models, the perception of the voice, etc. Therefore, given the purpose of this study, the descriptions given in this part are assumed to be sufficient to establish the voice production model which is used in the two following parts. Chapter 2 describes the excitation source, which is filtered by the elements presented in chapter 3.

Part II: Analysis

The second part first discusses in chapter 4 the separation problem, its related hypotheses and its state of the art. In the following chapters, various estimation methods of glottal parameters are presented, together with their operating conditions. Finally, chapter 8 deals with the evaluation of those methods using synthetic and EGG signals.

Part III: Voice transformation and speech synthesis

Glottal source and vocal-tract separation can be used in many applications: voice transformation, speech synthesis, identity conversion, speaker recognition, clinical diagnosis, affect classification, etc. This part presents an analysis/synthesis method in chapter 9 which uses a glottal model in order to properly separate the glottal source and the vocal-tract filter. Chapter 10 evaluates the efficiency of this method in the context of pitch transposition, breathiness modification and speech synthesis.

All chapters end with a list of concluding remarks, and the last chapter, chapter 11, closes this document by discussing the contributions of this work and adding remarks on future directions.

The glottal source

Anything which can vibrate in the vocal apparatus is a possible acoustic source: the vocal folds, the velum and/or uvula (snoring), the tongue (alveolar trill /r/), ventricular folds (harsh voice in metal music), aryepiglottic folds [EMCB08], etc. However, in this study we will consider only the vibration of the vocal folds, since it is the source of the voiced phonemes on which voice quality depends.

Vocal folds & glottal area

The larynx contains the cartilages and the muscles which control the mechanical properties, the length, the tension, the thickness and the vibrating mass of the vocal folds. Figure 2.1(a) shows a diagram of a vertical cut of the larynx, whereas figure 2.1(b) shows an in vivo image of the vocal folds taken downward from the pharynx using High-Speed Videoendoscopy (HSV). Pushed by the air coming from the lungs, the vocal folds vibrate. Most of the time, this vibration is periodic because the vocal folds motion is maintained by a self-sustained mechanism. The resulting motion is thus not neurologically controlled. For a standard male voice, the fundamental frequency $f_0$ of this vibration is around 120 Hz, whereas 200 Hz corresponds more to female voices and 400 Hz is easily reached by a child.

Using High-Speed Videoendoscopy (HSV), it is possible to record the vocal folds motion (see fig. 2.1(b)). The area of the glottis can then be estimated on each image of such a recording (see Appendix C for the method that we propose). During this study, we used an HSV camera to make 183 recordings with synchronized ElectroGlottoGraphic (EGG) signals and acoustic waveforms [DBR08]. Although we recorded healthy speakers, mainly singers, figure 2.2 shows the diversity of glottal area functions which can exist. Examples (a) and (b) show that the glottal area can be skewed to the left as well as to the right (also shown in [SKA09]). Additionally, example (f) shows that ripples can appear on the glottal area. Note that simulations of the glottal flow with Maeda’s model [Mae82a] (see appendix D) can also show a glottal flow which is skewed in both directions.

Bernoulli’s effect

When the air passes through the vocal folds, its speed is larger than when it is released into the space above the glottis. A Bernoulli effect is thus created, and the vocal folds motion can be influenced by this pressure difference (Level 2 interaction in [Tit08]). From the point of view of the source-filter model, we assume that this effect is included in the characteristics of the glottal source (i.e. according to our objectives, it is not necessary to separate this effect from the source).

Laryngeal mechanisms & voice quality

The vocal folds are made of different layers, mainly the body and the cover (see fig. 2.1(a)). Depending on the muscle tension, the cover can vibrate without a vibration of the main body. Moreover, the folds have a certain thickness. Therefore, the upper edge can vibrate without vibration of the lower edge. These differences of vibration imply laryngeal mechanisms which exclude each other. Because of this exclusion, changing from one mechanism to another creates a pitch discontinuity in untrained singers [RHC09, Hen01]. A brief description of the usual laryngeal mechanisms follows [RHC09].

Mechanism 1, known as Modal voice or Chest voice

In this mechanism, both the body and the cover of the vocal folds vibrate. The usual fundamental frequency is about 120 Hz for a male speaker and about 180 Hz for a female speaker. The closed duration of the glottis represents an important part of the fundamental period (e.g. fig. 2.2(b)). This is the most used mechanism in speech.

Samples: middle of USC08.50.*, end of USC08.16.*

Mechanism 2, known as Falsetto voice, Soft voice or Head voice

The larynx muscles make the vocal folds thinner than in mechanism 1. Consequently, on each vocal fold, the upper edge vibrates while the lower edge remains fixed. Additionally, whereas the cover moves, the body is assumed to be fixed. The fundamental frequency can easily be twice that of mechanism 1. The closed duration can be difficult to define on an HSV recording, but the open duration is clearly longer than in mechanism 1 (e.g. fig. 2.2(d)). This mechanism is mainly used by children and often used by female speakers.

Samples: USC08.65.*, start of USC08.16.*

Whistle voice (sometimes termed Mechanism 3)

The physiological and mechanical characteristics of this mechanism are not yet described as well as those of the two previous ones, and it seems to be fairly similar to mechanism 2. The fundamental frequency is usually above 1000 Hz. Unlike the two previous mechanisms, this mechanism is never used in speech. Soprano singers can use this mechanism, as can young children when shouting.

Vocal fry (sometimes termed Mechanism 0 or Creaky voice)

In this mechanism, the vocal folds motion is irregular and creates a popping with an average frequency between 10 and 50 Hz (e.g. fig. 2.2(f)). Due to this irregularity, a pitch is not properly perceived. This mechanism is frequent in speech and often appears at the end of sentences in English, when the lung pressure slackens. Note that many variations of this mechanism exist: exhaled, inhaled, alternated.

Samples: exhaled fry USC07.62.*, inhaled fry USC07.61.*, end of snd_arctic_bdl.1.wav

For all laryngeal mechanisms, various voice qualities exist. For instance, as depicted in figure 2.2(a,b,c) for mechanism 1, the larynx can vary between lax and tense states (see USC08.50.* 0.65 < t < 1.80 s). Additionally, aspiration noise can be generated through a leakage at the posterior side of the glottis which is created by the abduction of the vocal folds (see sample USC08.60.* t = 1.25 s and section 2.6 for its description). For the following, we define a voice quality axis, termed the breathy-tense axis, which is relevant for speech. At one end of this axis is the breathy voice, a lax voice with significant aspiration noise. Then, as the vocal folds tighten, the aspiration noise is reduced and, at high frequencies, the deterministic source is increased and thus more easily perceived, leading finally to the tense voice at the other end of this axis.

Glottal flow vs. Glottal source

As already seen in the introduction, one of the main differences between the acoustic model and the source-filter model is related to the modeling of the source of voice production. Therefore, in this section, the glottal source of the source-filter model is described and its relations to the glottal flow are discussed. The glottal flow is the air flow coming from the lungs which is modulated by the glottal area. First, compared to the glottal area function, the glottal flow is skewed toward positive time [Tit08, CW94, Fla72a]. Therefore, it is not possible to express the glottal flow with an instantaneous function such as flow(t) = f(area(t)) where f(x) would be independent of t. Secondly, the glottal flow is a non-linear function of the glottal area and the sub-glottal pressure [Fla72a]. Therefore, from these two points, the band limit of the glottal flow is higher than that of the glottal area. Thirdly, the glottal flow is dependent on the vocal-tract shape, since the latter defines the acoustic load above the glottis (described as Level 1 interaction in [Tit08]). Figure 2.3 shows simulations of the glottal flow for various phonemes and various subglottal pressures from a glottal area measured on an HSV recording. A clear skewness effect of the flow is shown with the phoneme / E/. Additionally, one can see ripples on the ascending branch of the pulses which vary significantly between the phonemes.

If we assume that the relation between the glottal flow and the flow at the output of the vocal apparatus is linear, the real glottal source of the source-filter model is the glottal flow as described above and illustrated in figure 2.3. Consequently, if we use a glottal source which is defined as independent of the vocal-tract properties, we have to assume the following points: 1) the pulse skewness and the non-linear relation with the glottal area are the same for all vocal-tract shapes; 2) the ripples of the glottal flow which depend on the vocal-tract impedance are neglected. Accordingly, considering a source-filter model, we define in this study the glottal source as a non-interactive signal description of the voice source, which is thus independent of the variations of the vocal-tract impedance.

Glottal models

Many non-interactive glottal models have been proposed to define analytically one period of the glottal flow [DdH06, CC95]. In the following, these models will be used to describe the deterministic component of the glottal source. As shown in figure 2.4, existing models mainly use a set of time instants (known as T parameters [Vel98]):

$t_s$ Time of the start of the pulse (for the following definitions, we assume $t_s = 0$).

$t_i$ Time of the maximum of the time-derivative.

$t_p$ Time of the maximum of the pulse. This maximum is termed the voicing amplitude $A_v$.

$t_e$ Time of the minimum of the time-derivative.

$t_a$ The return phase duration.

$t_c$ Time of the end of the pulse.

$T_0$ Duration of the period, $T_0 = 1/f_0$.

Then, each glottal model $g(t)$ defines a set of analytical curves passing through these points. Although most of the glottal models are mainly defined in the time domain, it has been shown that their amplitude spectrum $|G(\omega)|$ can be stylized as in the right plots of figure 2.4 [DdH06]. In the following list, we briefly describe the best known and most used glottal models:

Rosenberg [Ros71]

Initially, Rosenberg proposed 6 different models to fit a glottal pulse estimated by inverse filtering (see sec. 4.4). In [Ros71], a preference test was used to select the model which sounds best as a source. The best model, the Rosenberg-B model, is thus referred to as the Rosenberg model.
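As an illustration of how a glottal model turns the time instants listed above into an analytical curve, the sketch below generates one period of a polynomial pulse in the form commonly cited for the Rosenberg-B model. The formula is quoted from memory of the usual presentation and is not given in this text, so it should be treated as an assumption; the function names and the default ratios placing $t_p$ and $t_c$ in the period are hypothetical values chosen only for the example.

```python
def rosenberg_b(t, tp, tc, av=1.0):
    """Polynomial glottal pulse (form commonly cited as Rosenberg-B; assumed here).

    t  : time in seconds, with the pulse starting at ts = 0
    tp : time of the maximum of the pulse
    tc : time of the end of the pulse
    av : voicing amplitude (value of the pulse at tp)
    """
    if 0.0 <= t <= tp:
        u = t / tp
        return av * (3.0 * u ** 2 - 2.0 * u ** 3)   # rising branch
    if tp < t <= tc:
        u = (t - tp) / (tc - tp)
        return av * (1.0 - u ** 2)                  # falling branch
    return 0.0                                      # closed phase

def pulse_period(f0, fs, tp_ratio=0.4, tc_ratio=0.56):
    """Sample one period T0 = 1/f0 at rate fs; the ratios are illustrative only."""
    t0 = 1.0 / f0
    n = int(round(fs * t0))
    return [rosenberg_b(k / fs, tp_ratio * t0, tc_ratio * t0) for k in range(n)]

# One period at f0 = 120 Hz (the male-voice value quoted earlier), sampled at 16 kHz.
pulse = pulse_period(120.0, 16000.0)
```

The pulse rises smoothly from zero at $t_s = 0$ to $A_v$ at $t_p$, falls back to zero at $t_c$, and stays at zero for the rest of the period, which corresponds to the closed phase of the glottis.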

Table of contents:

Abstract & Résumé

Notations, acronyms and usual expressions

1 Introduction

1.1 Problematics

1.1.1 Source-filter model vs. acoustic model

1.1.2 The chosen approach: the glottal model

1.1.3 Evaluation and validation of the proposed methods

1.2 Structure of the document

I Voice production and its model

2 The glottal source

2.1 Vocal folds & glottal area

2.2 Laryngeal mechanisms & voice quality

2.3 Glottal flow vs. Glottal source

2.4 Glottal models

2.5 Time and spectral characteristics of the glottal pulse

2.5.1 Time properties: glottal instants and shape parameters

2.5.2 Spectral properties: glottal formant and spectral tilt

2.5.3 Mixed-phase property of the glottal source

2.5.4 Vocal folds asymmetry, pulse scattering and limited band glottal model

2.6 Aspiration noise

Conclusions

3 Filtering elements and voice production model

3.1 The Vocal-Tract Filter (VTF)

3.1.1 Structures of the vocal-tract

3.1.2 Minimum-phase hypothesis

3.2 Lips and nostrils radiation

3.3 The complete voice production model

Conclusions

II Voice analysis

4 Source-filter separation

4.1 General forms of the vocal-tract filter and the glottal source

4.2 Estimation of glottal parameters

4.3 Spectral envelope models

4.4 The state of the art

4.4.1 Analysis-by-Synthesis

4.4.2 Pre-emphasis

4.4.3 Closed-phase analysis

4.4.4 Pole or pole-zero models with exogenous input (ARX/ARMAX)

4.4.5 Minimum/Maximum-phase decomposition, complex cepstrum and ZZT

4.4.6 Inverse filtering quality assessment

Conclusions

5 Joint estimation of the pulse shape and its position

5.1 Phase minimization

5.1.1 Conditions of convergence

5.1.2 Measure of confidence

5.1.3 Polarity

5.1.4 The iterative algorithm using MSP

5.2 Difference operator for phase distortion measure

5.2.1 The method using MSPD

5.3 Estimation of multiple shape parameters

5.3.1 Oq/m vs. Iq/Aq

Conclusions

6 Estimation of the shape parameter without pulse position

6.1 Parameter estimation using the 2nd order phase difference

6.1.1 The method based on MSPD2

6.2 Parameter estimation using function of phase-distortion

6.2.1 The method FPD1 based on FPD inversion

6.2.2 Conditioning of the FPD inversion

Conclusions

7 Estimation of the Glottal Closure Instant

7.1 The minimum of the radiated glottal source

7.2 The method using a glottal shape estimate

7.2.1 Estimation of a GCI in one period

7.2.2 Estimation of GCIs in a complete utterance

7.3 Evaluation of the error related to the shape parameter

Conclusions

8 Evaluation of the proposed estimation methods

8.1 Evaluation with synthetic signals

8.1.1 Error related to the fundamental frequency

8.1.2 Error related to the noise levels

8.2 Comparison with electroglottographic signals

8.2.1 Evaluation of GCI estimates

8.2.2 Evaluation of the shape parameter estimate

8.3 Examples of Rd estimates on real signals

Conclusions

III Voice transformation and speech synthesis

9 Analysis/synthesis method

9.1 Methods for voice transformation and speech synthesis

9.2 Choice of approach for the proposed method

9.3 The analysis step: estimation of the SVLN parameters

9.3.1 The parameters of the deterministic source: f0, Rd, Ee

9.3.2 The parameter of the random source: g

9.3.3 The estimation of the vocal-tract filter

9.4 The synthesis step using SVLN parameters

9.4.1 Segment position and duration

9.4.2 The noise component: filtering, modulation and windowing

9.4.3 Glottal pulse and filtering elements

Conclusions

10 Evaluation of the SVLN method

10.1 Influence of the estimated parameters on the SVLN results

10.2 Preference tests for pitch transposition

10.3 Evaluation of breathiness modification

10.4 Speech synthesis based on Hidden Markov Models (HMM)

Conclusions

11 General conclusions

11.1 Future directions

A Minimum, zero and maximum phase signals

A.1 The real cepstrum and the zero and minimum phase realizations

A.2 Generalized realization

B Error minimization and glottal parameters estimation

B.1 Error minimization

B.2 Parameter estimation based on glottal source estimate

B.2.1 Procedure for the Iterative Adaptive Inverse Filtering (IAIF)

B.2.2 Procedure for the Complex Cepstrum (CC) and the Zeros of the Z-Transform (ZZT)

C The glottal area estimation method

C.1 The proposed method for glottal area estimation

C.2 Spectral aliasing

C.3 Equipment

D Maeda’s voice synthesizer

D.1 Space and time sampling

D.2 Minimum-phase property of the vocal-tract

Bibliography

Publications during the study