
EXISTING SECURITY MECHANISMS AND THEIR LIMITATIONS

In practice, security relies on the definition and deployment of a security policy. Such a policy specifies the security rules and requirements that an information system must satisfy. These rules specify how information can and cannot be accessed, the procedures for recovery management and new user registration, how security services must be deployed, configured and parameterized, etc. Deploying a security policy is thus a complex process. Several standards now exist to guide it, the most recent ones, such as BS 7799-2 or ISO 27001, being based on the principle of continual security improvement known as the PDCA model (Plan, Do, Check, Act).

The first step in deploying a security policy is a risk analysis, carried out for example with the EBIOS [DCSSI, 2010] or MEHARI [CLUSIF, 2010] methods. This risk analysis is part of a process that identifies the security objectives and requirements an information system must achieve. Existing protection tools and devices are then deployed to counter or minimize the identified risks. Protection means can be classified into physical and logical mechanisms. Physical protection mechanisms concern the hardware and essentially aim at counteracting unauthorized physical access, theft and natural risks such as fire, flooding, etc. In theory, an information system should be placed in an isolated, protected zone with controlled access. Anti-theft mechanisms such as cable locks should also be deployed.

In addition to these mechanisms, maintenance ensures the proper functioning of the information system in terms of hardware and software. Although maintenance is governed by the security policy described above, it is usually provided by external service companies contractually bound to the health institution. These maintenance contracts usually take into account the constraints in terms of confidentiality, integrity and availability of information.

In practice, the effectiveness of physical protection is debatable, as health establishments are open structures in which patients mix with health professionals and equipment is easily accessible. This is why logical (i.e., computer-based) security mechanisms are important. To give a more comprehensive view of the existing logical security mechanisms for databases, we present them according to the security objectives they address (see Sect. 1.1.2).

DIFFERENCES BETWEEN MULTIMEDIA AND RELATIONAL CONTENTS

Although watermarking appears to be a promising complementary tool for database security, applying existing signal or image watermarking techniques is not straightforward. Relational databases differ from multimedia contents in several aspects that must be taken into account when developing a new watermarking scheme.

One of the main differences is that the samples of a multimedia signal (e.g., the pixels of an image) are sorted in a specific order, in a temporal (e.g., audio signal) or spatial (e.g., image or video) domain, and this order gives the content its meaning for the user. Neighboring samples are strongly correlated, usually with high information redundancy at a local level, i.e., between close samples or pixels. This is not the case for relational databases, whose purpose is to provide efficient storage of independent elements within a common structure. Thus, tuples in a relation are not stored in any specific order. At the same time, because tuples can be stored and reorganized in many ways in a relation without impacting the database content, the question arises of the synchronization between the insertion and reading processes. With signals or images, one can rely on their intrinsic structure, working for instance on blocks or groups of consecutive samples. The same strategy is not so easy to apply to relational databases, where tuples can be moved, added and removed. Identifying the watermarked elements of the database, and consequently synchronizing the insertion and detection stages, is therefore an issue to be solved.
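The synchronization problem can be illustrated with a small Python sketch. It uses a toy relation of hypothetical (primary key, value) pairs and SHA-256 as a stand-in keyed hash, both assumptions made for illustration only. Selecting every tenth stored tuple, as one would pick samples in a signal, designates different tuples once the DBMS reorders the relation, whereas selecting tuples from a keyed hash of their primary key does not depend on the storage order.

```python
import hashlib
import random


def keyed_select(pk: int, secret_key: bytes, gamma: int) -> bool:
    """Select a tuple from a keyed hash of its primary key (independent of storage order)."""
    digest = hashlib.sha256(secret_key + str(pk).encode()).digest()
    return int.from_bytes(digest, "big") % gamma == 0


def select_by_position(relation: list, step: int) -> set:
    """Select every `step`-th stored tuple, as one would pick consecutive samples in a signal."""
    return {pk for i, (pk, _value) in enumerate(relation) if i % step == 0}


def select_by_key(relation: list, secret_key: bytes, gamma: int) -> set:
    """Select the tuples whose keyed primary-key hash is 0 modulo gamma."""
    return {pk for pk, _value in relation if keyed_select(pk, secret_key, gamma)}


# Toy relation of (primary key, attribute value) pairs.
relation = [(pk, 40 + pk % 50) for pk in range(1000)]

# The DBMS reorganizes the tuples: same content, different storage order.
reordered = relation[:]
random.Random(0).shuffle(reordered)

print(select_by_position(relation, 10) == select_by_position(reordered, 10))  # False
print(select_by_key(relation, b"KS", 10) == select_by_key(reordered, b"KS", 10))  # True
```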

The frequency and nature of the manipulations applied to the data also differ. In the multimedia case, filtering and compression operations are common. They modify the values of the signal samples but do not change the signal structure (a filtered image remains close to its original version). In databases, tuple insertions and deletions are frequent. They can be seen as oversampling and subsampling operations, respectively, but with an irregular distribution, a rather rare situation in signal processing, especially if the output of the process should keep an image structure. Moreover, databases may be queried so as to extract only the part of the information that is of interest to the user.

Beyond the database structure and manipulations, one must also consider that the information contained in a database may come from different sources, such as different services of a hospital. Hence, the values in a database are very heterogeneous while remaining semantically linked: we may find numerical and categorical data, images, etc. This is not the case for multimedia signals, where all samples are numerical with the same dynamic range. As we will see in Chapter 4, this limits the perturbations the watermark can introduce if the database is to keep its meaningful value. Finally, multimedia watermarking relies on perceptual models, such as psychovisual models in the case of image or video watermarking, which take advantage of the limitations of human perception. These models allow the watermark to be embedded into regions that are less perceptible to the user. In the case of databases, the many ways of querying information complicate the construction of such a model and force authors to look for more appropriate approaches, based for example on data statistics or semantic links.

INDEPENDENCE OF THE INSERTION/READER SYNCHRONIZATION FROM THE DATABASE STRUCTURE

As discussed in the previous section, unlike images or signals, in which pixels or samples are ordered, the structure of a database, i.e., the way tuples and attributes are organized, is only loosely constrained. This is one of the key issues in database watermarking. For images and video, the watermark insertion and extraction processes take advantage of data organization, being synchronized through groups of samples or blocks of pixels; this question remains largely open in the case of databases. A DBMS can reorganize the tuples as it sees fit without changing the content or impacting the value of the database. Several methods have therefore been considered to make watermark insertion and reading independent of the database structure and of the way the database is stored, that is to say, to ensure correct synchronization between the embedding and detection processes.

The first approach, proposed by Agrawal and Kiernan [Agrawal and Kiernan, 2002], consists of secretly dividing the tuples into two groups based on a secret key, one of which contains the tuples to be watermarked. To obtain the group index of a tuple $t_u$ in the relation $R_i$, they use a HASH function modulo a parameter $\gamma \in \mathbb{N}$ that controls the number of tuples to modify. Let $t_u.PK$ denote the primary key of a tuple, $K_S$ the secret key, mod the modulo operation and $\|$ the concatenation operation; the condition $\mathrm{HASH}(K_S \| \mathrm{HASH}(t_u.PK \| K_S)) \bmod \gamma = 0$ indicates whether or not the tuple must be watermarked. In [Agrawal et al., 2003a] and [Agrawal et al., 2003b], the HASH operation is replaced by a pseudo-random generator seeded with the primary key of the tuple concatenated with the secret key. Note that this method can only embed a one-bit message, which restricts the range of possible applications. To increase the capacity, Li et al. [Li et al., 2005] proposed an extension of this method in which one message bit is embedded per selected tuple. This allows the insertion of a multi-bit watermark, offering more application options.
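A minimal Python sketch of the selection condition above may help. It models HASH with SHA-256 and concatenation with byte concatenation, and uses hypothetical string primary keys; these are assumptions for illustration, not details taken from [Agrawal and Kiernan, 2002]. On average, about one tuple in $\gamma$ satisfies the condition, and only a holder of $K_S$ can tell which ones.

```python
import hashlib


def hash_bytes(data: bytes) -> bytes:
    """Cryptographic hash standing in for HASH (SHA-256 is assumed here)."""
    return hashlib.sha256(data).digest()


def must_watermark(pk: str, ks: bytes, gamma: int) -> bool:
    """Test HASH(KS || HASH(tu.PK || KS)) mod gamma == 0."""
    inner = hash_bytes(pk.encode() + ks)   # HASH(tu.PK || KS)
    outer = hash_bytes(ks + inner)         # HASH(KS || HASH(tu.PK || KS))
    return int.from_bytes(outer, "big") % gamma == 0


ks = b"secret-key"
gamma = 10
pks = [f"patient-{i}" for i in range(10_000)]   # hypothetical primary keys
selected = [pk for pk in pks if must_watermark(pk, ks, gamma)]
print(len(selected) / len(pks))  # close to 1 / gamma
```

Increasing $\gamma$ reduces the number of modified tuples, and hence the distortion, at the cost of fewer watermarked tuples.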

A more advanced solution consists of a “tuple grouping operation” which outputs a set of $N_g$ non-intersecting groups of tuples $\{G_i\}_{i=1,\dots,N_g}$. This makes it possible to spread each watermark bit over several tuples, increasing robustness against tuple deletion or insertion.

The first strategy, proposed in [Sion et al., 2004], is based on the existence of special tuples called “markers”, which serve as boundaries between groups. First, tuples are ordered according to the result of a cryptographic HASH operation applied to the most significant bits (MSB) of the tuple attributes concatenated with a secret key $K_S$, as $\mathrm{HASH}(K_S \| MSB \| K_S)$. Then, the tuples for which $H(K_S \| t_u.PK) \bmod e = 0$ are chosen as group markers, where $e$ is a parameter that fixes the size of the groups. A group corresponds to the tuples between two group markers. This approach suffers from a significant drawback: deleting some of the markers induces a large loss of watermark bits. To overcome this issue, the most common strategy consists of calculating the group index number $n_u \in [0, N_g - 1]$ of $t_u$ as in eq. (1.2) [Shehab et al., 2008]. The use of a cryptographic hash function, such as the Secure Hash Algorithm (SHA), ensures a secure partitioning of the tuples and a roughly equal distribution of tuples among the groups.

$$n_u = H\big(K_S \,\|\, H(K_S \,\|\, t_u.PK)\big) \bmod N_g \qquad (1.2)$$
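Eq. (1.2) can be sketched in Python as follows, again assuming SHA-256 for $H$ and hypothetical string primary keys. The sketch checks that the groups have roughly equal sizes and that, unlike the marker-based strategy, deleting tuples leaves the group index of every remaining tuple unchanged, since $n_u$ depends only on $t_u.PK$ and $K_S$.

```python
import hashlib
from collections import Counter


def H(data: bytes) -> bytes:
    """Cryptographic hash (SHA-256 is assumed here)."""
    return hashlib.sha256(data).digest()


def group_index(pk: str, ks: bytes, n_groups: int) -> int:
    """n_u = H(KS || H(KS || tu.PK)) mod Ng, following eq. (1.2)."""
    inner = H(ks + pk.encode())
    outer = H(ks + inner)
    return int.from_bytes(outer, "big") % n_groups


ks = b"secret-key"
n_groups = 16
pks = [f"patient-{i}" for i in range(16_000)]   # hypothetical primary keys
index = {pk: group_index(pk, ks, n_groups) for pk in pks}

sizes = Counter(index.values())
print(min(sizes.values()), max(sizes.values()))  # both close to 16000 / 16 = 1000

# Delete every other tuple: the remaining tuples keep their group.
survivors = pks[::2]
print(all(group_index(pk, ks, n_groups) == index[pk] for pk in survivors))  # True
```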

One bit or symbol of the message is then embedded per group of tuples by modulating or modifying the values of one or several attributes according to the rules of the chosen watermarking modulation (e.g., modifying the attribute statistics as in [Sion et al., 2004] or the tuple order as in [Li et al., 2004]). Thus, with $N_g$ groups, the inserted message corresponds to a sequence of $N_g$ symbols $S = \{s_i\}_{i=1,\dots,N_g}$. Watermark reading works in a similar way. Tuples are first regrouped into $N_g$ groups, and one message symbol is detected and/or extracted from each group, depending on the modulation used. We return to these aspects in Section 2.3.2. Clearly, with such an approach, tuple primary keys must not be modified.
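The embedding and reading steps above can be sketched in Python with a deliberately simple, hypothetical modulation: the parity of an integer attribute of every tuple in group $G_i$ is forced to the bit $s_i$, and each symbol is read back by majority vote over its group. This toy parity rule is an assumption chosen for brevity; it is neither one of the modulations cited above nor one of the schemes proposed in the following chapters, and it is not lossless. Group indices follow eq. (1.2), with SHA-256 assumed for $H$.

```python
import hashlib
import random


def group_index(pk: int, ks: bytes, n_groups: int) -> int:
    """Group index of eq. (1.2), SHA-256 assumed for H."""
    inner = hashlib.sha256(ks + str(pk).encode()).digest()
    outer = hashlib.sha256(ks + inner).digest()
    return int.from_bytes(outer, "big") % n_groups


def embed(relation: dict, message: list, ks: bytes) -> dict:
    """Embed symbol s_i in every tuple of group G_i (toy parity modulation)."""
    marked = {}
    for pk, value in relation.items():
        bit = message[group_index(pk, ks, len(message))]
        # Force the parity of the value to the bit, changing it by at most 1.
        marked[pk] = value if value % 2 == bit else value + 1
    return marked


def extract(relation: dict, n_groups: int, ks: bytes) -> list:
    """Regroup the tuples, then decide each symbol by majority vote in its group."""
    votes = [[0, 0] for _ in range(n_groups)]   # [even count, odd count] per group
    for pk, value in relation.items():
        votes[group_index(pk, ks, n_groups)][value % 2] += 1
    return [int(odd > even) for even, odd in votes]


rng = random.Random(1)
ks = b"secret-key"
relation = {pk: rng.randint(40, 120) for pk in range(5000)}   # hypothetical integer attribute
message = [1, 0, 1, 1, 0, 0, 1, 0]
marked = embed(relation, message, ks)

# Tuple deletion attack: keep a random half of the watermarked tuples.
kept = dict(rng.sample(sorted(marked.items()), 2500))
print(extract(kept, len(message), ks) == message)  # True
```

Because each bit is spread over all the tuples of its group, deleting tuples only reduces the number of votes per group instead of destroying the group structure.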

Other approaches that do not use the primary key for group construction have also been proposed. Shehab et al. [Shehab et al., 2008] propose to base group creation on the most significant bits (MSB) of the attributes. The main disadvantage of this strategy is that groups can have very different sizes, since the MSBs may not be uniformly distributed. A strategy based on k-means clustering has been proposed by Huang et al. [Huang et al., 2009]. In this case, the secret key $K_S$ contains the initial positions of the cluster centers. However, this approach strongly depends on the database statistics, which can easily be modified by attacks.
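The group-size imbalance of MSB-based grouping can be illustrated with a short Python sketch. Assumptions for illustration only: the group of a tuple is taken as a keyed SHA-256 hash of the 3 MSBs of an 8-bit attribute modulo $N_g$ (a simplification, not necessarily the exact construction of [Shehab et al., 2008]), and the attribute is a hypothetical patient age drawn around 70 years. Since all tuples sharing the same MSBs fall into the same group, a concentrated attribute yields a few very large groups, whereas hashing the primary key spreads the tuples evenly.

```python
import hashlib
import random
from collections import Counter


def keyed_group(data: bytes, ks: bytes, n_groups: int) -> int:
    """Keyed hash (SHA-256 assumed) reduced modulo the number of groups."""
    return int.from_bytes(hashlib.sha256(ks + data).digest(), "big") % n_groups


def msb(value: int, total_bits: int = 8, kept_bits: int = 3) -> int:
    """Keep the `kept_bits` most significant bits of a `total_bits`-bit integer."""
    return value >> (total_bits - kept_bits)


ks = b"secret-key"
n_groups = 8
rng = random.Random(0)
# Hypothetical 8-bit attribute: patient ages concentrated around 70 years.
ages = [min(255, max(0, round(rng.gauss(70, 12)))) for _ in range(10_000)]

msb_sizes = Counter(keyed_group(bytes([msb(a)]), ks, n_groups) for a in ages)
pk_sizes = Counter(keyed_group(str(pk).encode(), ks, n_groups) for pk in range(len(ages)))

print(sorted(msb_sizes.values()))  # only a few non-empty groups, one of them very large
print(sorted(pk_sizes.values()))   # eight groups, each close to 10000 / 8 = 1250
```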

MEASURING DATABASE DISTORTION

As for any data protected by watermarking, one fundamental aspect is the preservation of the informative value of the database after the embedding process. The watermark should be invisible to the user, should not interfere with subsequent uses of the database, and should preserve the integrity and coherence of the database. It is therefore necessary to be able to evaluate and control the introduced distortion. Today, the distortion introduced in a database should be evaluated in two ways: statistically and semantically.

Most statistical distortion evaluation criteria come from database privacy protection, in which tuples are modified so as to make it impossible to distinguish an individual within a group of records (see Sect. 1.2.3). In this section, we present only those directly applicable to watermarking. It is usually assumed that the database will undergo some a posteriori data mining operations. Some measures have been proposed for the case where the specific operation (e.g., clustering, classification) is known a priori. These measures are referred to as “special-purpose metrics”, as opposed to general-purpose metrics. Among the latter, the simplest measure is the minimal distortion metric (MD), first introduced by Samarati [Samarati, 2001], which adds up a penalty for each suppressed or modified record.
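Following the description above, the MD metric can be sketched in Python as a count over records. The sketch assumes a unit penalty per record and represents a relation as a dictionary keyed by primary key, a record absent from the released version being counted as suppressed; the records themselves are hypothetical.

```python
def minimal_distortion(original: dict, released: dict) -> int:
    """Minimal distortion (MD): add a penalty of 1 for each suppressed or modified record."""
    penalty = 0
    for pk, record in original.items():
        if pk not in released:          # suppressed record
            penalty += 1
        elif released[pk] != record:    # modified record
            penalty += 1
    return penalty


# Hypothetical records: (sex, age, systolic blood pressure).
original = {1: ("F", 54, 120), 2: ("M", 61, 135), 3: ("F", 47, 118)}
watermarked = {1: ("F", 54, 121), 2: ("M", 61, 135)}   # record 1 modified, record 3 suppressed
print(minimal_distortion(original, watermarked))  # 2
```

Note that such a count ignores the amplitude of each modification, which is one reason why statistical and semantic criteria are also needed.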

Table of contents

Summary in French

Introduction

1 Health Databases Security and Watermarking fundamentals

1.1 Health Information

1.1.1 Health information definition

1.1.2 Security needs in health care

1.2 Relational Databases and Security

1.2.1 Fundamental notions of the relational model

1.2.2 Relational database operations

1.2.2.1 Update operations

1.2.2.2 Query operations

1.2.3 Existing security mechanisms and their limitations

1.2.3.1 Confidentiality

1.2.3.2 Integrity and authenticity

1.2.3.3 Availability

1.2.3.4 Traceability

1.2.3.5 Limitations

1.3 Watermarking as a complementary security mechanism in Databases

1.3.1 Fundamentals of Watermarking

1.3.1.1 Definition

1.3.1.2 How does watermarking work?

1.3.1.3 Watermarking applications

1.3.1.4 Watermarking system properties

1.3.2 Database Watermarking

1.3.2.1 Differences between multimedia and relational contents

1.3.2.2 Independence of the insertion/reader synchronization from the database structure

1.3.2.3 Measuring database distortion

1.3.2.4 Overview of existing methods

1.4 Conclusion

2 Lossless Database Watermarking

2.1 Applications of lossless watermarking for medical databases

2.1.1 Health Databases protection

2.1.1.1 Reliability Control

2.1.1.2 Database traceability

2.1.2 Insertion of meta-data

2.2 Overview of existing lossless methods in database watermarking

2.3 Proposed lossless schemes

2.3.1 Circular histogram watermarking

2.3.2 Fragile and robust database watermarking schemes

2.3.3 Linear histogram modification

2.3.4 Theoretical performances

2.3.4.1 Capacity Performance

2.3.4.2 Robustness Performance

2.3.5 Experimental results

2.3.5.1 Experimental Dataset

2.3.5.2 Capacity Results

2.3.5.3 Robustness Results

2.3.6 Comparison with recent robust lossless watermarking methods

2.3.7 Discussion

2.3.7.1 Security of the embedded watermark

2.3.7.2 False positives

2.4 Conclusion

3 Traceability of medical databases in shared data warehouses

3.1 Medical shared data warehouse scenario

3.2 Theoretical analysis

3.2.1 Statistical distribution of the mixture of two databases

3.2.2 Extension to several databases

3.2.3 Effect on the detection

3.3 Identification of a database in a mixture

3.3.1 Anti-collusion codes

3.3.2 Detection optimization based on Soft decoding and informed decoder

3.3.2.1 Soft-decision based detection

3.3.2.2 Informed decoder

3.4 Experimental Results

3.4.1 Correlation-based detection performance

3.4.2 Proposed detectors performance

3.5 Conclusion

4 Semantic distortion control

4.1 Overview of distortion control methods

4.2 Semantic knowledge

4.2.1 Information representation models in healthcare

4.2.2 Definition of ontology

4.2.3 Components of an ontology

4.2.4 Existing applications of ontologies

4.2.5 How are relational database and ontology models represented?

4.3 Ontology guided distortion control

4.3.1 Relational databases and ontologies

4.3.2 Identification of the limit of numerical attribute distortion

4.3.3 Extension of the proposed approach to categorical attributes

4.4 Application to robust watermarking

4.4.1 QIM Watermarking and signals

4.4.2 Modified Circular QIM watermarking

4.4.2.1 Construction of the codebooks

4.4.2.2 Message embedding and detection

4.4.2.3 Linear histogram modification

4.4.3 Theoretical robustness performance

4.4.3.1 Deletion Attack

4.4.3.2 Insertion Attack

4.4.4 Experimental results

4.4.4.1 Experimental dataset

4.4.4.2 Domain Ontology

4.4.4.3 Performance criteria

4.4.4.4 Statistical Distortion Results

4.4.4.5 Robustness Results

4.4.4.6 Computation Time

4.4.5 Performance comparison results with state of art methods

4.4.5.1 Attribute P.D.F preservation

4.4.5.2 Robustness

4.4.5.3 Complexity

4.5 Conclusion

5 Expert validation of watermarked data quality

5.1 Subjective perception of the watermark

5.1.1 Perception vs Acceptability

5.1.2 Incoherent and unlikely information

5.2 Watermarking scheme

5.3 Protocol

5.3.1 Blind test

5.3.1.1 Proposed questionnaire

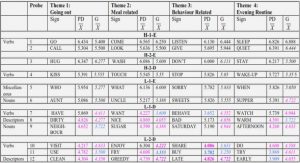

5.3.1.2 Experimental plan

5.3.2 Informed test

5.3.2.1 Proposed questionnaire

5.3.2.2 Experimental plan

5.3.3 Bias in the study

5.3.3.1 Test representativeness

5.3.3.2 Evaluator understanding of the problematic

5.3.3.3 A priori knowledge of the possible presence of a watermark

5.3.3.4 Quest for a watermark vs database content interpretation

5.4 Experimental Results

5.4.1 Presentation of the evaluator

5.4.2 Blind test

5.4.2.1 Experiment duration issue

5.4.2.2 Responses analysis

5.4.3 Informed test

5.5 Conclusion

6 Conclusion and Perspectives

A Truncated Normal distribution

B Impact of database mixtures on the watermark: Additional calculation

B.1 Calculation of 2 s,W

B.2 Vector rotations

C Neyman-Pearson Lemma

D Calculation of the detection scores and thresholds

D.1 Soft-decision based detection

D.2 Informed detection